April 23, 2015 weblog

Zensors: Making sense with live question feeds

Getting answers to the questions you really want to ask, beyond whether a door is open or shut, could be rather easy. A video on YouTube demonstrates a system called Zensors. Started at Carnegie Mellon last year and developed by a team of faculty and graduate students from the school's Human-Computer Interaction Institute, Zensors is promoted as sensing made easy.

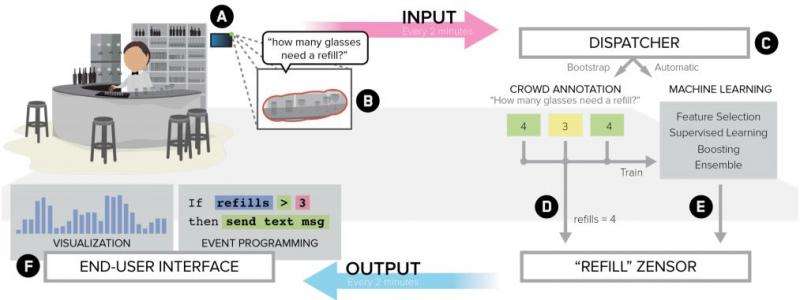

Place your unused smartphone in a convenient location. Ask a question about your home, your business, your city, or anything else. A restaurant owner, for example, can ask "how many drinks are almost empty," "is there a check sitting on the table," or "does table four need to be cleaned." A city question might be "how many cars are there in the parking lot," and other possibilities include "is the printer in use," "how long is the line for coffee," and "are people using their laptops." Then set how frequently your sensor runs. That's it: you have a sensor producing live data. With just one device you can create dozens of sensors.

Beyond counting, sensors can answer yes-or-no, scale, and multiple-choice questions. In a CHI presentation, Gierad Laput of the Human-Computer Interaction Institute at Carnegie Mellon said the team built Zensors as a sensing approach that fuses real-time human intelligence from online crowd workers with automatic approaches to provide robust, adaptive, and readily deployable intelligent sensors. "With Zensors, users can go from question to live sensor feed in less than 60 seconds. Through our API, Zensors can enable a variety of rich end-user applications and moves us closer to the vision of responsive, intelligent environments."
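The ingredients of a sensor as described here (a plain-text question, an answer type, and a sampling frequency) can be pictured as a small record. The sketch below is illustrative only: `SensorDefinition`, `AnswerType`, and every field name are hypothetical and are not taken from the Zensors app or its API.

```python
from dataclasses import dataclass
from enum import Enum


class AnswerType(Enum):
    """The four question styles mentioned in the article."""
    YES_NO = "yes_no"
    COUNT = "count"
    SCALE = "scale"
    MULTIPLE_CHOICE = "multiple_choice"


@dataclass(frozen=True)
class SensorDefinition:
    """Hypothetical description of one sensor: what to ask and how often."""
    question: str
    answer_type: AnswerType
    interval_seconds: int      # how frequently the sensor runs (assumed unit)
    choices: tuple = ()        # only used for multiple-choice questions

    def __post_init__(self):
        # Reject definitions that could never produce a usable feed.
        if not self.question.strip():
            raise ValueError("question must not be empty")
        if self.interval_seconds <= 0:
            raise ValueError("interval_seconds must be positive")
        if self.answer_type is AnswerType.MULTIPLE_CHOICE and len(self.choices) < 2:
            raise ValueError("multiple-choice sensors need at least two choices")


# One device can host several sensors, as in the restaurant example.
restaurant_sensors = [
    SensorDefinition("How many drinks are almost empty?", AnswerType.COUNT, 60),
    SensorDefinition("Is there a check sitting on the table?", AnswerType.YES_NO, 30),
    SensorDefinition("Does table four need to be cleaned?", AnswerType.YES_NO, 120),
]
```

The intervals shown are arbitrary placeholders; the article says only that the user chooses how frequently each sensor runs.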

The team presented their paper, "Zensors: Adaptive, Rapidly Deployable, Human-Intelligent Sensor Feeds," authored by Laput, Jason Wiese, Robert Xiao, Jeffrey Bigham, and Chris Harrison from Carnegie Mellon and Walter Lasecki from the University of Rochester.

The nice thing about their concept is the opportunity it offers to repurpose old mobile devices as sensor hosts. "Users upgrade their devices on average once every two years. It is not uncommon for people to have a slew of older smart devices stashed in a drawer or closet. Although older, these devices are capable computers, typically featuring one or more cameras, a touchscreen, and wifi connectivity," the paper stated. Such devices can support image-based sensing. The authors explained that users download the Zensors app onto their devices, which lets them create or modify sensors. "Users then 'stick' the device in a context of their choosing."

Going back to the restaurant scenario, the paper explains what goes on: the restaurant owner's sensors are initially powered by crowd workers interpreting the owner's plain-text question, providing immediate human-level accuracy as well as human-centered abstractions. Because crowd workers can be costly and difficult to scale, however, they are ideally used only temporarily: answers from the crowd are recorded and used as labels to bootstrap automatic processes.

Harrison said the fused answers from crowd workers and automatic approaches provide instant, human-intelligent sensors, which end users can set up in under one minute. The authors stated that "Once enough data labels have been collected, Zensors can seamlessly hand-off image classification to machine learning utilizing computer-vision-derived features."
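The hand-off described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the fixed label-count threshold (`min_labels`), the nearest-centroid classifier, the `HybridSensor` class, and the `fake_crowd` stand-in are all hypothetical. The article says only that classification moves to machine learning "once enough data labels have been collected."

```python
class HybridSensor:
    """Answers from the crowd first, then from a model trained on crowd labels."""

    def __init__(self, ask_crowd, min_labels=50):
        self.ask_crowd = ask_crowd    # callable: features -> label (hypothetical crowd call)
        self.min_labels = min_labels  # assumed hand-off threshold, not from the paper
        self._sums = {}               # label -> per-dimension sums of feature vectors
        self._counts = {}             # label -> number of crowd labels recorded

    @property
    def label_count(self):
        return sum(self._counts.values())

    def _record(self, features, label):
        # Store the crowd's answer as a training label.
        if label not in self._sums:
            self._sums[label] = [0.0] * len(features)
            self._counts[label] = 0
        self._sums[label] = [s + f for s, f in zip(self._sums[label], features)]
        self._counts[label] += 1

    def _predict(self, features):
        # Nearest-centroid classification: pick the label whose mean
        # feature vector is closest to this frame's features.
        def squared_distance(label):
            n = self._counts[label]
            return sum((f - s / n) ** 2 for f, s in zip(features, self._sums[label]))
        return min(self._counts, key=squared_distance)

    def answer(self, features):
        """Return (label, source) for one frame's feature vector."""
        if self.label_count < self.min_labels:
            label = self.ask_crowd(features)
            self._record(features, label)
            return label, "crowd"
        return self._predict(features), "model"


def fake_crowd(features):
    # Stand-in for a human worker answering a yes/no question.
    return "yes" if features[0] > 0.5 else "no"


sensor = HybridSensor(fake_crowd, min_labels=4)
frames = [[0.9], [0.1], [0.8], [0.2], [0.7]]
results = [sensor.answer(frame) for frame in frames]
# The first four answers come from the crowd; the fifth comes from the model.
```

A count threshold is the simplest possible trigger. A real system would more plausibly compare the model's answers against the crowd's before handing off, and could fall back to the crowd if the scene changes.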

Zensors can also utilize standalone Wi-Fi-enabled cameras; in that case, their web client can be used. For developers, responsive applications can be built using their API.

Discussing their work, Chris Harrison, one of the authors, said the team is addressing a long-standing problem: sensor output does not always match the types of questions humans actually wish to ask. "For example, a door opened/closed sensor may not answer the user's true question: 'are my children home from school?' A restaurateur may want to know how many patrons need their beverages refilled, and graduate students want to know, 'is there free food in the kitchenette?' Unfortunately, these sophisticated, multidimensional and often contextual questions are not easily answered by the simple sensors we deploy today."

The team's approach seeks to provide human-centered, actionable sensor output. Harrison also said that their approach needs minimal, nonpermanent sensor installation.

More information:

- www.chrisharrison.net/index.php/Research/Zensors
- Zensors: Adaptive, Rapidly Deployable, Human-Intelligent Sensor Feeds (PDF): chrisharrison.net/projects/zensors/zensors.pdf

© 2015 Tech Xplore