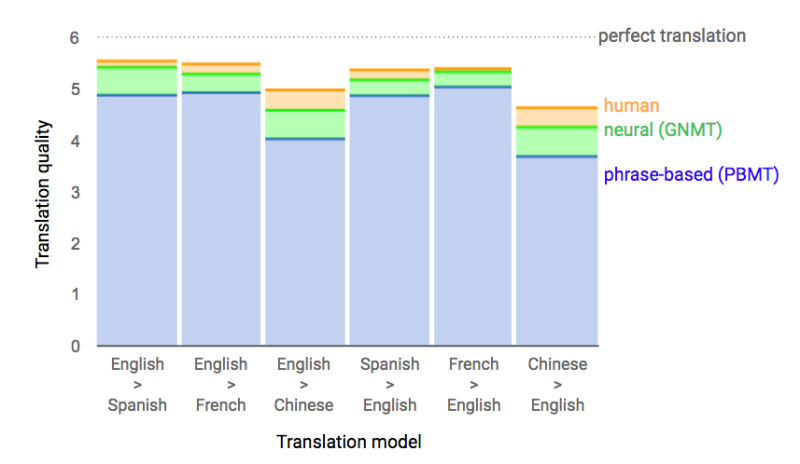

Data from side-by-side evaluations, where human raters compare the quality of translations for a given source sentence. Scores range from 0 to 6, with 0 meaning “completely nonsense translation”, and 6 meaning “perfect translation.”

(Tech Xplore)—Cutesy headlines are not always appreciated as some readers just want the facts without smirks and giggles. Nonetheless, the headline in this week's Engadget was both funny and quite descriptive: "Google's Chinese-to-English translations might now suck less."

Cherlynn Low, Reviews Editor, Engadget, said, "Mandarin Chinese is a notoriously difficult language to translate to English, and for those who rely on Google Translate to decipher important information, machine-based tools simply aren't good enough."

Google, however, is taking a step in a new direction to lay out a translation path for better results. Google has implemented a new learning system in its web and mobile translation apps, said Low.

She was referring to Google's announcement on Tuesday about a neural network for machine translation. The posting, headlined "A Neural Network for Machine Translation, at Production Scale," was written by Quoc Le and Mike Schuster, two research scientists on the Google Brain Team.

Ten years ago, Google announced the launch of Google Translate, along with the key algorithm behind the service, Phrase-Based Machine Translation. Still, the two said in 2016, "improving machine translation remains a challenging goal."

Now Google wants to turn the page. "Today we announce the Google Neural Machine Translation system (GNMT)." The system uses "state-of-the-art training techniques to achieve the largest improvements to date for machine translation quality."

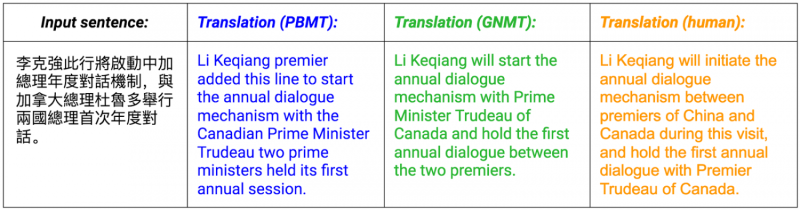

An example of a translation produced by our system for an input sentence sampled from a news site.

What are they doing that's so special?

A few years back, they explored Recurrent Neural Networks (RNNs) to learn the mapping between an input sequence (a sentence in one language) and an output sequence (that sentence in another language). Phrase-Based Machine Translation (PBMT) breaks an input sentence into words and phrases to be translated largely independently. Neural Machine Translation (NMT), they said, considers the entire input sentence as a unit for translation. "The advantage of this approach is that it requires fewer engineering design choices than previous Phrase-Based translation systems." They reported good results on "modest size" public benchmark data sets, but they kept working on improvements.
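For readers who want a feel for the phrase-based idea, here is a minimal toy sketch in Python. It is not Google's system: the phrase table, the greedy longest-match segmentation, and the function names are all assumptions made for illustration, and real PBMT systems also score competing phrase choices statistically and reorder the output. The sketch only shows the core limitation described above, that each phrase is translated without looking at the rest of the sentence.

```python
# Toy phrase table (hypothetical entries, for illustration only).
PHRASE_TABLE = {
    ("the", "cat"): "le chat",
    ("sat",): "s'est assis",
    ("on",): "sur",
    ("the", "mat"): "le tapis",
}
MAX_PHRASE_LEN = max(len(key) for key in PHRASE_TABLE)


def segment(words):
    """Greedily split a word list into the longest phrases found in the table."""
    phrases = []
    i = 0
    while i < len(words):
        # Try the longest candidate phrase first, then shorter ones.
        for n in range(min(MAX_PHRASE_LEN, len(words) - i), 0, -1):
            chunk = tuple(words[i:i + n])
            if chunk in PHRASE_TABLE:
                break
        else:
            # No table entry matched: treat the single word as its own phrase.
            chunk = (words[i],)
        phrases.append(chunk)
        i += len(chunk)
    return phrases


def translate_phrase_based(sentence):
    """Translate each phrase independently; unknown words pass through unchanged."""
    words = sentence.lower().split()
    return " ".join(PHRASE_TABLE.get(p, " ".join(p)) for p in segment(words))


print(translate_phrase_based("The cat sat on the mat"))
```

Because every lookup is local, nothing in this sketch can use the rest of the sentence to pick between alternative translations of a phrase. A neural system, by contrast, reads the whole input sequence before producing output.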

Still, improvements did not overshadow the fact that "NMT wasn't fast or accurate enough to be used in a production system, such as Google Translate."

Eventually, they said, they overcame the challenges. They were able to make NMT work on "very large data sets and built a system that is sufficiently fast and accurate enough to provide better translations for Google's users and services."

So can the team pack up the empty pizza boxes and move on to another project? Not quite. "Machine translation is by no means solved," they stated. "GNMT can still make significant errors that a human translator would never make, like dropping words and mistranslating proper names or rare terms, and translating sentences in isolation rather than considering the context of the paragraph or page."

At the same time, they said GNMT represents "a significant milestone. We would like to celebrate it with the many researchers and engineers—both within Google and the wider community—who have contributed to this direction of research in the past few years."

An academic paper about this undertaking is on arXiv. The paper is titled "Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation."

© 2016 Tech Xplore