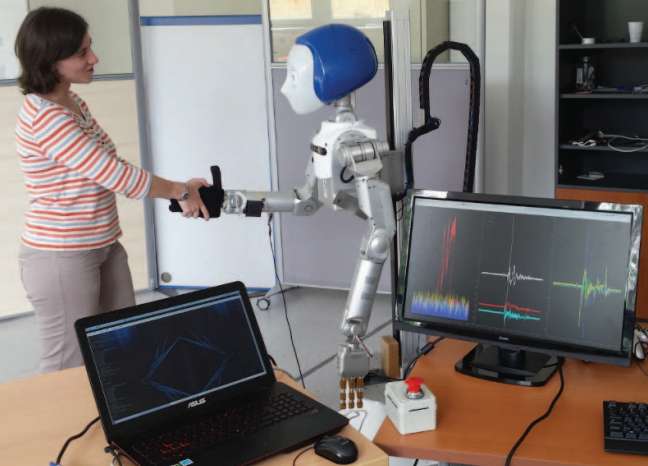

Human-Robot handshake using Meka humanoid robot. Credit: 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids) (2017). DOI: 10.1109/HUMANOIDS.2016.7803388

(Tech Xplore) Applause goes out to all those scientists, developers and engineers who put together robots that can grip tools, lift packages, sling pingpong balls, deliver pizzas and cut into fabric. If we are winning the robot-task race, what about the be-my-pal race? The "soft" side of social robots that interact with humans will be interesting to watch.

Home robots that play with young children, help people with mental difficulties and support the elderly need attributes such as behaving politely, showing empathy and maybe having a laugh or two.

This week, several reports looked at the team at the University Paris-Saclay who are teaching robots how to show personality and emotion through touch and other senses.

The Manufacturer was one of several sites reporting: "The ENSTA research team claim to have developed robots to elicit different emotions and dominance depending on the situation and context. This includes, for example, adapting the arm stiffness and amplitude in a hand shaking interaction."

Speaking of the handshake, the team wrote a paper earlier that discussed how robots can tell gender by the handshake. The paper's title said it clearly: "Let's handshake and I'll know who you are: Gender and personality discrimination in human-human and human-robot handshaking interaction."

The paper was added to IEEE's Xplore Digital Library. Pierre-Henri Orefice, Mehdi Ammi, Moustapha Hafez and Adriana Tapus are the authors.

TechRadar reported that the team claimed the system was able to infer the gender and the degree of extroversion of the person whose hand it was shaking in 75 percent of cases.

Duncan Geere, TechRadar science writer, wrote about what this team is trying to do.

"Adriana Tapus and her colleagues are aiming to develop a humanoid robot that's sensitive to tactile stimulation in the same way people are."

The authors recognized that touch as a communication modality has been very little exploited. They wrote in their abstract, "In order to appear as natural as possible during social interaction, robots need either the ability to express or to measure emotions...Our paper aims to create a model of the tactile features activated during a handshake act, that can discriminate intrinsic characteristics of a person such as gender or extroversion."

How they did it:

"First, we construct a model of handshaking based on the human-human styles of handshaking. This model is thereafter compared with a human-robot handshaking interaction. The Meka robot is used in our experiments. A method is proposed to manage the modeling using feature selection with ANOVA and linear discriminant analysis (LDA)."
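The two steps named in that passage, ANOVA-based feature selection followed by linear discriminant analysis, can be illustrated with a minimal sketch. This is not the authors' code. The feature columns, the toy numbers, the class labels "A" and "B", the choice to keep exactly two features and the equal-prior midpoint threshold are all assumptions made for illustration.

```python
from statistics import mean

def anova_f(values, labels):
    """One-way ANOVA F-statistic for a single feature across label groups."""
    groups = {}
    for v, lab in zip(values, labels):
        groups.setdefault(lab, []).append(v)
    grand = mean(values)
    n, k = len(values), len(groups)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    ss_within = sum((v - mean(g)) ** 2 for g in groups.values() for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

def select_top2(X, y):
    """Return the indices of the two features with the highest F-statistic."""
    scores = [anova_f([row[j] for row in X], y) for j in range(len(X[0]))]
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:2]

def fit_lda_2d(X, y, cols):
    """Two-class Fisher LDA on two columns (assumes equal class priors)."""
    a, b = cols
    c0, c1 = sorted(set(y))
    pts = {c: [(r[a], r[b]) for r, lab in zip(X, y) if lab == c] for c in (c0, c1)}
    mu = {c: (mean([p[0] for p in pts[c]]), mean([p[1] for p in pts[c]]))
          for c in (c0, c1)}
    # Pooled within-class scatter matrix [[sxx, sxy], [sxy, syy]].
    sxx = sxy = syy = 0.0
    for c in (c0, c1):
        for px, py in pts[c]:
            dx, dy = px - mu[c][0], py - mu[c][1]
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    det = sxx * syy - sxy * sxy
    d0, d1 = mu[c1][0] - mu[c0][0], mu[c1][1] - mu[c0][1]
    # Discriminant direction: inverse scatter times the difference of class means.
    w = ((syy * d0 - sxy * d1) / det, (sxx * d1 - sxy * d0) / det)
    # Decision threshold: projection of the midpoint between the class means.
    threshold = (w[0] * (mu[c0][0] + mu[c1][0]) + w[1] * (mu[c0][1] + mu[c1][1])) / 2
    return w, threshold, (c0, c1)

def predict(row, cols, model):
    """Project one sample onto the discriminant and compare with the threshold."""
    w, threshold, (c0, c1) = model
    score = w[0] * row[cols[0]] + w[1] * row[cols[1]]
    return c1 if score > threshold else c0

# Toy data, invented for illustration only. Columns are hypothetical:
# [grip pressure, shake frequency, unrelated noise].
X = [
    [1.0, 2.0, 5.0], [1.2, 2.1, 3.0], [0.9, 1.8, 4.0], [1.1, 2.2, 6.0],
    [2.0, 3.0, 5.5], [2.2, 3.1, 3.5], [1.9, 2.9, 4.5], [2.1, 3.2, 5.0],
]
y = ["A", "A", "A", "A", "B", "B", "B", "B"]

cols = select_top2(X, y)
model = fit_lda_2d(X, y, cols)
predictions = [predict(row, cols, model) for row in X]
```

On this toy data the ANOVA step discards the noise column, and the discriminant then separates the two groups using the remaining two features. A real study would evaluate on held-out handshakes rather than on the training samples.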

Results? The authors said their first preliminary results showed it was possible for a robot to recognize gender and the extroversion personality trait based on the firmness and movement style of a handshake. Consistency was found when comparing human-human handshaking with human-robot handshaking. The authors said these "encouraging" results would allow them to develop personalized interactions.

Professor Tapus' research group at ENSTA has also studied emotion recognition as part of a project concerning people with Autism Spectrum Disorder (ASD).

Overall, the team hopes their technology can benefit the elderly and those with autism. Prof. Tapus was quoted in the London Post on Wednesday: "Giving robots a personality is the only way our relationship with artificial intelligence will survive. If we can simulate a human like emotional response from a robot we can ensure a two-way relationship, benefiting the most vulnerable and isolated members of our society."

More information: Pierre-Henri Orefice et al. Let's handshake and I'll know who you are: Gender and personality discrimination in human-human and human-robot handshaking interaction, 2016 IEEE-RAS 16th International Conference on Humanoid Robots (Humanoids) (2017). DOI: 10.1109/HUMANOIDS.2016.7803388

Abstract

In order to appear as natural as possible during social interaction, robots need either the ability to express or to measure emotions. Touch can be a very powerful communication modality but is very little exploited. Our paper aims to create a model of the tactile features activated during a handshake act, that can discriminate intrinsic characteristics of a person such as gender or extroversion. First, we construct a model of handshaking based on the human-human styles of handshaking. This model is thereafter compared with a human-robot handshaking interaction. The Meka robot is used in our experiments. A method is proposed to manage the modeling using feature selection with ANOVA and linear discriminant analysis (LDA). The first preliminary results show that it is possible to recognize gender and extroversion personality trait based on the firmness and movement of the style of handshaking. For instance, smaller pressure and frequency were found to describe female handshakes and higher speed amplitude describe introverted handshakes. Consistency was also found when comparing human-human handshaking with human-robot ones. These are encouraging results that will allow us to develop personalized interactions.

© 2017 Tech Xplore