Precision (left) and power (right) grasps generated by the grasp planner. Credit: Lu & Hermans.

Researchers at the University of Utah have recently developed a probabilistic grasp planner that explicitly models grasp types, allowing it to plan high-quality precision and power grasps for a given object in real time. Their supervised learning approach is outlined in a paper pre-published on arXiv.

For both humans and robots, different manipulation tasks require different types of grasps. For instance, holding a heavy tool, such as a hammer, requires a multi-fingered power grasp that offers stability, while holding a pen requires a multi-fingered precision grasp that allows the object to be manipulated dexterously.

When testing their previous approach to grasp planning, the team of researchers at the University of Utah noticed that it almost always generated power grasps, in which the robot's hand wraps around an object with large contact regions between the hand and the object. These grasps are useful for a variety of robotic tasks, such as picking up objects and moving them elsewhere in a room, yet they are unhelpful for in-hand manipulation tasks.

"Think about moving a paintbrush or scalpel you're holding with your fingertips," Tucker Hermans, one of the researchers who carried out the study, told TechXplore. "These kinds of tasks require precision grasps, where the robot holds the object with its fingertips. Looking at the literature, we saw that existing methods tend to generate only one kind of grasp, either precision or power. So we set out to create a grasp-synthesis approach that can handle both. This way, our robot can use power grasps to stably pick and place objects it wants to move, but precision grasps when it needs to perform in-hand manipulation tasks."

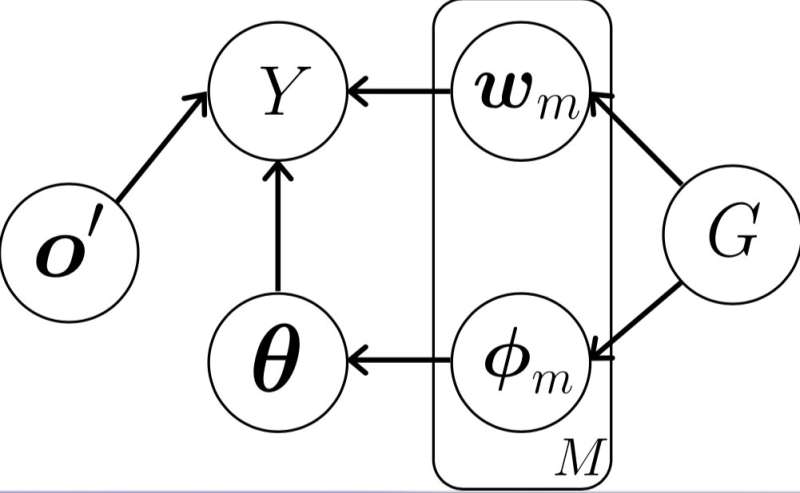

The proposed grasp-type probabilistic graphical model. Credit: Lu & Hermans.

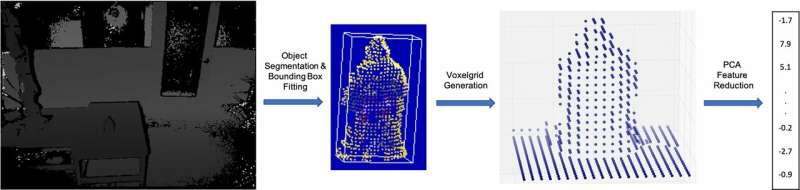

In the approach to grasp planning devised by Hermans and his colleague Qingkai Lu, a robot learns to predict grasp success from past experiences. The robot attempts different types of grasps on different objects, recording which of these were successful and which failed. This data is then used to train a classifier to predict whether a given grasp will succeed or not.
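The idea of learning to predict grasp success from recorded attempts can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the two toy features (`palm_offset`, `finger_spread`), the synthetic success rule, and the plain logistic-regression classifier are stand-ins, not the model or data used by Lu and Hermans, whose classifier works on depth images and full grasp configurations.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_toy_records(n=200, seed=0):
    """Simulate n grasp attempts as (features, succeeded) pairs.

    Hypothetical features: [palm_offset, finger_spread], both in [0, 1].
    Hypothetical rule: an attempt succeeds when their sum is below 1.
    """
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        x = [rng.random(), rng.random()]
        records.append((x, 1 if x[0] + x[1] < 1.0 else 0))
    return records


def train_success_classifier(records, epochs=100, lr=0.5):
    """Fit logistic regression with stochastic gradient descent."""
    w = [0.0] * len(records[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in records:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict_success(model, x):
    """Return the predicted probability (0 to 1) that grasp x succeeds."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


model = train_success_classifier(make_toy_records())
print(predict_success(model, [0.1, 0.1]))  # attempt far inside the success region
print(predict_success(model, [0.9, 0.9]))  # attempt far inside the failure region
```

The printed values are the classifier's estimated success probabilities for two unseen toy grasps; in a real system the feature vector would be replaced by learned visual features and the hand configuration.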

"The classifier takes as input a depth image of the object and the selected grasp configuration (i.e. where to put the hand and how to shape the fingers)," Hermans explained. "In addition to predicting success, the classifier reports how confident it is that the grasp will succeed on a scale of zero to one. When presented with an object to grasp, the robot plans a grasp by searching over different possible grasps and selecting the grasp which the classifier predicts the highest confidence in succeeding."
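The planning step Hermans describes, searching over candidate grasps and keeping the one with the highest predicted confidence, might be sketched as follows. This is a simplified illustration under stated assumptions: `GraspCandidate`, `toy_confidence`, and `sample_candidates` are hypothetical names, the confidence function is a hand-written stand-in for a trained classifier, and random sampling is used here in place of whatever search procedure the authors actually employ.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspCandidate:
    """Hypothetical grasp configuration: where the hand goes, how fingers are shaped."""
    palm_position: tuple  # (x, y, z) in meters, relative to the object
    finger_spread: float  # 0 = closed, 1 = fully open


def toy_confidence(grasp):
    """Stand-in for a trained classifier: returns a confidence in [0, 1].

    Assumption: confidence peaks at an arbitrary 'ideal' configuration and
    falls off smoothly with squared distance from it.
    """
    ideal_position = (0.0, 0.0, 0.1)
    ideal_spread = 0.5
    d2 = sum((a - b) ** 2 for a, b in zip(grasp.palm_position, ideal_position))
    d2 += (grasp.finger_spread - ideal_spread) ** 2
    return math.exp(-d2 / 0.02)


def sample_candidates(n, seed=0):
    """Draw n random candidate grasps around the object."""
    rng = random.Random(seed)
    return [
        GraspCandidate(
            palm_position=(
                rng.uniform(-0.1, 0.1),
                rng.uniform(-0.1, 0.1),
                rng.uniform(0.05, 0.15),
            ),
            finger_spread=rng.uniform(0.0, 1.0),
        )
        for _ in range(n)
    ]


def plan_grasp(candidates, confidence_fn):
    """Select the candidate with the highest predicted success confidence."""
    return max(candidates, key=confidence_fn)


candidates = sample_candidates(50, seed=1)
best = plan_grasp(candidates, toy_confidence)
print(best, toy_confidence(best))
```

Passing the confidence function in as an argument keeps the search independent of the classifier, so a learned model could be swapped in for `toy_confidence` without changing `plan_grasp`.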

The supervised learning approach developed by Hermans and Lu can plan different types of grasps for previously unseen objects, even when only partial visual information is available. Theirs could be the first grasp planning method to explicitly plan both power and precision grasps.


Illustration of the visual-feature extraction process. Credit: Lu & Hermans.


Example RGB image generated by the Kinect2 camera showing the robot and the Lego-like object on the table. Credit: Lu & Hermans.

The researchers evaluated their model against a baseline model that does not encode grasp type. Their findings suggest that modeling grasp type improves the success rate of generated grasps, with their model outperforming the baseline.

"We believe that our results are important in two meaningful ways," Hermans said. "First, our proposed approach enables a robot to explicitly select the kind of grasp it desires, solving the issue we set out to address. Second, and potentially more important, adding this grasp-type knowledge into the system actually improves the ability for the robot to successfully grasp objects. Thus, even if you only want one type of grasp, say power grasps, it still helps to know that precision grasps exist when learning to grasp."

The approach devised by Hermans and Lu could aid the development of robots that can generate a diverse set of grasps. This would ultimately allow these robots to complete a broader variety of tasks that entail different types of object manipulation.


Examples of successful precision and power grasps generated by the new grasp-type modeling approach to grasp planning. The top two rows are precision grasps. The bottom two rows are power grasps. Credit: Lu & Hermans.


"We are now looking at two direct extensions of this work," Hermans said. "First, we wish to examine the effects of modeling more types of grasps, for example, distinguishing between sub-types of precision grasps characterized by different segments of the finger making contact with the object. In order to achieve this, we are planning to augment the robot hand with skin in order to enable automatic detection of where contacts are made. Second, we wish to incorporate more information to aid in automatically selecting the appropriate type of grasp given a requested task. For example, how can the robot automatically decide that it should use a precision grasp to create a painting, without the operator telling it?"

More information: Modeling grasp type improves learning-based grasp planning. arXiv:1901.02992 [cs.RO]. arxiv.org/abs/1901.02992

© 2019 Science X Network