January 7, 2019

An end-to-end imitation learning system for speed control of autonomous vehicles

Researchers at Valeo, a technology company specializing in automotive innovation, have recently developed an end-to-end imitation learning system for car speed control. Their approach, outlined in a paper pre-published on arXiv, uses a long short-term memory (LSTM) network, a type of recurrent neural network (RNN) that can learn long-term dependencies.
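To illustrate what "long short-term memory" refers to, here is a minimal sketch of a single LSTM cell step in plain Python, using scalar inputs and state. The weights are hypothetical values chosen only for illustration and do not come from the Valeo paper. What matters is the gating: a forget gate close to 1 lets the cell state carry information from one frame across many later frames, which is what allows an LSTM to learn long-term dependencies.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One step of a scalar LSTM cell.

    w maps each gate name to (input weight, recurrent weight, bias).
    The weights used below are hypothetical, for illustration only.
    """
    def gate(name):
        wx, wh, b = w[name]
        return wx * x + wh * h_prev + b

    f = sigmoid(gate("forget"))       # fraction of the old cell state to keep
    i = sigmoid(gate("input"))        # fraction of the new candidate to write
    g = math.tanh(gate("candidate"))  # candidate value to write into memory
    o = sigmoid(gate("output"))       # fraction of the cell state to expose
    c = f * c_prev + i * g            # updated long-term memory (cell state)
    h = o * math.tanh(c)              # new hidden state, i.e. the cell's output
    return h, c


# Hypothetical weights: a strongly open forget gate makes the cell "remember".
WEIGHTS = {
    "forget": (0.0, 0.0, 4.0),
    "input": (2.0, 0.0, -1.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 2.0),
}

# One "event" (x = 1.0) followed by several quiet frames (x = 0.0).
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, WEIGHTS)
    print(f"x={x:.1f}  c={c:.3f}  h={h:.3f}")
```

With these weights the cell state jumps to about 0.557 after the event and then decays by less than 2% per quiet frame, so the event is still "remembered" several steps later. In a real network the weights are learned and the state is a vector, but the mechanism is the same.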

"Valeo is the world leader in sensors, the ears and eyes of autonomous cars, and has already achieved several world firsts, such as the recent experimentation with our Valeo Drive4U vehicle, the first autonomous car to be demonstrated in the streets of Paris," Emilie Wirbel, one of the researchers who carried out the study, told TechXplore. "My team and I work in one of the company's 56 research and development centers, investigating how deep learning can be used to achieve better decision and control of autonomous cars. The objective of this research was to prove that it is possible to handle complex situations that can be encountered in urban environments by using only cameras and learning from what a human driver can do."

The new system developed by Wirbel and her colleagues employs a deep artificial neural network (ANN). The network is fed demonstrations of a human driving a car, recorded by a forward-facing camera so that the footage closely resembles what the driver saw while driving.

The neural network is then trained to imitate the driver's actions, focusing in particular on reproducing the car's speed. For instance, when an input image contains a 50-kph speed limit sign, the network learns to keep the car from going faster than 50 kph.
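Learning to reproduce a human driver's speed from what the camera sees is a form of supervised imitation learning, often called behavioral cloning: the model is fitted to pairs of (observation, human action). The sketch below is a deliberately tiny, hypothetical stand-in for the real system. Instead of camera images and an LSTM network, each frame is reduced to two made-up features (the posted speed limit and whether a vehicle is ahead), and a linear model is fitted to the demonstrated speeds by gradient descent on mean squared error. The features, data, learning rate, and epoch count are all assumptions for illustration and are not taken from the Valeo paper.

```python
# Toy behavioral-cloning sketch (hypothetical features and data, not from the paper).
# Each demonstration frame is summarized by two made-up features:
#   the speed limit shown on a sign (kph) and whether a vehicle is ahead (0 or 1).
# The target is the speed the human demonstrator actually drove at (kph).

DEMONSTRATIONS = [
    # (speed_limit_kph, vehicle_ahead, human_speed_kph)
    (30, 0, 30.0), (30, 1, 10.0),
    (50, 0, 50.0), (50, 1, 30.0),
    (70, 0, 70.0), (70, 1, 50.0),
]

SCALE = 100.0  # rescale kph so features and targets are roughly in [0, 1]


def features(limit_kph, vehicle_ahead):
    # The last entry is a constant bias term.
    return [limit_kph / SCALE, float(vehicle_ahead), 1.0]


def predict(weights, limit_kph, vehicle_ahead):
    x = features(limit_kph, vehicle_ahead)
    return SCALE * sum(w * xi for w, xi in zip(weights, x))


def train(demos, lr=0.5, epochs=5000):
    """Fit a linear policy by full-batch gradient descent on mean squared error."""
    weights = [0.0, 0.0, 0.0]
    n = len(demos)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for limit, ahead, speed in demos:
            x = features(limit, ahead)
            error = sum(w * xi for w, xi in zip(weights, x)) - speed / SCALE
            for j, xj in enumerate(x):
                grad[j] += 2.0 * error * xj / n
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights


weights = train(DEMONSTRATIONS)
# Query a situation absent from the demonstrations: 40-kph sign, vehicle ahead.
print(round(predict(weights, 40, 1), 1))  # → 20.0
```

Because the made-up demonstrations follow a simple rule (drive at the limit, 20 kph slower with a vehicle ahead), the fitted model generalizes it to an unseen 40-kph case. The real system replaces the hand-picked features with representations learned directly from camera frames, which is what makes it "end-to-end".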

"When there is another car in front of us, a human driver will slow down accordingly and the network should learn to do the same," Wirbel explained. "Our approach tries to replicate how a human learns and drives. The network only receives information from the frontal camera and does not need explicit perception, for example, related to the traffic lights or the lanes, just as a human driver does not have an explicit model of exactly where the lines are and what their shape is."

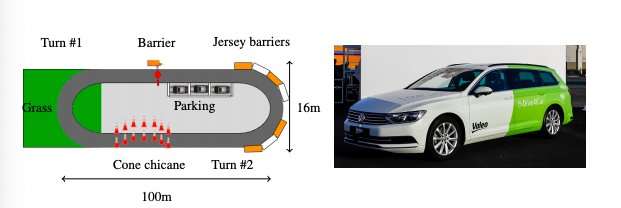

After training their neural network, Wirbel and her colleagues tested it in a simulation environment and then integrated it into a real car, evaluating its performance on a challenging test track. They found that their system reacted effectively to complex situations, controlling the speed of the car where necessary (for example, slowing down for traffic cones and sharp turns, and stopping at barriers and when approaching warning signs).

"Our study proves that complex situations, such as working zones, unexpected obstacles, etc., can be dealt with merely by observing what a human would do and then reproducing it in new, similar situations," Wirbel said. "This means that as long as we have enough demonstration data, we can handle use cases that human drivers would reasonably deal with. This could be used in complex interaction situations in combination with the more classical approaches, to make the vehicle able to react consistently and smartly."

The system devised by Wirbel and her colleagues has achieved promising results and could eventually be applied to autonomous vehicles, enabling more effective speed control and more natural, human-like driving. The researchers are planning to extend their proof of concept to more complex situations, teaching their system to handle a broader variety of interactions with other vehicles on the road and adding more complex maneuvers, such as changing lanes, turning at intersections, or navigating roundabouts.

"We would also like to work on the system's explainability and compatibility with existing autonomous vehicles, providing an explanation to the end user of how the network perceives its environment and why it takes its decisions," Wirbel added. "The research roadmap is very wide, so we are attending and contributing to major scientific conferences to keep up with the latest state of the art developments in this area. Our role as an R&D team is also to provide the rest of Valeo with the right keys and expertise to bring our proofs of concept closer to production."

More information: Imitation learning for end to end vehicle longitudinal control with forward camera. arXiv:1812.05841 [cs.CV]. arxiv.org/abs/1812.05841

End to end vehicle lateral control using a single fisheye camera. arXiv:1808.06940 [cs.RO]. arxiv.org/abs/1808.06940

© 2019 Science X Network