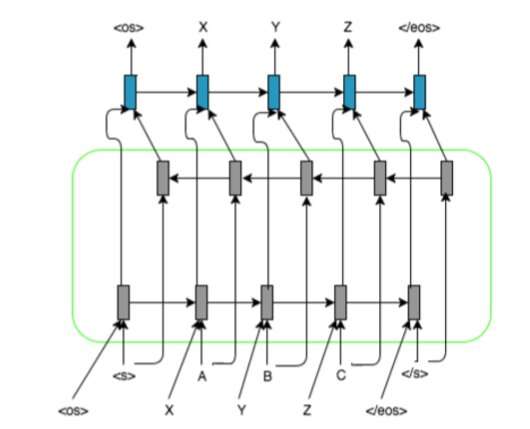

The researchers’ RNN-based model architecture, with a bidirectional LSTM encoder-decoder and an alignment representation of the input sequences. Special marker symbols are used to pad the grapheme and phoneme sequences to a fixed length. Credit: Ngoc Tan Le et al.
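The caption mentions padding grapheme and phoneme sequences to a fixed length with marker symbols. A minimal Python sketch of that preprocessing step follows. The marker names `<s>`, `</s>` and `<pad>` and the helper `pad_sequence` are hypothetical, since the article does not say which symbols the authors used.

```python
# Hypothetical marker tokens; the article does not name the exact symbols used.
BOS, EOS, PAD = "<s>", "</s>", "<pad>"


def pad_sequence(symbols, max_len):
    """Wrap a grapheme or phoneme sequence in start/end markers and pad it to max_len."""
    seq = [BOS] + list(symbols) + [EOS]
    if len(seq) > max_len:
        raise ValueError(f"sequence of length {len(seq)} exceeds max_len={max_len}")
    # Append padding markers until the sequence reaches the fixed length.
    return seq + [PAD] * (max_len - len(seq))


# The graphemes of the French word "paris", padded to length 10.
print(pad_sequence("paris", 10))
# ['<s>', 'p', 'a', 'r', 'i', 's', '</s>', '<pad>', '<pad>', '<pad>']
```

Fixed-length sequences let a batch of words of different lengths be stacked into one tensor; the padding positions are normally ignored by the model.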

A team of researchers at Université du Québec à Montréal and Vietnam National University, Ho Chi Minh City (VNU-HCM) has recently developed an approach to machine transliteration based on recurrent neural networks (RNNs). Transliteration is the phonetic translation of words in a source language (e.g., French) into equivalent words in a target language (e.g., Vietnamese).

Through transliteration, a word is converted into a phonetically equivalent word in another writing system. This conversion typically relies on a large set of rules defined by linguists, which specify how phonemes are aligned based on the origin of the word and the phonological system of the target language.

In recent years, researchers have developed several deep learning approaches to machine translation that have proven to be a valuable alternative to existing statistical approaches. These promising results motivated the team at Université du Québec à Montréal and VNU-HCM to develop a deep learning approach to machine transliteration.

Their approach uses RNNs, which have proven particularly useful for similar sequence-to-sequence problems such as machine translation. The researchers observed that most state-of-the-art grapheme-to-phoneme methods rely primarily on grapheme-phoneme mappings, whereas RNNs do not require any alignment information.

"Grapheme-to-phoneme models are key components in automatic speech recognition and text-to-speech systems," the researchers explained in their paper, which was published in the ACM Digital Library. "With low-resource language pairs that do not have available and well-developed pronunciation lexicons, grapheme-to-phoneme models are particularly useful. These models are based on initial alignments between grapheme source and phoneme target sequences."
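An alignment pairs each grapheme (letter) of the source word with the phoneme it produces, or with nothing. As an illustration only, the sketch below computes a simple monotonic one-to-one alignment with an edit-distance dynamic program. It is not the aligner used in the paper: the `align` function, its unit costs, and the toy phoneme symbols written as plain letters are assumptions, and practical grapheme-to-phoneme aligners usually learn many-to-many mappings from data.

```python
def align(graphemes, phonemes):
    """Monotonic 1-to-1 grapheme-phoneme alignment via edit distance (None = gap)."""
    def sub(g, p):
        # Hypothetical cost: free if the symbols match, 1 otherwise.
        return 0 if g == p else 1

    n, m = len(graphemes), len(phonemes)
    # cost[i][j]: cheapest alignment of graphemes[:i] with phonemes[:j].
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i
    for j in range(1, m + 1):
        cost[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost[i][j] = min(
                cost[i - 1][j - 1] + sub(graphemes[i - 1], phonemes[j - 1]),
                cost[i - 1][j] + 1,  # grapheme with no phoneme (e.g. a silent letter)
                cost[i][j - 1] + 1,  # phoneme with no grapheme
            )
    # Trace back from the bottom-right cell to recover the aligned pairs.
    pairs, i, j = [], n, m
    while i > 0 or j > 0:
        diag_ok = i > 0 and j > 0 and (
            cost[i][j] == cost[i - 1][j - 1] + sub(graphemes[i - 1], phonemes[j - 1])
        )
        if diag_ok:
            pairs.append((graphemes[i - 1], phonemes[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            pairs.append((graphemes[i - 1], None))
            i -= 1
        else:
            pairs.append((None, phonemes[j - 1]))
            j -= 1
    return pairs[::-1]


print(align("paris", ["p", "a", "r", "i"]))
# [('p', 'p'), ('a', 'a'), ('r', 'r'), ('i', 'i'), ('s', None)]
```

The silent final "s" of the French word is paired with `None`, an empty (epsilon) phoneme, which is the kind of information an alignment representation can feed to the model.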

In their study, the researchers introduced a new method for low-resource machine transliteration that uses RNN-based models and alignment information for input sequences. Given a source-language word that is not present in the bilingual pronunciation dictionary, their system can automatically predict its phonemic representation in the target language.

"Inspired by sequence-to-sequence recurrent neural network-based translation methods, the current research presents an approach that applies an alignment representation for input sequences and pre-trained source and target embeddings to overcome the transliteration problem for a low-resource language pair," the researchers explained in their paper.

The new approach combines several neural network-based techniques, including an encoder-decoder architecture, attention mechanisms, an alignment representation for input sequences, and pre-trained source and target embeddings. The researchers evaluated their method on a transliteration task for French-Vietnamese, a low-resource language pair, and obtained promising results.
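An attention mechanism lets the decoder focus on different input graphemes at each output step. The sketch below shows one step of dot-product (Luong-style) attention in plain Python. The article does not specify which attention variant the authors used, so this choice, the `attention` function, and the toy vectors (standing in for bidirectional LSTM encoder outputs and a decoder state) are assumptions.

```python
import math


def attention(decoder_state, encoder_states):
    """Dot-product attention: weight each encoder state by its similarity to the decoder state."""
    # Score each encoder position (one per input grapheme) against the decoder state.
    scores = [sum(h_k * s_k for h_k, s_k in zip(h, decoder_state)) for h in encoder_states]
    # Softmax over the scores, shifted by the maximum for numerical stability.
    top = max(scores)
    exps = [math.exp(x - top) for x in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states)) for d in range(dim)]
    return weights, context


# Toy example: three 2-dimensional encoder states and one decoder state.
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
s = [2.0, 0.0]
weights, context = attention(s, H)
print(weights, context)
```

The first and third input positions score highest against this decoder state, so they receive most of the weight; in a full model the context vector is combined with the decoder state to predict the next output phoneme.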

"Evaluation and experiments involving French and Vietnamese showed that with only a small bilingual pronunciation dictionary available for training the transliteration models, promising results were obtained," the researchers wrote.

According to the researchers, their study was among the first to address Vietnamese in a transliteration task using RNNs. Their method outperformed other state-of-the-art statistical and multi-joint sequence-based approaches.

The researchers' system can automatically learn linguistic regularities from small bilingual pronunciation dictionaries. Although the study applied it specifically to French-Vietnamese transliteration, it could also be extended to other low-resource language pairs for which a bilingual pronunciation dictionary is available.

"In future work, we intend to test our proposed approach with a larger bilingual pronunciation dictionary as well as to study other approaches, such as semi-supervised or non-supervised," the researchers wrote in their paper. "We also intend to investigate transfer learning using other NLP tasks or languages in low-resource settings."

More information: Low-resource machine transliteration using recurrent neural networks. DOI: 10.1145/3265752. dl.acm.org/citation.cfm?id=3265752

© 2019 Science X Network