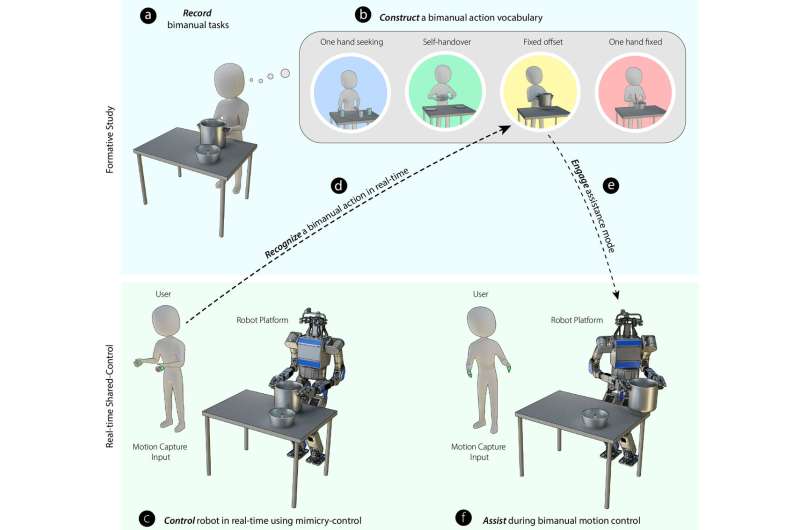

Diagram illustrating the experimental design for bimanual robot manipulation. A “bimanual action vocabulary” for robots was developed from an extensive analysis of human bimanual hand and arm motions (top panel labelled “formative study”). A robot programmed with a neural network and a bimanual action vocabulary was linked to a volunteer who attempted to control the robot to complete different bimanual tasks. The robot captured the human’s poses and inferred the correct motion by pulling from its bimanual vocabulary. Credit: Rakita et al., Sci. Robot. 4, eaaw0955 (2019)

A team of researchers from the University of Wisconsin and the Naval Research Laboratory has designed and built a robotic system that enables bimanual (two-handed) robot manipulation through shared control with a human operator. In their paper published in the journal Science Robotics, the group explains the ideas behind their work and how well those ideas worked in practice.

As the researchers note, using two hands together to complete a task is very complicated. It involves far more than two hands working independently on the same task at the same time. When a person opens a jar, for example, the brain acts as a kind of mediator, coordinating the action as it sends signals to and receives signals from both hands. Tasks that seem this simple to people are complex enough that robots struggle to perform them, which is why most robots work with just one hand. In this new effort, the researchers have taken a small step toward teaching robots how to use two hands to complete a task.

The researchers note that many semi-robotic applications let robots serve in an augmentation role rather than act on their own. A surgeon who guides a robotic hand with their own hand is one example. They also note that simple mimicry, in which the robot directly copies the operator's movements, would not work for two-handed augmented robotic systems because such movements are too complex. Their solution was to combine mimicry with a deep learning network. The result was a technique that enabled a robot to carry out bimanual tasks by sharing control with a human.

A narrated video explaining the major concepts, methods, results and implications of this study. Credit: Rakita et al., Sci. Robot. 4, eaaw0955 (2019)

The researchers began by equipping a robot with two arms and hands. They added hardware that allowed the robot arms to communicate with a deep learning network and with sensors placed on a human operator. The robot was told which task was going to be attempted, and the human then carried it out. As the human did so, the robot tried to mimic the action at the same time. Repeating the procedure many times allowed the robot to learn the many small subtasks involved in carrying out the main task, which gave it some intuition about the task.

Over time, as the robot worked in conjunction with a human, it added its own commands to achieve a better result. The robot did not progress to the point of performing the task on its own; instead, it learned to serve as a more capable assistant. The researchers suggest that such a robot could serve as an assistant for people with partial disabilities. It might also be useful to the Navy for remote underwater operations.

More information: Daniel Rakita et al. Shared control–based bimanual robot manipulation, Science Robotics (2019). DOI: 10.1126/scirobotics.aaw0955

Journal information: Science Robotics

© 2019 Science X Network