The SensFoot device. Credit: Moschetti et al.

Researchers at the BioRobotics Institute of Scuola Superiore Sant'Anna, Co-Robotics srl and Sheffield Hallam University have recently proposed a new approach to improve interactions between humans and robots walking together. Their paper, published in MDPI's Robotics journal, proposes using wearable sensors to improve collaboration between a human and a robot moving around a shared environment.

Recent technological advances have made it possible to deploy robots as assistants in a wide range of everyday situations. To perform well in most of these settings, however, robots need to interact with human users seamlessly and effectively. Researchers have therefore been developing approaches and techniques that enhance robots' ability to understand social signals and respond accordingly.

In their study, the team focused on tasks in which humans and robots walk together or stand and move around a shared environment. Their goal was to develop an approach that lets humans move naturally in a given space alongside a robot, without any physical link between the two.

"This paper proposes the use of wearable inertial measurement units (IMUs) to improve the interaction between human and robot while walking together without physical links and with no restriction on the relative position between the human and the robot," the researchers wrote in their paper.

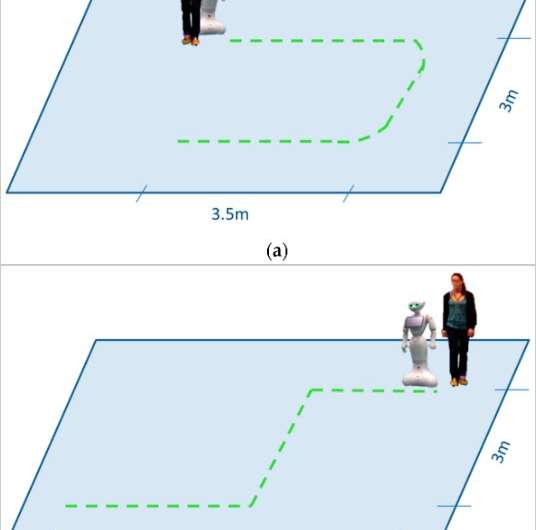

Scheme of the following task (a) and follow-me task (b). Credit: Moschetti et al.

The researchers' approach relies on IMUs, electronic devices that typically combine accelerometers, gyroscopes and/or magnetometers to measure acceleration and angular rate, from which orientation, velocity and other movement data can be derived. The sensors are worn by the user (e.g. on their shoes) without causing discomfort, so people can move freely in the surrounding space.
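The article does not describe how SensFoot fuses its raw sensor readings. As a rough illustration of how orientation can be derived from an IMU, here is a minimal Python sketch of a complementary filter, a common generic technique that is not necessarily the one the researchers used. The sample format `(gyro_y, acc_x, acc_z)`, the axis conventions and the blending weight `alpha` are all assumptions.

```python
import math


def complementary_pitch(samples, dt, alpha=0.98):
    """Estimate pitch (radians) from IMU samples with a complementary filter.

    samples: iterable of (gyro_y, acc_x, acc_z) tuples, where gyro_y is the
    angular rate about the sensor's y axis (rad/s) and acc_x, acc_z are
    accelerations (m/s^2). These axis conventions are assumptions.
    dt: sampling interval in seconds.
    alpha: weight given to the integrated gyroscope estimate (hypothetical value).
    """
    pitch = None
    estimates = []
    for gyro_y, acc_x, acc_z in samples:
        # Tilt implied by the direction of gravity: absolute but noisy.
        acc_pitch = math.atan2(-acc_x, acc_z)
        if pitch is None:
            # Initialise from the accelerometer on the first sample.
            pitch = acc_pitch
        else:
            # Integrate the gyro rate (smooth, but drifts) and pull the result
            # slightly towards the accelerometer tilt to cancel the drift.
            pitch = alpha * (pitch + gyro_y * dt) + (1 - alpha) * acc_pitch
        estimates.append(pitch)
    return estimates


# Usage: a sensor held still at a 0.2 rad tilt, sampled at 100 Hz.
g = 9.81
still = [(0.0, -g * math.sin(0.2), g * math.cos(0.2))] * 50
print(round(complementary_pitch(still, dt=0.01)[-1], 3))
```

The gyroscope term is smooth but drifts when integrated over time, while the accelerometer term is drift-free but noisy during motion. Blending the two keeps the estimate bounded: with a constant gyroscope bias b, the pitch settles near alpha·b·dt/(1 - alpha) instead of growing without limit.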

The IMUs collect real-time information about the user's movements and gait parameters, such as walking speed, stride length and orientation angle. This data is then processed and used to shape the robot's motion, ultimately creating a more natural interaction between the two agents.
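The article does not spell out how SensFoot extracts gait parameters or how they drive the robot. The Python sketch below shows one simple, assumed pipeline: detect stance (foot-flat) phases from the gyroscope magnitude of a foot-worn IMU, derive stride time and cadence from the intervals between them, turn a stride length into walking speed, and feed that speed into a proportional controller that keeps the robot at a set distance behind the human. The thresholds, the gain, the speed limit and the premise that stride length comes from a separate estimator are all hypothetical.

```python
def detect_stance_starts(gyro_norm, threshold=0.5, min_samples=5):
    """Return the sample indices at which stance (foot-flat) phases begin.

    A sample is 'still' when the gyroscope magnitude (rad/s) is below
    `threshold`; a stance phase is a run of at least `min_samples` still
    samples. Both values are hypothetical tuning parameters.
    """
    starts = []
    run_start = None
    for i, w in enumerate(gyro_norm):
        if w < threshold:
            if run_start is None:
                run_start = i
            # Register the stance phase once the still run is long enough.
            if i - run_start + 1 == min_samples:
                starts.append(run_start)
        else:
            run_start = None  # foot is swinging; reset the still run
    return starts


def gait_parameters(gyro_norm, fs, stride_length):
    """Estimate mean stride time (s), cadence (steps/min) and speed (m/s).

    fs: sampling rate in Hz. stride_length: mean stride length in metres,
    assumed to come from a separate estimator.
    """
    starts = detect_stance_starts(gyro_norm)
    # One stride spans consecutive stance onsets of the same foot.
    stride_times = [(b - a) / fs for a, b in zip(starts, starts[1:])]
    if not stride_times:
        return None
    stride_time = sum(stride_times) / len(stride_times)
    return {
        "stride_time_s": stride_time,
        "cadence_steps_per_min": 2 * 60.0 / stride_time,  # two steps per stride
        "speed_m_per_s": stride_length / stride_time,
    }


def robot_speed_command(human_speed, distance, desired_distance=1.0,
                        gain=0.5, v_max=1.2):
    """Proportional speed command for a robot walking behind the human.

    The robot matches the human's speed, speeds up when it is farther than
    the desired distance and slows down when too close, clipped to [0, v_max].
    """
    v = human_speed + gain * (distance - desired_distance)
    return max(0.0, min(v_max, v))


# Usage: synthetic gyro magnitude at 100 Hz, 0.2 s stance + 0.8 s swing per stride.
signal = ([0.1] * 20 + [3.0] * 80) * 4
params = gait_parameters(signal, fs=100, stride_length=1.4)
print(params)
print(robot_speed_command(params["speed_m_per_s"], distance=1.2))  # capped at v_max
```

Clipping the command keeps the robot within a safe speed range even when the distance error is large; a real controller would also need to handle heading, which this sketch ignores.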

The researchers built a prototype IMU system called SensFoot and evaluated its accuracy and efficacy in a series of experiments in which humans and robots interacted with each other. They recruited 19 participants and asked them to complete two tasks, which the researchers call the "following" task and the "follow-me" task.

First, they verified the system's accuracy by comparing gait parameters computed by a reference vision system with those derived from the IMU data. They then tested the sensors in a real human-robot interaction scenario.
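The article does not list the exact metrics used in this validation step. A common way to compare a new measurement method against a reference is to look at the paired differences: their mean (bias), mean absolute error, root-mean-square error and Bland-Altman 95% limits of agreement. The minimal Python sketch below assumes the IMU and vision estimates have already been paired stride by stride; the function name and inputs are hypothetical.

```python
import math
import statistics


def agreement(imu, reference):
    """Agreement statistics for paired IMU and reference measurements.

    imu, reference: equal-length sequences of the same gait parameter
    (e.g. stride length in metres), one pair per stride.
    """
    if len(imu) != len(reference) or len(imu) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    diffs = [a - b for a, b in zip(imu, reference)]
    n = len(diffs)
    bias = sum(diffs) / n                            # systematic over/underestimation
    mae = sum(abs(d) for d in diffs) / n             # typical error size
    rmse = math.sqrt(sum(d * d for d in diffs) / n)  # penalises large errors more
    sd = statistics.stdev(diffs)                     # sample standard deviation
    # Bland-Altman: assuming roughly normal differences, about 95% of them
    # are expected to fall within bias +/- 1.96 SD.
    loa = (bias - 1.96 * sd, bias + 1.96 * sd)
    return {"bias": bias, "mae": mae, "rmse": rmse, "limits_of_agreement": loa}


# Usage with made-up stride lengths (m), purely for illustration.
print(agreement([1.32, 1.41, 1.38, 1.45], [1.30, 1.40, 1.40, 1.42]))
```

Reporting the bias separately from the RMSE shows whether the wearable system consistently over- or underestimates a parameter, which could be corrected by calibration, or is simply noisy.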

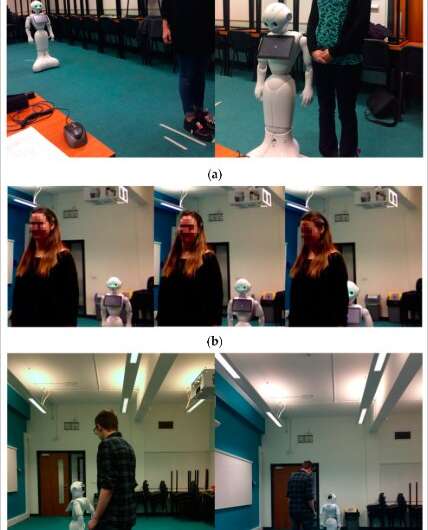

Example of tests with users (a) and sequences from the following task (b) and follow-me task (c). Credit: Moschetti et al.

"We experimented with 19 human participants in two different tasks, to provide real-time evaluations of gait parameters for a mobile robot moving together with a human, and studied the feasibility and the perceived usability by the participants," the researchers wrote. "The results show the feasibility of the system, which obtained positive feedback from the users, giving valuable information for the development of a natural interaction system, where the robot perceives human movements by means of wearable sensors."

The evaluations suggest that the system is feasible and that IMUs could help improve interactions between humans and robots moving around a shared space. Participants who tested the sensors also gave positive feedback. In the future, the researchers' approach could pave the way for more adaptive and efficient assistive robotics solutions that integrate IMUs or other sensors with machine learning algorithms.

"Future works concern the possibility to enhance the system perception of the walking user, improving accuracy in extracted parameters, and the adaptability of the robot, overcoming the current limitations in control and integration," the researchers wrote.

More information: Alessandra Moschetti et al. Wearable Sensors for Human–Robot Walking Together, Robotics (2019). DOI: 10.3390/robotics8020038

© 2019 Science X Network