Credit: Arrichiello et al.

Researchers at the University of Cassino and Southern Lazio, in Italy, have developed an architecture that lets a user operate an assistive robot via a P300-based brain-computer interface (BCI). This architecture, presented in a paper pre-published on arXiv, could allow people with severe motor disabilities to perform manipulation tasks, simplifying their daily lives.

The system developed by the researchers is based on a lightweight robot manipulator that receives high-level commands from users via a BCI based on the P300 paradigm. In neuroscience, the P300 is an event-related potential: a positive deflection in the brain's electrical activity that appears roughly 300 milliseconds after a person notices a rare or task-relevant stimulus.

"The main objective of our work was to realize a system that allows users to generate high-level directives for robotic manipulators through brain computer interfaces (BCIs)," Filippo Arrichiello, one of the researchers who carried out the study, told TechXplore. "Such directives are then translated into motion commands for the robotic manipulator that autonomously achieves the assigned task, while ensuring the safety of the user."

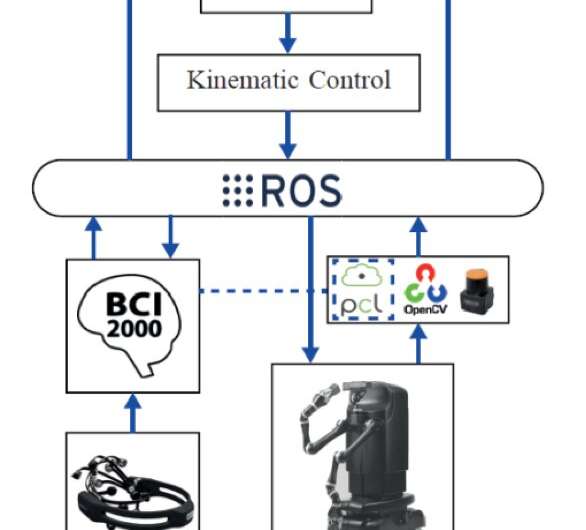

The architecture developed by the researchers has three key components: a P300 BCI device, an assistive robot and a perception system. Arrichiello and his colleagues integrated these three elements within ROS (Robot Operating System), a widely used software middleware for robotics applications.

The architecture's first component, the P300 BCI device, measures electrical activity in the brain via electroencephalography (EEG). It then translates these brain signals into commands that can be fed to a computer.

"The P300 paradigm for BCI uses the reaction of the user's brain to external stimuli, i.e. flashing of icons on a screen, to allow the user to select an element on the screen by reacting (for example, by counting) each time that desired icon flashes," Arrichiello explained. "This allows the user to perform a set of choices among a set of predefined elements and build high-level messages for the robot concerning the action to perform, such as the manipulation of an object."
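The selection principle Arrichiello describes can be illustrated with a small simulation. The sketch below is not the researchers' classifier: it assumes synthetic single-channel data, a 100 Hz sampling rate and a simple "largest average amplitude in a 300-500 ms window" rule, and every name in it (`simulate_epochs`, `select_icon`, the icon labels) is hypothetical.

```python
import random
from statistics import mean

SAMPLE_RATE_HZ = 100  # assumed sampling rate, not taken from the paper
# Samples covering 300-500 ms after each flash (an assumed P300 window).
P300_WINDOW = (300 * SAMPLE_RATE_HZ // 1000, 500 * SAMPLE_RATE_HZ // 1000)

def simulate_epochs(is_target, rng, n_flashes=15, n_samples=80):
    """Generate synthetic post-flash EEG epochs (microvolts) for one icon."""
    epochs = []
    for _ in range(n_flashes):
        epoch = [rng.gauss(0.0, 5.0) for _ in range(n_samples)]
        if is_target:
            # Add a positive bump where a P300 would be expected.
            for i in range(*P300_WINDOW):
                epoch[i] += 4.0
        epochs.append(epoch)
    return epochs

def select_icon(epochs_by_icon):
    """Pick the icon whose flash-averaged signal is largest in the window."""
    start, stop = P300_WINDOW
    scores = {}
    for icon, epochs in epochs_by_icon.items():
        # Average across repeated flashes, sample by sample, to suppress noise.
        averaged = [mean(samples) for samples in zip(*epochs)]
        scores[icon] = mean(averaged[start:stop])
    return max(scores, key=scores.get), scores

rng = random.Random(42)
epochs_by_icon = {
    "cup": simulate_epochs(False, rng),
    "bottle": simulate_epochs(True, rng),  # the icon the simulated user attends to
    "spoon": simulate_epochs(False, rng),
}
chosen, scores = select_icon(epochs_by_icon)
print(chosen, {icon: round(score, 2) for icon, score in scores.items()})
```

Averaging over repeated flashes is the key step: a single response is buried in noise, but the noise shrinks as more flashes of the same icon are combined. Practical P300 systems typically use multi-channel recordings and a trained classifier rather than a fixed window.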

To carry out the actions that users select, the researchers used a lightweight robotic manipulator, the Kinova Jaco. The robot's control software receives the high-level directives generated by the user via the BCI and translates them into motion commands. Its motion is controlled by a closed-loop inverse kinematics algorithm that can manage several tasks simultaneously.
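To give a sense of what closed-loop inverse kinematics means, here is a minimal sketch for a planar two-link arm, far simpler than the Jaco. It is an illustration under stated assumptions, not the researchers' controller: the link lengths, gain, time step and damping factor are all hypothetical values, and the damped least-squares inverse is one common choice among several.

```python
import math

LINK_1, LINK_2 = 0.4, 0.3  # link lengths in metres (hypothetical arm)

def forward(q):
    """End-effector position of a planar two-link arm."""
    return [LINK_1 * math.cos(q[0]) + LINK_2 * math.cos(q[0] + q[1]),
            LINK_1 * math.sin(q[0]) + LINK_2 * math.sin(q[0] + q[1])]

def jacobian(q):
    """Partial derivatives of the end-effector position w.r.t. the joints."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return [[-LINK_1 * s1 - LINK_2 * s12, -LINK_2 * s12],
            [LINK_1 * c1 + LINK_2 * c12, LINK_2 * c12]]

def damped_inverse_times(J, v, damping=0.01):
    """Compute J^T (J J^T + damping^2 I)^-1 v for a 2x2 Jacobian."""
    a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
    d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
    det = a * d - b * b
    w0 = (d * v[0] - b * v[1]) / det
    w1 = (a * v[1] - b * v[0]) / det
    return [J[0][0] * w0 + J[1][0] * w1, J[0][1] * w0 + J[1][1] * w1]

def clik(q, target, gain=5.0, dt=0.01, steps=2000):
    """Drive the end effector to a fixed target by feeding back the position error."""
    for _ in range(steps):
        position = forward(q)
        error = [target[0] - position[0], target[1] - position[1]]
        # Closing the loop: the commanded joint velocity depends on the
        # current error, so integration drift is corrected at every step.
        dq = damped_inverse_times(jacobian(q), [gain * error[0], gain * error[1]])
        q = [q[0] + dq[0] * dt, q[1] + dq[1] * dt]
    return q

final_q = clik([0.3, 0.8], (0.5, 0.2))
print(forward(final_q))
```

The loop never solves for the joint angles directly. It repeatedly measures how far the hand is from the goal and nudges the joints in the direction that reduces that error, which is what makes the scheme "closed-loop".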

"The control architecture we developed allows the robot to achieve multiple and prioritized objectives, i.e., achieving the manipulation task while avoiding collision with the user and/or with external obstacles, and while respecting constraints like the robot mechanical limits," Arrichiello said.
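One classic way to handle prioritized objectives is null-space projection: a lower-priority motion is filtered so that it cannot disturb the higher-priority task. The sketch below shows that idea on a hypothetical planar three-link arm. It is not necessarily the scheme used in the paper, and the link lengths, joint values and function names are assumptions made for illustration.

```python
import math

LINKS = (0.3, 0.25, 0.15)  # link lengths in metres (hypothetical arm)

def jacobian(q):
    """2x3 Jacobian of the end-effector position of a planar three-link arm."""
    angles, total = [], 0.0
    for qi in q:
        total += qi
        angles.append(total)
    J = [[0.0] * 3, [0.0] * 3]
    for j in range(3):
        for i in range(j, 3):  # joint j moves link j and every link after it
            J[0][j] -= LINKS[i] * math.sin(angles[i])
            J[1][j] += LINKS[i] * math.cos(angles[i])
    return J

def pseudo_inverse(J):
    """Right pseudo-inverse J^T (J J^T)^-1, valid away from singularities."""
    a = sum(J[0][k] * J[0][k] for k in range(3))
    b = sum(J[0][k] * J[1][k] for k in range(3))
    d = sum(J[1][k] * J[1][k] for k in range(3))
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]
    return [[J[0][k] * inv[0][c] + J[1][k] * inv[1][c] for c in range(2)]
            for k in range(3)]

def prioritized_velocity(q, task_velocity, secondary_velocity):
    """Joint velocity that tracks the primary task exactly and follows the
    secondary request only within the primary task's null space."""
    J = jacobian(q)
    Jp = pseudo_inverse(J)
    primary = [sum(Jp[k][c] * task_velocity[c] for c in range(2)) for k in range(3)]
    # Null-space projector N = I - J^+ J removes any component of the
    # secondary motion that would move the end effector.
    N = [[(1.0 if r == c else 0.0) - sum(Jp[r][m] * J[m][c] for m in range(2))
          for c in range(3)] for r in range(3)]
    projected = [sum(N[r][c] * secondary_velocity[c] for c in range(3))
                 for r in range(3)]
    return [p + s for p, s in zip(primary, projected)]

q = [0.4, 0.6, -0.3]
dq = prioritized_velocity(q, [0.1, -0.05], [1.0, -2.0, 0.5])
J = jacobian(q)
hand_velocity = [sum(J[r][k] * dq[k] for k in range(3)) for r in range(2)]
print(hand_velocity)
```

Here the secondary request stands in for something like steering away from a joint limit or an obstacle. Whatever it asks for, the hand velocity still matches the primary command, because the arm has one more joint than the task needs.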

The final component of the architecture devised by Arrichiello and his colleagues is a perception system built around an RGB-D sensor, a Microsoft Kinect One, among other components. The system uses the sensor to detect and locate the objects that the robot is to manipulate within its workspace. The sensor can also detect the user's face, estimate the position of the user's mouth and recognize obstacles.
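An RGB-D sensor reports a depth value for each pixel, which is what lets a perception system turn a detection in the image into a 3-D position the robot can reach for. A minimal sketch of that conversion, using the standard pinhole camera model, is shown below. The intrinsic parameters are made-up round numbers, not the Kinect's calibration, and the names `Intrinsics` and `deproject` are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical values used below)."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, x coordinate
    cy: float  # principal point, y coordinate

def deproject(u, v, depth_m, k):
    """Convert pixel (u, v) with a depth in metres to a 3-D point in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)

camera = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
# A pixel 100 columns to the right of the image centre, seen 2 m away.
print(deproject(420, 240, 2.0, camera))
```

A further rigid transformation, obtained by calibrating the camera against the robot, would be needed to express the point in the robot's own frame.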

"The practical implications of our study are quite straightforward and ambitious," Arrichiello said. "Its final aim is to move in the direction of building a reliable and effective robotic set-up that can finally help users with severe mobility impairments to perform daily-life operations autonomously and without the constant support of a caregiver."

When the researchers started working on a BCI-driven assistive robot, they first experimented with a single fixed-base manipulator, marker-based object recognition and a preconfigured user interface. They have now advanced this architecture considerably, to the point that it allows users to handle more complex robotic systems, such as mobile robots with dual arms.

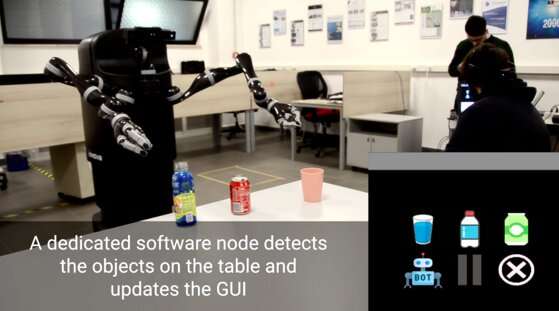

"We have also improved the perception module, which can now recognize and localize objects based on their shapes," Arrichiello explained. "Finally, we worked on the interaction between the perception module and the graphical user interface (GUI) to create GUI dynamics in accordance with the perception module's detections (e.g., the user's interface is updated on the basis of the number and type of objects recognized on a table by the perception module)."
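The link between perception and interface can be pictured as a function that rebuilds the list of selectable icons whenever the set of detected objects changes. The sketch below is only an illustration of that idea. The `Detection` type, the labels and the numbering scheme are assumptions, not details from the paper.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass(frozen=True)
class Detection:
    """One object reported by a (hypothetical) perception module."""
    label: str
    position: tuple  # (x, y, z) in metres

def build_menu(detections):
    """Return one selectable entry per detected object, numbering repeated labels."""
    seen = Counter()
    menu = []
    for detection in detections:
        seen[detection.label] += 1
        menu.append(f"{detection.label} {seen[detection.label]}")
    return menu

table = [
    Detection("bottle", (0.40, 0.10, 0.02)),
    Detection("cup", (0.35, -0.05, 0.02)),
    Detection("bottle", (0.50, 0.20, 0.02)),
]
print(build_menu(table))
```

An empty table yields an empty menu, and two bottles yield two distinct entries, so the user is only ever offered choices that correspond to something the robot can actually see.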

To evaluate the performance and effectiveness of their architecture, Arrichiello and his colleagues carried out a series of preliminary experiments, which yielded promising results. In the future, their system could change the lives of individuals affected by motor disabilities and physical injuries, allowing them to complete a wide variety of manipulation tasks.

"Future research will firstly be aimed at improving robustness and reliability of the architecture, beyond increasing the application domain of the system," Arrichiello said. "Moreover, we will test different BCI paradigms, i.e., different ways to use the BCI, such as those based on motor imagery, in order to identify the most suitable one for teleoperation applications, where the user might control the robot using the BCI as a sort of joystick, without limiting the motion commands impartable to the robots to a predefined set."

More information: Assistive robot operated via P300-based brain computer interface. arXiv:1905.12927 [cs.RO]. arxiv.org/abs/1905.12927 webuser.unicas.it/lai/robotica/

© 2019 Science X Network