June 14, 2019 feature

Spintronic memory cells for neural networks

In recent years, researchers have proposed a wide variety of hardware implementations for feed-forward artificial neural networks. These implementations include three key components: a dot-product engine that computes convolution and fully-connected layer operations, memory elements that store intermediate inter- and intra-layer results, and circuits that compute non-linear activation functions.
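To make the three components concrete, here is a minimal software sketch of a feed-forward pass. It is only an illustration: the function names, the choice of ReLU as the activation, and the example weights are assumptions made for this sketch and do not come from the researchers' hardware design.

```python
from typing import List


def dot_product(weights: List[float], inputs: List[float]) -> float:
    """Dot-product engine: multiply-accumulate over one row of weights."""
    return sum(w * x for w, x in zip(weights, inputs))


def relu(value: float) -> float:
    """Non-linear activation (ReLU is an assumed choice for this sketch)."""
    return max(0.0, value)


def feed_forward(layers: List[List[List[float]]], inputs: List[float]) -> List[float]:
    """Run the inputs through each fully-connected layer in turn."""
    # 'stored' plays the role of the memory element that holds
    # intermediate results between layers.
    stored = list(inputs)
    for weight_rows in layers:
        stored = [relu(dot_product(row, stored)) for row in weight_rows]
    return stored


# Hypothetical two-layer network: 2 inputs -> 2 hidden units -> 1 output.
example_layers = [
    [[1.0, -1.0], [0.5, 0.5]],
    [[1.0, 1.0]],
]
result = feed_forward(example_layers, [2.0, 1.0])
print(result)
```

In dedicated hardware each of these roles is filled by a separate circuit block, which is why the energy cost of the memory elements and dot-product engine matters so much.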

Dot-product engines, which are essentially high-efficiency accelerators, have so far been successfully implemented in hardware in many different ways. In a study published last year, researchers at the University of Notre Dame in Indiana used dot-product circuits to design a cellular neural network (CeNN)-based accelerator for convolutional neural networks (CNNs).

The same team, in collaboration with other researchers at the University of Minnesota, has now developed a CeNN cell based on spintronic (i.e., spin electronic) elements with high energy efficiency. This cell, presented in a paper pre-published on arXiv, can be used as a neural computing unit.

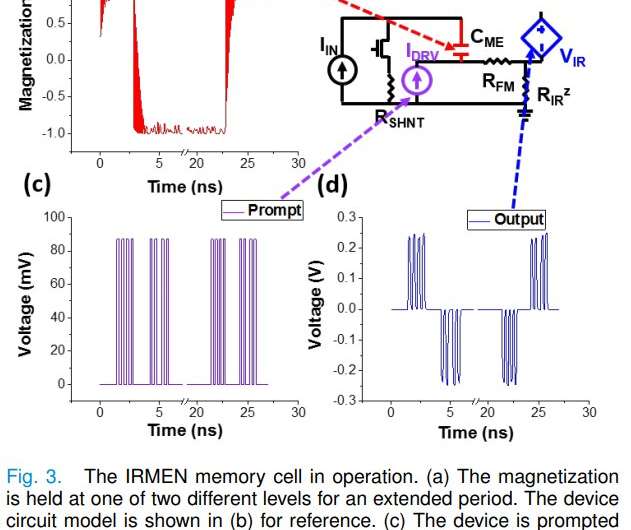

The cells proposed by the researchers, called Inverse Rashba-Edelstein Magnetoelectric Neurons (IRMENs), resemble standard cells of cellular neural networks in that they are based around a capacitor, but in IRMEN cells, the capacitor represents an input mechanism rather than a true state. To ensure that the CeNN cells are able to sustain the complex operations typically performed by CNNs, the researchers also proposed the use of a dual-circuit neural network.
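For context, the state of a conventional CeNN cell is usually described by the textbook Chua-Yang equation, in which the voltage on the cell's capacitor is the state variable. The form below is that standard model, not the IRMEN equations from the paper, and the symbols follow the usual convention rather than anything stated in this article: $x_{ij}$ is the cell state, $y_{kl}$ and $u_{kl}$ are the outputs and inputs of neighboring cells in the neighborhood $N(i,j)$, $A$ and $B$ are the feedback and feed-forward templates, and $z$ is a bias.

$$
C \frac{dx_{ij}}{dt} = -\frac{x_{ij}}{R} + \sum_{(k,l) \in N(i,j)} A_{ij,kl}\, y_{kl} + \sum_{(k,l) \in N(i,j)} B_{ij,kl}\, u_{kl} + z,
\qquad
y_{ij} = \tfrac{1}{2}\left( |x_{ij} + 1| - |x_{ij} - 1| \right)
$$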

The team carried out a series of simulations using HSPICE and Matlab to determine whether their spintronic memory cells could enhance the performance, speed and energy efficiency of a neural network in an image classification task. In these tests, IRMEN cells outperformed purely charge-based implementations of the same neural network, consuming ≈ 100 pJ in total per image processed.

"The performance of these cells is simulated in a CeNN-accelerated CNN performing image classification," the researchers wrote in their paper. "The spintronic cells significantly reduce the energy and time consumption relative to their charge-based counterparts, needing only ≈ 100 pJ and ≈ 42 ns to compute all but the final fully-connected CNN layer, while maintaining a high accuracy."

Compared to previously proposed approaches, IRMEN cells can save a substantial amount of energy and time. For instance, a purely charge-based version of the same CeNN used by the researchers requires over 12 nJ to compute all convolution, pooling and activation stages, while the IRMEN CeNN needs less than 0.14 nJ.
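The size of that gap can be checked with a quick calculation. The sketch below assumes that both figures are in nanojoules and treats them as the bounds quoted above (over 12 nJ and under 0.14 nJ), so the result is a rough lower bound on the savings rather than a number reported in the paper. The constant and function names are illustrative.

```python
# Bounds quoted in the article; both assumed to be in nanojoules.
CHARGE_BASED_NJ = 12.0  # charge-based CeNN needs more than this
IRMEN_NJ = 0.14         # IRMEN CeNN needs less than this


def energy_ratio(baseline_nj: float, improved_nj: float) -> float:
    """Return how many times more energy the baseline uses."""
    return baseline_nj / improved_nj


ratio = energy_ratio(CHARGE_BASED_NJ, IRMEN_NJ)
print(f"Charge-based design uses at least ~{ratio:.0f}x more energy")
```

Because the charge-based figure is a lower bound and the IRMEN figure is an upper bound, the actual ratio would be somewhat larger than the value computed here.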

"With the growing importance of neuromorphic computing and beyond-CMOS computation, the search for new devices to fill these roles is crucial," the researchers concluded in their paper. "We have proposed a novel magnetoelectric analog memory element with a built-in transfer function that also allows it to act as the cell in a CeNN."

The findings gathered by this team of researchers suggest that applying spintronics in neuromorphic computing could have remarkable advantages. In the future, the IRMEN memory cells proposed in their paper could help to enhance the performance, speed and energy efficiency of convolutional neural networks in a variety of classification tasks.

More information: Nonvolatile spintronic memory cells for neural networks. arXiv:1905.12679 [cs.ET]. arxiv.org/abs/1905.12679

A mixed signal architecture for convolutional neural networks. arXiv:1811.02636v1. arxiv.org/abs/1811.02636

© 2019 Science X Network