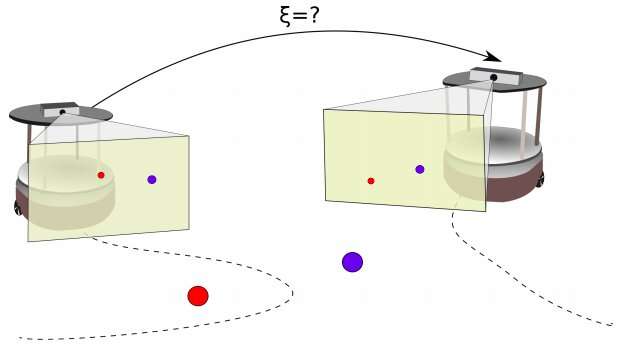

A representation of the problem addressed in the recent study. Two robots observe a pair of 3-D points. The researchers propose a pipeline that simultaneously estimates the depth of the two points and the relative pose between the robots. Credit: Rodrigues et al.

Researchers at the University of Porto in Portugal and KTH Royal Institute of Technology in Sweden have recently developed a framework that can estimate 3-D depth and the relative pose of two ground robots collaborating on a given task. Their framework, outlined in a paper pre-published on arXiv, could help to enhance the performance of multiple robots in tasks that involve exploration, manipulation, coverage, sampling and patrolling, as well as in search and rescue missions.

In recent years, a growing number of studies have aimed to develop solutions for coordinating multiple robots within a decentralized architecture. To tackle a given task effectively as a group, individual robots within a swarm or formation should be at least partly aware of the pose of other agents in their surroundings.

This pose-related data, known as relative pose information, allows an agent to optimize a goal function, re-plan its trajectories and avoid collisions with other robots. In some real-world settings, however, it can be difficult for agents to obtain accurate relative pose estimates. For instance, during extreme missions in remote or secluded areas, robots might encounter problems with communication channels and with high-precision positioning or motion capture systems.

With this in mind, the team of researchers from the University of Porto and KTH set out to develop a framework that could enhance the 3-D depth estimation and relative pose estimation of ground robots that are working together towards a common goal. They specifically focused on a scenario involving two autonomous ground vehicles navigating an unknown environment, both equipped with perspective cameras.

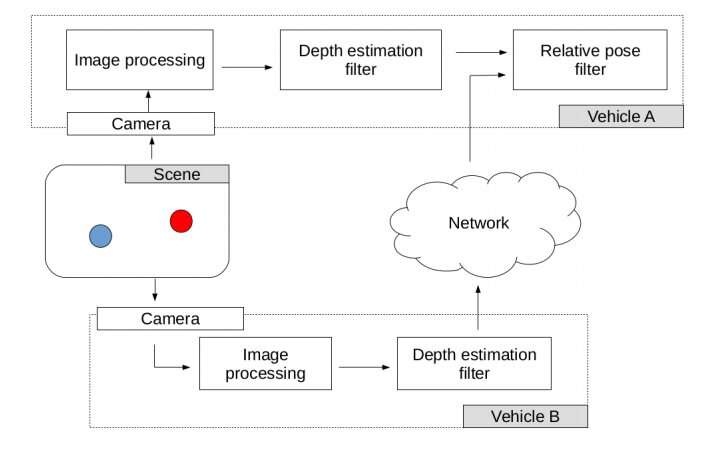

Pipeline of the framework proposed by the researchers. Credit: Rodrigues et al.

"The depth estimation problem aims at recovering the 3-D information of the environment," the researchers explain in their paper. "The relative localization problem consists of estimating the relative pose between two robots, by sensing each other's pose or sharing information about the perceived environment."

Most existing solutions for depth estimation and relative localization in robots work by analyzing a disconnected set of data, without taking into account the chronological order of events. The approach proposed by the researchers, on the other hand, considers the information that each robot gathers individually via its camera and then combines it to compute the relative pose between the two. The depth estimates gathered by the two agents, together with the robots' input commands, are fed to an extended Kalman filter (EKF), which processes this data to estimate the relative pose between the robots.
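To make the filtering step more concrete, here is a minimal sketch of one EKF predict/update cycle for a planar relative pose. It is not the filter from the paper: the unicycle motion model, the state layout (x, y and heading of robot B expressed in robot A's frame), the assumption that a full relative-pose measurement is available, and all function and variable names are illustrative assumptions.

```python
import numpy as np


def wrap_angle(a):
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + np.pi) % (2.0 * np.pi) - np.pi


def ekf_predict(x, P, u_a, u_b, Q, dt):
    """Propagate the relative pose of robot B in robot A's frame.

    x   : state [px, py, theta] (assumed layout)
    u_a : (v_a, w_a) linear and angular velocity commands of robot A
    u_b : (v_b, w_b) linear and angular velocity commands of robot B
    Both robots are assumed to follow a unicycle model (an assumption).
    """
    px, py, th = x
    v_a, w_a = u_a
    v_b, w_b = u_b

    # Euler-discretised relative kinematics of two unicycles.
    x_pred = np.array([
        px + dt * (v_b * np.cos(th) - v_a + w_a * py),
        py + dt * (v_b * np.sin(th) - w_a * px),
        wrap_angle(th + dt * (w_b - w_a)),
    ])

    # Jacobian of the discretised motion model with respect to the state.
    F = np.array([
        [1.0, dt * w_a, -dt * v_b * np.sin(th)],
        [-dt * w_a, 1.0, dt * v_b * np.cos(th)],
        [0.0, 0.0, 1.0],
    ])
    P_pred = F @ P @ F.T + Q
    return x_pred, P_pred


def ekf_update(x, P, z, R):
    """Correct the state with a direct relative-pose measurement z.

    The measurement model is the identity (H = I), which assumes some
    upstream step already produces a noisy relative pose [px, py, theta].
    """
    H = np.eye(3)
    innovation = z - H @ x
    innovation[2] = wrap_angle(innovation[2])

    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)

    x_new = x + K @ innovation
    x_new[2] = wrap_angle(x_new[2])
    P_new = (np.eye(3) - K @ H) @ P
    return x_new, P_new
```

In this sketch the input commands drive the prediction, while the measurement pulls the estimate back toward what the cameras suggest. Processing the data in time order like this is what distinguishes a filter from methods that treat observations as a disconnected set.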

"While previous solutions for this problem consider a set of two or more images from the environment or use some special fleet configuration (e.g. the robots are in each others' field of view or have the ability of sensing the bearing-information about each others' positions), we propose a framework that shares a set of common observations of the environment in the respective local frame of each robot (3-D point features are employed)," the researchers write.

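As a rough illustration of how common 3-D point observations expressed in each robot's local frame can yield a relative pose, the sketch below uses the standard Kabsch (SVD-based) rigid alignment. This is a swapped-in textbook technique, not the estimator described in the paper. It assumes at least three matched, non-collinear points with known correspondences, and the function name `relative_pose_from_points` is hypothetical. The returned rotation `R` and translation `t` map coordinates from robot B's frame into robot A's frame, and in a full pipeline they could serve as the measurement passed to a filter.

```python
import numpy as np


def relative_pose_from_points(pts_a, pts_b):
    """Estimate R, t such that pts_a ≈ R @ pts_b + t (Kabsch alignment).

    pts_a : (N, 3) points observed in robot A's local frame
    pts_b : (N, 3) the same points observed in robot B's local frame
    Rows are assumed to be matched one-to-one (an assumption).
    """
    pts_a = np.asarray(pts_a, dtype=float)
    pts_b = np.asarray(pts_b, dtype=float)
    if pts_a.shape != pts_b.shape or pts_a.ndim != 2 or pts_a.shape[1] != 3:
        raise ValueError("expected two matching (N, 3) arrays")
    if pts_a.shape[0] < 3:
        raise ValueError("this sketch needs at least three matched points")

    # Centre both point sets on their centroids.
    centroid_a = pts_a.mean(axis=0)
    centroid_b = pts_b.mean(axis=0)

    # Cross-covariance between the centred sets.
    H = (pts_b - centroid_b).T @ (pts_a - centroid_a)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    t = centroid_a - R @ centroid_b
    return R, t
```
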
The researchers evaluated their framework in a series of simulated scenarios, using two TurtleBot ground robots. Their findings suggest that the approach enables effective depth estimation and relative localization for two robots collaborating on a task. In future work, they plan to consider the active control of the two robots in the same scenario, as well as other aspects relevant to their coordination.

More information: A framework for depth estimation and relative localization of ground robots using computer vision. arXiv:1908.00309 [cs.RO]. arxiv.org/abs/1908.00309

© 2019 Science X Network