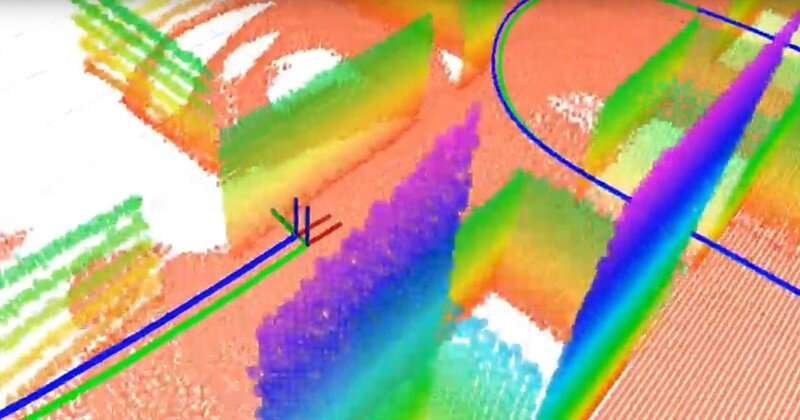

The team built a 3-D plane-based map using a 3-D LiDAR and an inertial sensor. LiDAR is like radar but uses light instead of radio waves. Points of different colors belong to different planes (which serve as the landmarks for navigation); the green line is the true trajectory, and the blue line is the trajectory estimated by the team's simultaneous localization and mapping (SLAM) algorithm. Credit: University of Delaware

Scientists around the world are racing to develop self-driving vehicles, but a few essential components have yet to be perfected. One is localization: the vehicle's ability to determine its position and motion. Another is mapping: the vehicle's ability to model its surroundings so that it can safely transport passengers to the right place.

The question is: How do you give a vehicle a sense of direction? While Global Positioning System (GPS) devices can help, they are not available or reliable in all contexts. Instead, many experts are investigating simultaneous localization and mapping, or SLAM, a notoriously difficult problem in the field of robotics. Novel algorithms developed by Guoquan (Paul) Huang, an assistant professor of mechanical engineering, electrical and computer engineering, and computer and information sciences at the University of Delaware, are bringing the answer closer into view.

Huang uses visual-inertial navigation systems, which combine cameras with inertial sensors: gyroscopes, which measure rotation rate to track orientation, and accelerometers, which measure acceleration. Using data from these relatively inexpensive, widely available components, Huang's algorithms estimate a device's motion and location.

For example, when his team connected their system to a laptop and carried it around UD's Spencer Laboratory, home of the Department of Mechanical Engineering, they generated sufficient data to map the building while tracking the motion of the laptop itself. In an autonomous vehicle, similar sensors and cameras would be attached to a robot in the vehicle.
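To make the inertial half of such a system concrete, here is a minimal planar sketch of how gyroscope and accelerometer readings can be integrated into a position estimate, a process often called dead reckoning. It is not Huang's algorithm: the function name `dead_reckon`, the 2-D setting, and the assumption that gravity has already been removed from the accelerometer readings are illustrative simplifications. The second usage case shows why inertial sensors alone are not enough: a small constant accelerometer bias grows quadratically into position error, one reason such systems pair the inertial sensor with cameras.

```python
import math

# Hypothetical planar (2-D) sketch of inertial dead reckoning. Assumes gravity
# has already been removed from the accelerometer readings and ignores noise;
# real visual-inertial systems work in 3-D and also estimate sensor biases.


def dead_reckon(samples, dt):
    """Integrate (yaw_rate, ax_body, ay_body) samples taken every dt seconds.

    Starts at rest at the origin and returns the final (x, y, heading).
    """
    x = y = vx = vy = heading = 0.0
    for yaw_rate, ax_body, ay_body in samples:
        # Gyroscope: integrate the rotation rate to update orientation.
        heading += yaw_rate * dt
        # Rotate the body-frame acceleration into the world frame.
        c, s = math.cos(heading), math.sin(heading)
        ax = c * ax_body - s * ay_body
        ay = s * ax_body + c * ay_body
        # Accelerometer: integrate acceleration twice to obtain position.
        x += vx * dt + 0.5 * ax * dt * dt
        y += vy * dt + 0.5 * ay * dt * dt
        vx += ax * dt
        vy += ay * dt
    return x, y, heading


if __name__ == "__main__":
    dt = 0.01
    # Accelerate straight ahead at 1 m/s^2 for 1 s: x should be about 0.5 m.
    print(dead_reckon([(0.0, 1.0, 0.0)] * 100, dt))
    # A stationary device whose accelerometer has a 0.01 m/s^2 bias, for 60 s:
    # the position estimate drifts by about 0.5 * 0.01 * 60**2 = 18 m.
    print(dead_reckon([(0.0, 0.01, 0.0)] * 6000, dt))
```

Even a bias of one hundredth of a meter per second squared produces meters of drift within a minute, which is why visual measurements are used to keep the inertial estimate in check.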

An autonomous vehicle's ability to track its own motion and the motion of objects around it is critical. "We need to localize the vehicle before we can automatically control the vehicle," said Huang. "The vehicle needs to know its location in order to continue."

Then there's the issue of safety. "In an urban scenario, for example, there are pedestrians and other vehicles, so ideally the vehicle should be able to track its own motion as well as the motion of moving objects in its surroundings," said Huang.

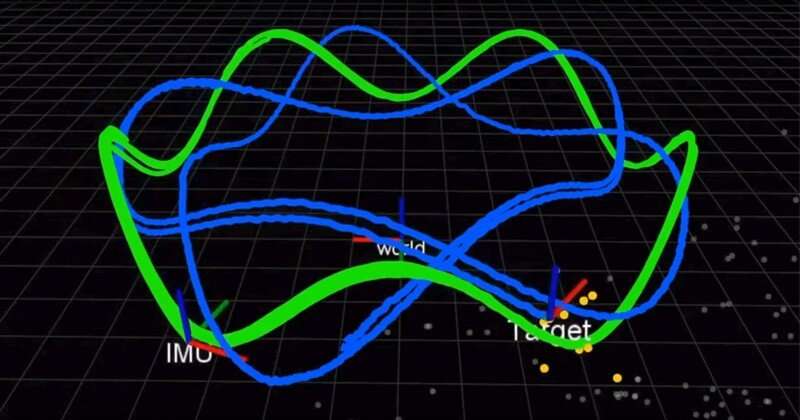

The team uses a camera and an inertial measurement unit (IMU) to simultaneously localize a robot and track a moving target. The green line is the robot's trajectory and the blue line is the target's trajectory. Credit: University of Delaware

In a paper published earlier this year in the International Journal of Robotics Research (IJRR), Huang and his team found a better, more accurate way to combine the inertial measurements. Until now, scientists used discrete integration, a numerical technique that approximates the area under a curve by summing small slices, to approximate the solution. Huang's group instead derived closed-form solutions and showed that they are more accurate than existing methods. Even better, they are sharing their solution.
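The difference between approximating an integral and solving it exactly can be seen in one dimension. The sketch below is a simplified illustration, not the method from the paper: it assumes, hypothetically, that acceleration varies linearly between consecutive inertial samples, and compares a first-order (explicit Euler) update with the exact closed-form update under that assumption. The function names are illustrative.

```python
# Hypothetical 1-D illustration: explicit Euler vs. a closed-form update,
# assuming acceleration varies linearly between consecutive IMU samples.


def euler_step(p, v, a0, dt):
    """Explicit Euler: treat velocity (v) and acceleration (a0) as constant
    over the whole step."""
    return p + v * dt, v + a0 * dt


def closed_form_step(p, v, a0, a1, dt):
    """Exact update if acceleration ramps linearly from a0 to a1 over dt."""
    v_new = v + 0.5 * (a0 + a1) * dt
    p_new = p + v * dt + a0 * dt**2 / 2 + (a1 - a0) * dt**2 / 6
    return p_new, v_new


def integrate(accel, t_end, dt):
    """Integrate samples of accel(t), starting from rest at the origin.

    Returns ((p, v) from Euler, (p, v) from the closed-form update).
    """
    n = round(t_end / dt)
    p_eu = v_eu = p_cf = v_cf = 0.0
    for i in range(n):
        a0, a1 = accel(i * dt), accel((i + 1) * dt)
        p_eu, v_eu = euler_step(p_eu, v_eu, a0, dt)
        p_cf, v_cf = closed_form_step(p_cf, v_cf, a0, a1, dt)
    return (p_eu, v_eu), (p_cf, v_cf)


if __name__ == "__main__":
    # Ground truth for a(t) = 2t from rest: v(1) = 1 m/s, p(1) = 1/3 m.
    euler, closed = integrate(lambda t: 2.0 * t, t_end=1.0, dt=0.1)
    print("Euler       p, v =", euler)
    print("closed-form p, v =", closed)
```

For a(t) = 2t sampled every 0.1 s, the closed-form update recovers the true position of 1/3 m at t = 1 s, while Euler reaches only about 0.24 m because it holds velocity and acceleration constant within each step.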

"We open source our code. It is on GitHub," said Huang. "Many people have used our code for their systems." In another recent IJRR paper, Huang and his team reformulated the problem in a robocentric frame, that is, relative to the moving robot itself, so that the estimator computes small increments of motion of a robot equipped with visual and inertial sensors. Videos of much of this research can be found on Huang's lab YouTube channel.
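Any method that estimates motion as small increments must eventually chain those increments back into a full trajectory. The sketch below shows that chaining step in 2-D, using hypothetical helper names (`compose`, `trajectory`); it illustrates only how increments expressed in the robot's own frame accumulate into global poses, not the robocentric estimator described in the paper.

```python
import math

# Hypothetical sketch: chaining small relative-motion increments, each given in
# the robot's own frame, into a global 2-D trajectory. This shows pose
# composition only; it is not the estimator from the paper.


def wrap_angle(a):
    """Wrap an angle into the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def compose(pose, increment):
    """Apply an increment (dx, dy, dtheta), expressed in the robot frame,
    to a global pose (x, y, theta)."""
    x, y, theta = pose
    dx, dy, dtheta = increment
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * dx - s * dy, y + s * dx + c * dy, wrap_angle(theta + dtheta))


def trajectory(increments, start=(0.0, 0.0, 0.0)):
    """Return the list of global poses obtained by chaining the increments."""
    poses = [start]
    for inc in increments:
        poses.append(compose(poses[-1], inc))
    return poses


if __name__ == "__main__":
    # Move 1 m forward, then turn left 90 degrees, four times: a unit square.
    for pose in trajectory([(1.0, 0.0, math.pi / 2)] * 4):
        print(tuple(round(v, 6) for v in pose))
```

Because every increment is measured relative to the robot's current pose, an error in any one of them propagates to all later poses, which is part of why accurate increment estimation matters.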

These discoveries could have applications across many kinds of autonomous vehicles, from cars to aerial drones to underwater vessels. Huang's algorithms could also be used to develop augmented reality and virtual reality applications for mobile devices such as smartphones, which already have cameras and inertial sensors on board.

"These sensors are very common, so most mobile devices, smartphones, even drones and vehicles have these sensors," said Huang. "We try to leverage the existing cheap sensors and provide a localization solution, a motion tracking solution."

In 2018 and again in 2019, Huang received a Google Daydream (AR/VR) Faculty Research Award to support this work.

"People see that robots are going to be the next big thing in real life, so that's why industry actually drives this field of research a lot," said Huang.

More information: Zheng Huai et al. Robocentric visual–inertial odometry, The International Journal of Robotics Research (2019). DOI: 10.1177/0278364919853361

Kevin Eckenhoff et al. Closed-form preintegration methods for graph-based visual–inertial navigation, The International Journal of Robotics Research (2019). DOI: 10.1177/0278364919835021

Journal information: International Journal of Robotics Research

Provided by University of Delaware