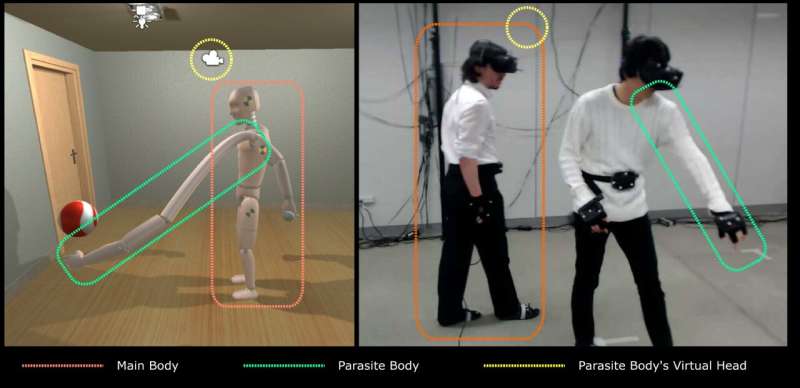

The parasitic body in two contexts. On the left, the VR perspective. On the right, the real space with both participants. The participant on the left (indicated in red) controls the main body. The participant on the right (indicated in green) controls the parasitic limb. The yellow circle indicates the camera position in both contexts. Credit: Takizawa et al.

Recent advancements in robotics have enabled the development of systems that assist humans in a variety of tasks. One type of robotic system that has gained substantial popularity over the past few years is the wearable robotic arm remotely operated by a third party.

While assisting users, these arms need to collect visual feedback and share it with the third-party operator. This feedback can be collected in several ways, the most common of which is placing a camera on the user 'hosting' the robotic arm, also referred to as the main body operator (MBO). This approach can be limiting, however, because the resulting view depends heavily on the movements of the user wearing the system.

With this limitation in mind, researchers at Keio University, RIKEN AIP and the University of Tokyo have recently developed a virtual reality (VR) system to investigate the concept of "body editing," which entails the use of wearable robotic limbs to assist humans in day-to-day tasks. Their research was funded by the Japan Science and Technology Agency (JST)'s Exploratory Research for Advanced Technology (ERATO) program.

"The overarching goal of our recent work was to study body editing as part of our research group, ERATO JIZAI," Adrien Verhulst, one of the researchers who carried out the study, told TechXplore. "Body editing, such as extending the body with wearable assistive robotics technology, is a concept easy to find in some cultural areas, such as in the manga series Parasyte, by Hitoshi Iwaaki. We thought to ourselves, 'Having an artificial being attached to you and assisting you is exactly what we are looking for,' so we tried to loosely replicate the idea in VR."

Verhulst and his colleagues wanted to carry out what could be referred to as a 'shared body' experiment. Instead of proposing a solution to overcome the limitations of current systems for collecting visual feedback, they set out to compare and evaluate existing approaches.

To do this, they developed a VR system that uses OptiTrack technology to track a user's body and a head-mounted display (HMD) to view the direction of both the MBO's body and the robotic arm. In their paper, the researchers refer to the robotic arm's user as the "main body" and to the third-party teleoperator as the "parasitic body."

"It's logical to think that if the 'parasite body' is attached to the main body, then when the main body moves, the parasite is going to end up sick, right?" Verhulst said. "Consequently, the questions we asked ourselves are: How should we adapt the visual feedback collected by the body? Should it be a third view, like in video game? Then where should it be placed: above the main body, on the side, or rather close to the action? Maybe we could share the same view? Or a view depending of the movement of both people?"

Teams at different companies and institutions have presented several approaches to gathering visual feedback from robotic arms. The most prominent among these are the shared view, the third-person view and the close-to-the-action view.

As their names suggest, the shared view matches what the user wearing the robotic arm sees, the third-person view shows what a third person would see if they were standing beside or behind the user, and the close-to-the-action view shows a closeup of the task being completed. The researchers wanted to investigate whether these view modes should depend on the movement of the main body, the parasitic body, or both.

To explore this question, they carried out an experiment with 16 participants using the VR system they developed. This allowed them to gather insights into different approaches for collecting visual feedback. Their findings are still preliminary, however, as the number of people who participated in the study is limited.

"People need to feel oriented in the direction of their limb in order to use it. Try this: If you put a camera in front of you at a weird angle, and can only see yourself from that camera, you'll have more difficulty to move your arm in a given direction," Verhulst explained. "This means that each time the main body moves, the parasite's body reorients itself. Interestingly, we didn't notice a sharp difference in body ownership scores, meaning that no matter the viewpoint, participants felt that they 'owned' their body, and that they were in control of it."

The observations gathered by Verhulst and his colleagues suggest that viewpoint dependency is not that important for body ownership. In other words, whether visual feedback is collected by a camera placed on the robotic arm, on the human user's shoulder, or in between the two, the operator looking through the camera can still achieve a sense of "body ownership."

"The results we collected were very surprising," Verhulst added. "We also did not observe any significant difficulty in terms of workload (i.e. physical load, mental load, effort, time), which, again, hints that the camera dependency doesn't matter in this respect. However, the participants' performance was a bit better in situations where the view depends on both the main body and the parasite, hinting that for maximum effectiveness, it may be better to have the camera placed on the main body, but be motorized to follow the movement of the person controlling the robot arm."
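The three dependency conditions can be made concrete with a minimal sketch. It is an illustration only, not the researchers' implementation: the `Pose` type, the `camera_pose` function, the mode names and the 2D simplification are all assumptions. The "both" branch follows Verhulst's description of a camera placed on the main body but turned to follow the person controlling the arm.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical 2D pose: position in metres, heading (yaw) in radians."""
    x: float
    y: float
    yaw: float


def camera_pose(main: Pose, parasite: Pose, dependency: str) -> Pose:
    """Return an illustrative camera pose for one dependency mode.

    'main'     : the camera copies the main body's position and heading.
    'parasite' : the camera copies the arm operator's position and heading.
    'both'     : the camera sits on the main body but turns with the arm
                 operator (an assumed reading of the 'depends on both' case).
    """
    if dependency == "main":
        return Pose(main.x, main.y, main.yaw)
    if dependency == "parasite":
        return Pose(parasite.x, parasite.y, parasite.yaw)
    if dependency == "both":
        return Pose(main.x, main.y, parasite.yaw)
    raise ValueError(f"unknown dependency mode: {dependency!r}")


# Example: the main body faces along +x; the arm operator looks 90 degrees left.
main_body = Pose(1.0, 2.0, 0.0)
arm_operator = Pose(5.0, 5.0, math.pi / 2)
shared = camera_pose(main_body, arm_operator, "both")
```

In this sketch, the "both" mode keeps the camera anchored to the wearer, so it travels with the arm, while its heading tracks the remote operator, which is one way the operator could stay oriented when the main body moves.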

The team's investigation focused specifically on the perspective dependency of third-party robotic arm operators in tasks that involved finding and reaching objects. In the future, the VR system they developed could also be used to study approaches for gathering visual feedback in more elaborate and realistic tasks.

"The next step is to create an experimental environment with more realistic situations and configurations," Ryo Takizawa, another researcher involved in the study, told TechXplore. "To explore cooperation methods in collaborative work, we are thinking of restricting communication methods and improving VR models, and so on."

The researchers decided to use VR and carry out a virtual experiment because it was an easy and inexpensive solution that did not require the maintenance of advanced robotic systems. In order to ascertain the validity of their findings, however, they will eventually need to compare their results with those achieved using a real robotic arm.

In their future work, Verhulst and his colleagues also plan to carry out a similar experiment in which participants are trained beforehand on the task they will be completing, as they believe that this would lead to different results. In the current study, participants received no training before using the VR platform.

"We need to consider how to design a training task or program in a body-edited and shared body context, especially in terms of how to smooth over, if not enhance, cooperative tasks," Katie Seaborn, another researcher who was involved in the study, told TechXplore. "I've been exploring the notion of same-time, same-motion synchrony, which has been linked to boosts in cooperative performance in other contexts. We're wondering if such a 'shared action' strategy would work in this case, with two people sharing a very unusual body in VR."

More information: Ryo Takizawa et al. Parasitic Body: Exploring Perspective Dependency in a Shared Body with a Third Arm, 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (2019). DOI: 10.1109/VR.2019.8798351

© 2019 Science X Network