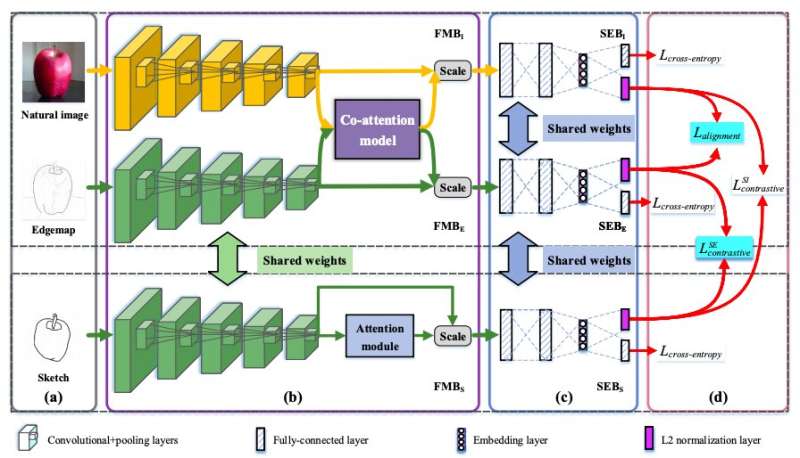

Illustration of the Semi3-Net architecture. Credit: Lei et al.

In recent years, researchers have been developing increasingly advanced computational techniques, such as deep learning algorithms, to complete a variety of tasks. One task that they have been trying to address is known as "sketch-based image retrieval" (SBIR).

SBIR tasks entail retrieving images of a particular object or visual concept from a large collection or database, using sketches made by human users as queries. To automate this task, researchers have been trying to develop tools that can analyze a human sketch and identify images that are related to it or contain the same object.
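As a rough illustration of the retrieval step, the sketch below ranks a small gallery of images by their similarity to a query sketch. It assumes that sketches and images have already been converted into feature vectors of the same length; the feature values, the image names and the choice of cosine similarity are all hypothetical and are not taken from the paper.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(sketch_feature, gallery, top_k=3):
    """Return the top_k (name, score) pairs, most similar image first.

    gallery maps an image name to its feature vector.
    """
    scored = [(name, cosine_similarity(sketch_feature, feat))
              for name, feat in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]

# Hypothetical 3-dimensional features, for illustration only.
gallery = {
    "cat_photo": [0.9, 0.1, 0.0],
    "car_photo": [0.0, 0.2, 0.9],
    "dog_photo": [0.7, 0.6, 0.1],
}
sketch_of_cat = [1.0, 0.2, 0.0]
ranking = retrieve(sketch_of_cat, gallery, top_k=2)
print(ranking)
```

The hard part of SBIR is not this ranking step but producing features in which a sketch and a photograph of the same object end up close together.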

Despite the promising results achieved by some of these tools, developing techniques that perform consistently well on SBIR tasks has so far proved challenging. This is mainly due to the stark visual differences between abstract sketches and real images. For instance, sketches made by humans are often deformed and abstract, which makes them harder to relate to objects in real images.

To overcome this challenge, researchers at Tianjin University and Beijing University of Posts and Telecommunications in China have recently developed a neural network-based architecture that learns discriminative cross-domain feature representations for SBIR tasks. The technique they created, presented in a paper pre-published on arXiv, combines several computational techniques, including semi-heterogeneous feature mapping, joint semantic embedding and a co-attention model.

"The key insight lies with how we cultivate the mutual and subtle relationships amongst the sketches, natural images and edgemaps," the researchers wrote in their paper. "Semi-heterogeneous feature mapping is designed to extract bottom features from each domain, where the sketch and edgemap branches are shared while the natural image branch is heterogeneous to other branches."
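The weight-sharing idea in this quote can be pictured with a toy example. The sketch below is only an assumption-laden stand-in: it replaces the network's feature extractors with a single linear layer followed by ReLU, and the class name `SemiHeterogeneousMapping`, the dimensions and the random weights are all hypothetical. What it does show is the structure described above: the sketch and edgemap branches use one set of weights, while the natural image branch has its own.

```python
import random

def make_linear(in_dim, out_dim, rng):
    """Create a random weight matrix with out_dim rows and in_dim columns."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)]
            for _ in range(out_dim)]

def apply_linear_relu(weights, x):
    """Linear map followed by ReLU; a stand-in for a real feature extractor."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]

class SemiHeterogeneousMapping:
    """Toy three-branch mapping (hypothetical, for illustration only)."""

    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        # One set of weights serves both the sketch and edgemap branches.
        self.shared_weights = make_linear(in_dim, out_dim, rng)
        # The natural image branch has its own, independent weights.
        self.image_weights = make_linear(in_dim, out_dim, rng)

    def sketch_branch(self, x):
        return apply_linear_relu(self.shared_weights, x)

    def edgemap_branch(self, x):
        return apply_linear_relu(self.shared_weights, x)

    def image_branch(self, x):
        return apply_linear_relu(self.image_weights, x)

mapping = SemiHeterogeneousMapping(in_dim=4, out_dim=3)
x = [0.5, -0.2, 0.8, 0.1]
# The same input produces identical features in the two shared branches.
print(mapping.sketch_branch(x) == mapping.edgemap_branch(x))
```

Sharing weights between sketches and edgemaps is plausible because both are line drawings, whereas photographs carry color and texture that a separate branch can handle.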

The model designed by the researchers is a semi-heterogeneous three-way joint embedding network (Semi3-Net). In addition to semi-heterogeneous mapping, it uses a technique known as joint semantic embedding. Semantic embedding allows the network to embed features from different domains (e.g., from sketches or photographs) into a common high-level semantic space. Semi3-Net also incorporates a co-attention model, which is designed to recalibrate features extracted from the two different domains.
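The article does not spell out how the co-attention model recalibrates features, so the following is only one possible reading, not the authors' formulation. In this sketch, a single mask is computed from a sketch feature vector and an image feature vector together, and the same mask then rescales both. The function `co_attention`, the sigmoid gate and the example values are assumptions made for illustration.

```python
import math

def sigmoid(v):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def co_attention(sketch_feat, image_feat):
    """Rescale both feature vectors with one mask computed from both.

    Channels where the two inputs have large values of the same sign get
    mask weights close to 1; a channel where either input is zero gets 0.5.
    """
    mask = [sigmoid(s * i) for s, i in zip(sketch_feat, image_feat)]
    new_sketch = [m * s for m, s in zip(mask, sketch_feat)]
    new_image = [m * i for m, i in zip(mask, image_feat)]
    return new_sketch, new_image, mask

# Hypothetical features: only the first channel is active in both domains.
sketch_feat = [2.0, 0.0, 1.0]
image_feat = [3.0, 5.0, 0.0]
new_sketch, new_image, mask = co_attention(sketch_feat, image_feat)
print(mask)
```

Because the mask depends on both inputs, a channel that is strong only in the photograph (the second one here) is damped relative to a channel that both domains agree on.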

Finally, the researchers designed a hybrid-loss mechanism that can calculate the correlation between sketches, edgemaps and natural images. This mechanism allows the Semi3-Net model to learn representations that are invariant across the two domains (i.e., sketches and images taken using cameras).
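To make the idea of a hybrid loss concrete, here is a minimal sketch that adds two terms: an alignment term that pulls a sketch feature toward an edgemap feature, and a contrastive term that pulls a sketch toward images of the same class and pushes it away from images of other classes. The specific terms, the weights `w_align` and `w_contrast`, the margin and the function names are assumptions chosen for illustration; the article does not list the loss components the authors used.

```python
import math

def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def contrastive_loss(sketch, image, same_class, margin=1.0):
    """Small for close same-class pairs and for distant different-class pairs."""
    d = math.sqrt(squared_distance(sketch, image))
    if same_class:
        return d ** 2
    return max(0.0, margin - d) ** 2

def alignment_loss(sketch, edgemap):
    """Penalize the gap between a sketch feature and an edgemap feature."""
    return squared_distance(sketch, edgemap)

def hybrid_loss(sketch, edgemap, image, same_class,
                w_align=1.0, w_contrast=1.0):
    """Weighted sum of the two terms (weights are hypothetical)."""
    return (w_align * alignment_loss(sketch, edgemap)
            + w_contrast * contrastive_loss(sketch, image, same_class))

# Hypothetical 2-dimensional embeddings.
sketch = [1.0, 0.0]
edgemap = [1.0, 0.0]
image = [1.0, 0.0]
matching = hybrid_loss(sketch, edgemap, image, same_class=True)
mismatched = hybrid_loss(sketch, edgemap, image, same_class=False)
print(matching, mismatched)
```

With identical embeddings, the loss is zero when the image belongs to the same class as the sketch and positive when it does not, which is the behavior a training procedure would use to separate classes in the shared space.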

The researchers trained and evaluated Semi3-Net on data from Sketchy and TU-Berlin Extension, two datasets that are widely used in studies focusing on SBIR tasks. The Sketchy database contains 75,471 sketches and 12,500 natural images, while TU-Berlin Extension contains 20,000 hand-drawn sketches and 204,489 natural images.

In the experiments conducted by the researchers so far, Semi3-Net has outperformed other state-of-the-art models for SBIR. The team now plans to continue working on the model to further enhance its performance, and may also adapt it to other problems that require connecting data from different domains.

"In the future, we will focus on extending the proposed cross-domain network to fine-grained image retrieval and learning the correspondence of the fine-grained details for sketch-image pairs," the researchers wrote in their paper.

More information: Semi-heterogeneous three-way joint embedding network for sketch-based image retrieval. arXiv:1911.04470 [cs.CV]. arxiv.org/abs/1911.04470

© 2019 Science X Network