March 5, 2020 report

The Moral Machine reexamined: Forced-choice testing does not reveal true wishes

A pair of researchers at The University of North Carolina at Chapel Hill is challenging the findings of the team that published a paper called "The Moral Machine experiment" two years ago. Yochanan Bigman and Kurt Gray claim the results were flawed because the experiment did not give test-takers the option of treating potential victims equally.

Back in 1967, British philosopher Philippa Foot described the "Trolley Problem," a scenario in which a trolley races toward people on the tracks who are about to be killed. The trolley driver has the option of taking a side track before hitting them; however, that track is occupied as well. The problem poses moral quandaries for the driver, such as whether it is more reasonable to kill five people or two, or whether it is preferable to kill old people rather than young people.

Two years ago, a team at MIT revisited this problem in the context of programming a driverless vehicle. If you were the programmer instead of the trolley driver, how would you program the car to respond under a variety of conditions? The team reported that, as expected, most volunteers who took the test would program the car to run over animals rather than people, old people rather than young people, men rather than women, etc. In this new effort, Bigman and Gray challenge those findings, suggesting that the answers did not truly represent the wishes of the volunteers. They argue that not giving the test-takers a third option, to treat everybody equally, skewed the results.

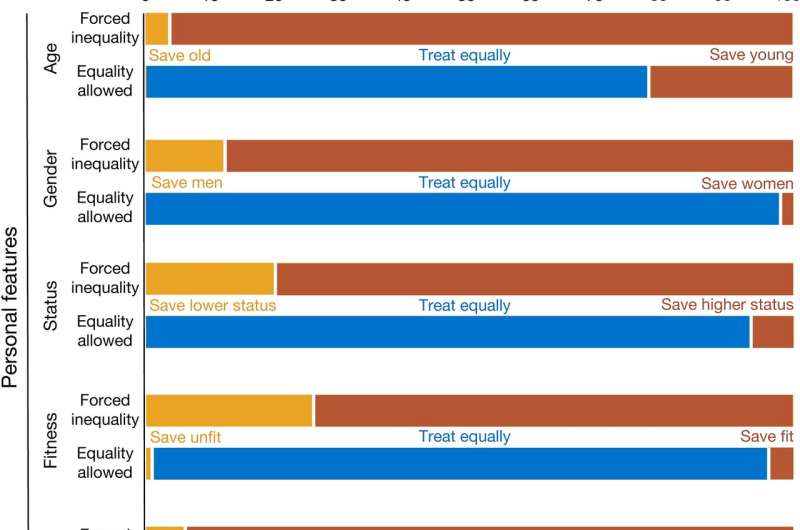

To find out whether a third answer would produce more egalitarian results, the researchers conducted an experiment similar to the one by MIT, but gave users the option of showing no preference over who would be run over and who would be spared. They found that most volunteers chose the third option in most of the scenarios. They suggest their findings indicate that forced-choice testing does not reveal the true wishes of the general public.

More information: Yochanan E. Bigman et al. Life and death decisions of autonomous vehicles, Nature (2020). DOI: 10.1038/s41586-020-1987-4

© 2020 Science X Network