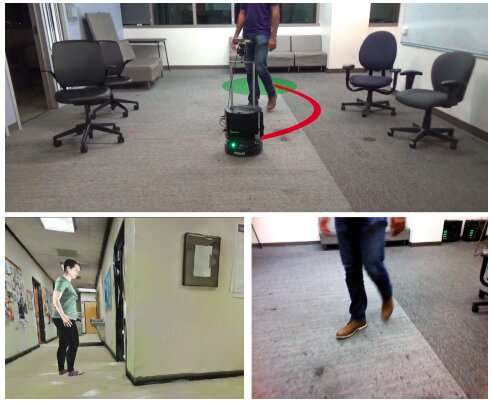

(Top) An autonomous visual navigation scenario considered by the researchers, in a previously unknown, indoor environment with humans, using monocular RGB images (bottom right). To teach machines how to navigate indoor environments containing humans, the researchers created HumANav, a dataset that allows for photorealistic rendering in simulated environments (e.g. bottom left). Credit: Tolani et al.

In order to tackle the tasks that they are designed to complete, mobile robots should be able to navigate real world environments efficiently, avoiding humans or other obstacles in their surroundings. While static objects are typically fairly easy for robots to detect and circumvent, avoiding humans can be more challenging, as it entails predicting their future movements and planning accordingly.

Researchers at the University of California, Berkeley, have recently developed a new framework that could enhance robot navigation among humans in indoor environments such as offices, homes or museums. Their model, presented in a paper pre-published on arXiv, was trained on a newly compiled dataset of photorealistic images called HumANav.

"We propose a novel framework for navigation around humans that combines learning-based perception with model-based optimal control," the researchers wrote in their paper.

The new framework, dubbed LB-WayPtNav-DH, has three key components: a perception module, a planning module and a control module. The perception module is based on a convolutional neural network (CNN) that was trained with supervised learning to map the robot's visual input to a waypoint (i.e., the next desired state).

The waypoint mapped by the CNN is then fed to the framework's planning and control modules. Combined, these two modules ensure that the robot moves to its target location safely, avoiding any obstacles and humans in its surroundings.
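To make the division of labor between the three modules concrete, here is a minimal Python sketch of a perception, planning and control loop. It is not the researchers' implementation: `perceive` is a hypothetical stand-in for the trained CNN (it ignores the image and simply aims at the goal), `plan` uses straight-line interpolation, and `control` is a simple proportional controller for a unicycle-style robot. All function names, gains and step sizes are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Waypoint:
    x: float      # metres, in the robot's starting frame
    y: float
    theta: float  # desired heading, radians


def perceive(image, goal_xy):
    """Hypothetical stand-in for the CNN: ignores the image and aims at the goal.
    A trained network would use the image to pick a waypoint that avoids people."""
    gx, gy = goal_xy
    return Waypoint(gx, gy, math.atan2(gy, gx))


def plan(waypoint, steps=10):
    """Assumed planner: evenly spaced points on a straight line to the waypoint."""
    return [(waypoint.x * i / steps, waypoint.y * i / steps)
            for i in range(1, steps + 1)]


def control(pose, target, k_lin=1.0, k_ang=2.0):
    """Assumed proportional controller: returns (linear, angular) velocity."""
    x, y, theta = pose
    dx, dy = target[0] - x, target[1] - y
    err = math.atan2(dy, dx) - theta
    err = math.atan2(math.sin(err), math.cos(err))  # wrap to [-pi, pi]
    return k_lin * math.hypot(dx, dy), k_ang * err


def step(pose, v, omega, dt=0.1):
    """Advance a unicycle model by one time step."""
    x, y, theta = pose
    return (x + v * math.cos(theta) * dt,
            y + v * math.sin(theta) * dt,
            theta + omega * dt)


def navigate(image, goal_xy):
    """Run perception once, then track the planned path point by point."""
    waypoint = perceive(image, goal_xy)
    pose = (0.0, 0.0, 0.0)
    for target in plan(waypoint):
        for _ in range(20):
            v, omega = control(pose, target)
            pose = step(pose, v, omega)
    return pose
```

Calling `navigate(None, (2.0, 1.0))` drives the simulated robot from the origin to a point close to the goal. In a real system the perception step would be repeated as new camera frames arrive, so that the waypoint can shift when a person moves into the robot's path.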

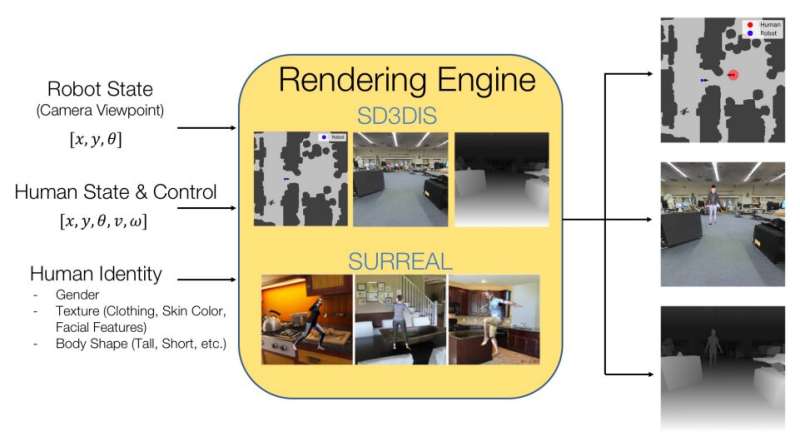

Image explaining what the HumANav dataset contains and how it achieves photorealistic rendering of indoor environments containing humans. Credit: Tolani et al.

The researchers trained their CNN on images from a dataset they compiled, dubbed HumANav. HumANav contains photorealistic rendered images of simulated building environments in which humans are moving around. The human figures are adapted from another dataset called SURREAL and comprise 6,000 walking, textured human meshes, organized by body shape, gender and velocity.

"The proposed framework learns to anticipate and react to peoples' motion based only on a monocular RGB image, without explicitly predicting future human motion," the researchers wrote in their paper.

The researchers evaluated LB-WayPtNav-DH in a series of experiments, both in simulation and in the real world. In the real-world experiments, they deployed it on a TurtleBot 2, a low-cost mobile robot with open-source software. The researchers report that the navigation framework generalizes well to unseen buildings, effectively circumventing humans in both simulated and real-world environments.

Credit: Varun Tolani MS


"Our experiments demonstrate that combining model-based control and learning leads to better and more data-efficient navigational behaviors as compared to a purely learning based approach," the researchers wrote in their paper.

The new framework could ultimately be applied to a variety of mobile robots, enhancing their navigation in indoor environments. So far, the approach has performed well, with policies developed in simulation transferring to real-world environments.

In future studies, the researchers plan to train their framework on images of more complex or crowded environments. They would also like to broaden the training dataset they compiled to include a more diverse set of images.

More information: Visual navigation among humans with optimal control as a supervisor. arXiv:2003.09354 [cs.RO]. arxiv.org/abs/2003.09354

© 2020 Science X Network