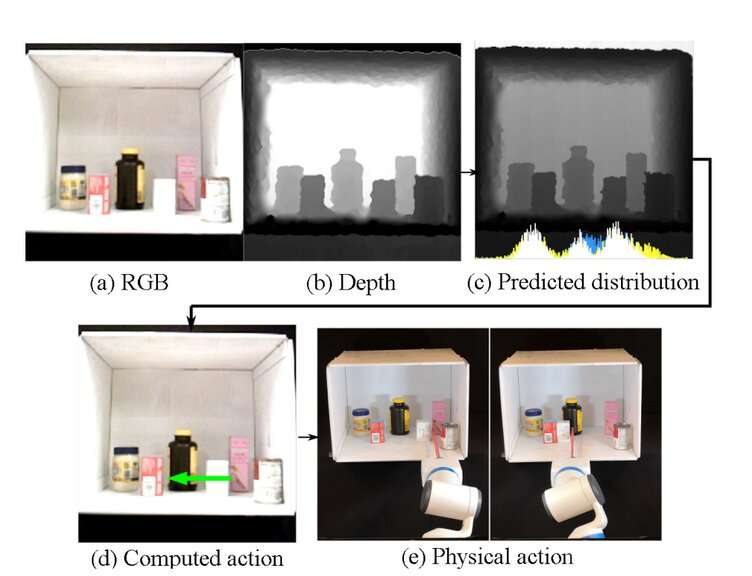

Credit: Huang Huang et al.

Artificial intelligence is being applied to virtually every aspect of our work and recreational lives. From determining calculations for the construction of towering skyscrapers to designing and building cruise ships the size of football fields, AI is increasingly playing a key role in the most massive projects.

But sometimes, all we want to do is move a can of beans.

According to a recently published abstract, researchers at the University of California, Berkeley, have developed a mechanism that "couples a perception pipeline predicting a target object occupancy support distribution with a mechanical search policy that sequentially selects occluding objects to push to the side to reveal the target as efficiently as possible."

In other words, they've trained a robot to find and move items on a shelf.

Teams of researchers at both Google and Berkeley have been working on ways to apply AI to this most mundane of activities. In fact, better ways of finding and selecting objects matter in industrial plants, scientific laboratories, health care, grocery stores, computer components and countless other commercial and manufacturing settings.

"For instance, a service robot at a pharmacy or hospital may need to find supplies from a cabinet, an industrial robot may need to find kitting tools from shelves in warehouses, or a service robot in a retail store may need to search shelves for requested products from customers," stated Huang Huang and nine of his Berkeley colleagues in a white paper titled, "Mechanical Search on Shelves using Lateral Access X-RAY."

The paper reports that while robotics has focused for years on mechanical searches in "unstructured clutter," studies in more organized locations such as on shelves or in cabinets and closets have been scarce. Such environments contain conditions that introduce novel problems to robotic search-and-detect missions. Limited space, complex maneuvers to reach items and obstruction of view are some of the challenges robotics projects must overcome.

The LAX-RAY, as the Berkeley system is called, is trained to search for hidden or partially obstructed objects and determine a safe way to reach them.

A video demonstration of LAX-RAY shows a robotic arm exploring objects lined up in a confined area of a shelf, moving items in the front row to create a path for access to items located in the back.
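To make the idea of pushing occluding objects aside to reveal a target more concrete, here is a minimal Python sketch. It is a toy illustration, not the Berkeley team's implementation. It assumes a one-dimensional shelf divided into columns, a fully hidden target of known width, and boxes that slide until they touch a wall or a neighbor. The names `Box`, `prune`, `support_area`, `push` and `best_push` are hypothetical, and the greedy rule (pick the push that leaves the fewest columns where the target could still hide) is a simplification chosen for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Box:
    name: str
    left: int    # first shelf column the box covers
    width: int   # number of columns it covers

    @property
    def right(self) -> int:
        return self.left + self.width  # exclusive end column


def hidden_mask(boxes, shelf_width):
    """True for every column the camera cannot see behind."""
    mask = [False] * shelf_width
    for box in boxes:
        for col in range(box.left, box.right):
            mask[col] = True
    return mask


def prune(candidates, boxes, shelf_width, target_width):
    """Keep only target placements (start columns) that are still fully hidden."""
    mask = hidden_mask(boxes, shelf_width)
    return {s for s in candidates if all(mask[s:s + target_width])}


def support_area(candidates, target_width):
    """Number of columns where the target could still be."""
    return len({s + k for s in candidates for k in range(target_width)})


def push(boxes, index, direction, shelf_width):
    """Slide one box left (-1) or right (+1) until it meets a wall or a neighbor."""
    box = boxes[index]
    others = boxes[:index] + boxes[index + 1:]
    if direction < 0:
        new_left = max((b.right for b in others if b.right <= box.left), default=0)
    else:
        stop = min((b.left for b in others if b.left >= box.right), default=shelf_width)
        new_left = stop - box.width
    return boxes[:index] + [replace(box, left=new_left)] + boxes[index + 1:]


def best_push(boxes, candidates, shelf_width, target_width):
    """Greedy choice: the push that leaves the smallest support area
    in the case where the target is not revealed by that push."""
    best = None
    for index in range(len(boxes)):
        for direction in (-1, +1):
            moved = push(boxes, index, direction, shelf_width)
            remaining = prune(candidates, moved, shelf_width, target_width)
            area = support_area(remaining, target_width)
            if best is None or area < best[0]:
                best = (area, boxes[index].name, direction)
    return best


# Toy shelf: 10 columns, two boxes in the front row, target two columns wide.
shelf_width, target_width = 10, 2
boxes = [Box("A", 0, 3), Box("B", 6, 2)]
all_starts = set(range(shelf_width - target_width + 1))
candidates = prune(all_starts, boxes, shelf_width, target_width)
area, name, direction = best_push(boxes, candidates, shelf_width, target_width)
print(name, direction, area)
```

In this toy layout the target could initially be hiding in five columns. The sketch picks box A pushed to the right, which would leave only two columns unexplored if the target is not found behind A.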

Experimenters generated 800 random environments ranging from simple to more complex arrangements. The LAX-RAY was connected to a depth-sensing camera that calculated distances and determined locations of objects. Researchers report an 87 percent success rate.

"We work within a shelf as opposed to the infinite planar workspace X-RAY assumes," the report states, explaining that rather than grasping objects "along an infinite plane," the robot considers alternative, safer motions: "pushing actions in a tightly constrained shelf."

In the Google project, data collected from video footage by a touch-sensitive robot sensor is converted into a physical representation of the region. The robot uses that representation to push items aside without damaging them.

Future experimentation by Google and Berkeley will include the use of suction cups to grasp objects, as well as detecting, grasping and moving non-rigid items such as cloths.

More information: Mechanical Search on Shelves using Lateral Access X-RAY, arXiv:2011.11696 [cs.RO] arxiv.org/abs/2011.11696

© 2020 Science X Network