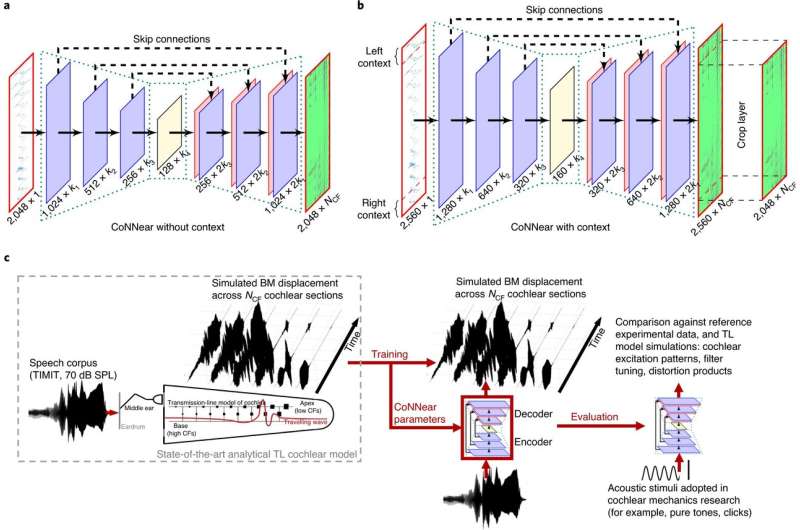

CoNNear is a fully convolutional encoder-decoder neural network with strided convolutions and skip connections that maps audio input to 201 basilar-membrane (BM) vibration outputs, one for each of the N_CF simulated cochlear sections, in the time domain. a,b, CoNNear architectures with (a) and without (b) context. The final CoNNear model has four encoder and four decoder layers, uses context and includes a tanh activation function between the CNN layers. c, An overview of the model training and evaluation procedure. The CoNNear parameters were trained on reference simulations of an analytical transmission-line (TL) model in response to a speech corpus, whereas the model was evaluated using simple acoustic stimuli commonly adopted in cochlear mechanics studies. Credit: Nature Machine Intelligence (2021). DOI: 10.1038/s42256-020-00286-8
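To make the caption's encoder-decoder description more concrete, here is a minimal shape-tracing sketch in plain Python. It is not the authors' implementation: it only tracks how the number of time steps and channels might evolve through four strided encoder layers and four mirrored decoder layers with skip connections. The stride of 2, the 64 filters per layer, the 2,048-sample input window and the function name `trace_shapes` are all illustrative assumptions; only the four layers and the 201 output channels come from the caption. Context samples and the tanh activations are ignored.

```python
def trace_shapes(n_samples, n_layers=4, stride=2, n_filters=64, n_outputs=201):
    """Return (name, time_steps, channels) for each stage of the sketch.

    Assumes "same"-style padding, so only the stride changes the length.
    stride and n_filters are hypothetical values, not taken from the paper.
    """
    factor = stride ** n_layers
    if n_samples % factor != 0:
        raise ValueError(f"n_samples must be a multiple of {factor}")

    shapes = [("input", n_samples, 1)]
    length = n_samples
    encoder_lengths = []

    # Encoder: every strided convolution shortens the time axis.
    for i in range(1, n_layers + 1):
        length //= stride
        encoder_lengths.append(length)
        shapes.append((f"encoder {i}", length, n_filters))

    # Decoder: every transposed convolution lengthens the time axis again.
    for i in range(1, n_layers + 1):
        length *= stride
        is_last = i == n_layers
        channels = n_outputs if is_last else n_filters
        shapes.append((f"decoder {i}", length, channels))
        if not is_last:
            # Skip connection: concatenate the encoder output that has the
            # same number of time steps, doubling the channel count.
            assert length in encoder_lengths
            shapes.append((f"skip concat {i}", length, 2 * n_filters))

    return shapes


if __name__ == "__main__":
    for name, steps, channels in trace_shapes(2048):
        print(f"{name:>14}: {steps:5d} time steps x {channels:3d} channels")
```

Under these assumptions the last decoder layer returns to the input length and emits 201 channels, one waveform per simulated cochlear section, which is the audio-to-BM mapping the caption describes.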

A trio of researchers at Ghent University has combined a convolutional neural network with computational neuroscience to create a model that simulates human cochlear mechanics. In their paper published in Nature Machine Intelligence, Deepak Baby, Arthur Van Den Broucke and Sarah Verhulst describe how they built their model and the ways they believe it can be used.

Over the past several decades, great strides have been made in speech and voice recognition technology. Customers are routinely served by phone-based agents, for example, and voice recognition and response systems on smartphones have become ubiquitous. But despite appearances, none of these systems operates in real time; each relies on hardware and software that must first process what is heard. The researchers suggest that the problem with current devices is the complexity of the computations involved. To address it, they have created a model of human hearing that melds the best features of convolutional neural networks with computational neuroscience.

Hearing in humans comes courtesy of the various parts of the ear. Sound enters the ear canal and strikes the eardrum. The eardrum vibrates in response, passing the vibrations to the small bones of the middle ear, which in turn create ripples in the fluid of the cochlea in the inner ear. That fluid stirs the hair cells that line the cochlea. The movement of the hair cells opens ion channels, which generate signals that are sent to the brain stem. The researchers in Belgium created an AI system that was taught to encode sound and then decode it in a similar way, training it on the output of a model based on human anatomy. They named their system CoNNear, a working model of the cochlea.

Testing showed that the system can transform acoustic waveforms sampled at 20 kHz into cochlear basilar-membrane waveforms in real time, besting state-of-the-art traditional models by a wide margin. According to the paper, CoNNear runs about 2,000 times faster than state-of-the-art biophysically realistic cochlear models. The researchers suggest their findings could lay the groundwork for a new generation of human-like machine hearing, augmented hearing and speech recognition devices.
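What "real time" means at a 20-kHz sampling rate can be shown with a little arithmetic: a window of N samples lasts N / 20,000 seconds, and a model keeps up only if it processes that window in less time than the window lasts. The sketch below illustrates this. The 2,048-sample window and the 0.0512-second processing time are hypothetical values chosen for illustration, not measurements from the paper, and the function names are made up for this example.

```python
SAMPLE_RATE_HZ = 20_000  # sampling rate quoted in the article


def window_duration_s(n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Duration in seconds of an audio window of n_samples samples."""
    return n_samples / sample_rate_hz


def real_time_factor(processing_time_s, n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Processing time divided by audio duration; below 1.0 is faster than real time."""
    return processing_time_s / window_duration_s(n_samples, sample_rate_hz)


if __name__ == "__main__":
    n = 2048          # hypothetical window length in samples
    elapsed = 0.0512  # hypothetical processing time in seconds
    print(f"window lasts {window_duration_s(n):.4f} s")
    print(f"real-time factor: {real_time_factor(elapsed, n):.2f}")
```

A real-time factor of 1.0 is the break-even point, so a model that is thousands of times faster than a reference simulator can move from far above this threshold to below it.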

More information: Deepak Baby et al. A convolutional neural-network model of human cochlear mechanics and filter tuning for real-time applications, Nature Machine Intelligence (2021). DOI: 10.1038/s42256-020-00286-8

Journal information: Nature Machine Intelligence

© 2021 Science X Network