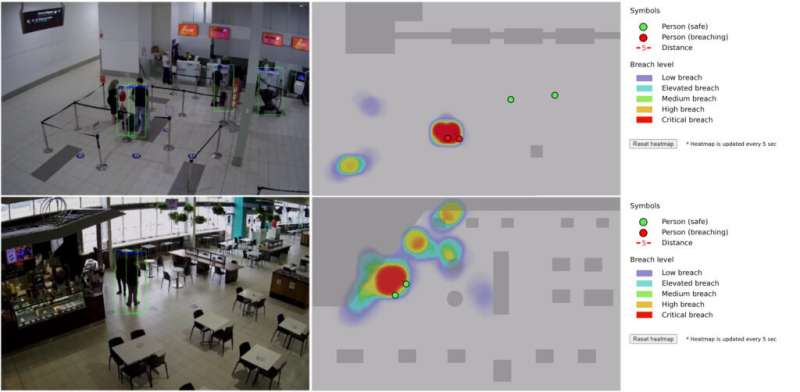

AI detection in action, monitoring the check-in and departure area cafe. The system can also generate a heat map of cumulative breaches. Credit: Griffith University

Griffith University researchers have developed an AI video surveillance system to detect social distancing breaches in an airport without compromising privacy.

By keeping image processing gated to a local network of cameras, the team bypassed the traditional need to store sensitive data on a central system.

Professor Dian Tjondronegoro from Griffith Business School says data privacy is one of the biggest concerns with this technology because the system has to constantly observe people's activities to be effective.

"These adjustments are added to the central decision-making model to improve accuracy."

Published in Information Technology & People, the case study was completed at Gold Coast Airport which, pre-COVID-19, had 6.5 million passengers annually, with 17,000 passengers on-site daily. Hundreds of cameras cover 290,000 square meters, an area that includes hundreds of shops and more than 40 check-in points.

Researchers tested several cutting-edge algorithms, each lightweight enough for local computation, across nine cameras in three related case studies: automatic people detection, automatic crowd counting and social distance breach detection. The aim was to find the best balance of performance without sacrificing accuracy and reliability.

"Our goal was to create a system capable of real-time analysis with the ability to detect and automatically notify airport staff of social distancing breaches," Professor Tjondronegoro said.

Three cameras were used for the automatic social distance breach detection testing, covering the check-in area, food court and waiting area. Two people were employed to compare live video feeds with the AI analysis results to determine whether people marked as red were in breach.
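To illustrate the general idea of marking people as red when they stand too close together, here is a minimal sketch in Python. It is not the researchers' method: it assumes each detected person has already been mapped to a ground-plane position in meters, and the function name `find_breaches` and the 1.5 m threshold are hypothetical choices made for illustration only.

```python
from itertools import combinations
from math import dist

# Assumed threshold in meters; the article does not state the value used.
MIN_DISTANCE_M = 1.5


def find_breaches(positions, min_distance=MIN_DISTANCE_M):
    """Return the indices of people closer than min_distance to someone else.

    positions: list of (x, y) ground-plane coordinates in meters,
    one per detected person (a hypothetical input format).
    """
    in_breach = set()
    # Compare every pair of detected people exactly once.
    for (i, p), (j, q) in combinations(enumerate(positions), 2):
        if dist(p, q) < min_distance:
            in_breach.update((i, j))
    return in_breach


# Example: two people 1 m apart, and a third person far away.
people = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
breaches = find_breaches(people)
labels = ["red" if i in breaches else "green" for i in range(len(people))]
print(labels)
```

In this sketch the first two people would be marked red and the third green. A pairwise comparison like this is simple enough to run on a small local device, though a real deployment would also need to convert camera pixels to ground distances.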

Researchers found that camera angles affect the AI's ability to detect and track people's movements in a public area, and they recommend angling cameras between 45 and 60 degrees.

Professor Tjondronegoro said their AI-enabled system design was flexible enough to allow humans to double-check results, reducing data bias and improving transparency in how the system works.

"The system can scale up in the future by adding new cameras and be adjusted for other purposes. Our study shows responsible AI design can and should be useful for future developments of this application of technology."

More information: Nehemia Sugianto et al, Privacy-preserving AI-enabled video surveillance for social distancing: responsible design and deployment for public spaces, Information Technology & People (2021). DOI: 10.1108/ITP-07-2020-0534

Provided by Griffith University