September 10, 2021 feature

A framework to evaluate techniques for simulating physical systems

The simulation of physical systems using computing tools has numerous valuable applications, both in research and in real-world settings. Most existing simulation tools are based on physics theory and numerical calculations. In recent years, however, computer scientists have been trying to develop complementary techniques that are instead based on the analysis of large amounts of data.

Machine learning (ML) algorithms are particularly promising for the analysis of data. Many computer scientists have therefore developed ML techniques that can learn to simulate physical systems by analyzing experimental data.

While some of these tools have achieved remarkable results, evaluating them and comparing them to other approaches can be challenging, due to the huge variety of existing methods and the differences in the tasks they are designed to complete. As a result, these tools have so far been evaluated using different frameworks and metrics.

Researchers at New York University have developed a new benchmark suite that can be used to evaluate models for simulating physical systems. This suite, presented in a paper pre-published on arXiv, can be tailored, adapted and extended to evaluate a variety of ML-based simulation techniques.

"We introduce a set of benchmark problems to take a step toward unified benchmarks and evaluation protocols," the researchers wrote in their paper. "We propose four representative physical systems, as well as a collection of both widely used classical time integrators and representative data-driven methods (kernel-based, MLP, CNN, nearest neighbors)."

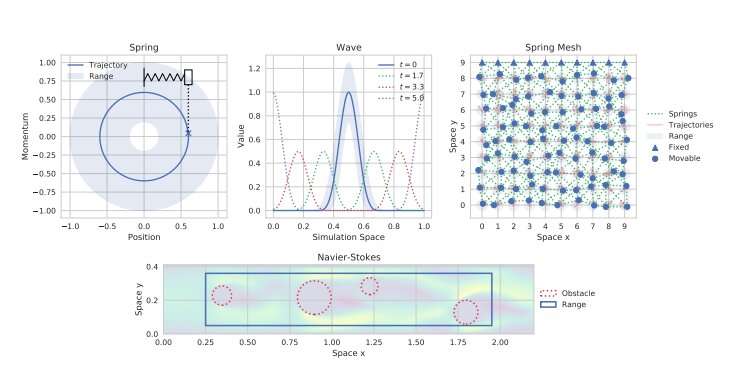

The benchmark suite developed by the researchers contains simulations of four simple physical models with training and evaluation setups. The four systems are: a single oscillating spring, a one-dimensional (1D) linear wave equation, a Navier-Stokes flow problem and a mesh of damped springs.
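To make the first two systems more concrete, here is a minimal sketch of how their states and time derivatives might be written down. It is an illustration only and is not taken from the researchers' code: the function names, the unit mass and stiffness, the periodic boundary and the grid resolution `n_grid` are all assumptions.

```python
import numpy as np

def spring_rhs(state):
    """Time derivative of a single spring (assumed unit mass and stiffness).

    state = (q, p): position and momentum, so the state has 2 components.
    """
    q, p = state
    return np.array([p, -q])

def wave_rhs(state, dx, c=1.0):
    """Time derivative of the 1D wave equation u_tt = c^2 u_xx.

    Assumes a periodic grid. state stacks the displacement u and the
    velocity v, each sampled at n grid points, so it has 2 * n components.
    """
    n = state.size // 2
    u, v = state[:n], state[n:]
    # Second-order central difference for u_xx with periodic wrap-around.
    u_xx = (np.roll(u, -1) - 2.0 * u + np.roll(u, 1)) / dx**2
    return np.concatenate([v, c**2 * u_xx])

n_grid = 125  # hypothetical resolution, chosen only for illustration
x = np.linspace(0.0, 1.0, n_grid, endpoint=False)
dx = x[1] - x[0]

spring_state = np.array([1.0, 0.0])
wave_state = np.concatenate([np.sin(2 * np.pi * x), np.zeros(n_grid)])

print("spring state size:", spring_state.size)
print("wave state size:", wave_state.size)
```

Under these assumptions the spring is described by two numbers, while the discretized wave needs two numbers per grid point, so its state grows with the resolution.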

"These systems represent a progression of complexity," the researchers explained in their paper. "The spring system is a linear system with low-dimensional space of initial conditions and low-dimensional state; the wave equation is a low-dimensional linear system with a (relatively) high-dimensional state space after discretization; the Navier-Stokes equations are nonlinear and we consider a setup with low-dimensional initial conditions and high-dimensional state space; finally, the spring mesh system has both high-dimensional initial conditions as well as high-dimensional states."

In addition to simulations of these simple physical systems, the suite developed by the researchers includes a collection of simulation approaches and tools. These include both traditional numerical approaches and data-driven ML techniques.
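The difference between the two families of approaches can be sketched on the single spring. The example below is an assumption-laden illustration, not the suite's implementation: it steps the system with two classical integrators (forward Euler and leapfrog) and with a hypothetical data-driven variant in which a 1-nearest-neighbor lookup over sampled states stands in for the true derivative. All function names, the training set size and the step size are made up for the example.

```python
import numpy as np

def spring_rhs(state):
    """True time derivative of a unit-mass, unit-stiffness spring."""
    q, p = state
    return np.array([p, -q])

def forward_euler(rhs, state, dt, n_steps):
    """Classical explicit Euler: x_{k+1} = x_k + dt * f(x_k)."""
    traj = [state]
    for _ in range(n_steps):
        state = state + dt * rhs(state)
        traj.append(state)
    return np.array(traj)

def leapfrog(state, dt, n_steps):
    """Leapfrog (kick-drift-kick) for the spring, where the force is -q."""
    q, p = state
    traj = [np.array([q, p])]
    for _ in range(n_steps):
        p = p - 0.5 * dt * q   # half kick
        q = q + dt * p         # full drift
        p = p - 0.5 * dt * q   # half kick
        traj.append(np.array([q, p]))
    return np.array(traj)

def make_knn_rhs(train_states, train_derivs):
    """Data-driven stand-in: return the derivative of the nearest sample."""
    def rhs(state):
        idx = np.argmin(np.sum((train_states - state) ** 2, axis=1))
        return train_derivs[idx]
    return rhs

# Hypothetical training data: random states paired with true derivatives.
rng = np.random.default_rng(0)
train_states = rng.uniform(-1.5, 1.5, size=(2000, 2))
train_derivs = np.array([spring_rhs(s) for s in train_states])
knn_rhs = make_knn_rhs(train_states, train_derivs)

x0 = np.array([1.0, 0.0])
dt, n_steps = 0.01, 200

euler_traj = forward_euler(spring_rhs, x0, dt, n_steps)
leap_traj = leapfrog(x0, dt, n_steps)
knn_traj = forward_euler(knn_rhs, x0, dt, n_steps)

def energy(traj):
    """Total energy 0.5 * (q^2 + p^2) along a trajectory."""
    return 0.5 * np.sum(traj**2, axis=1)

print("Euler energy drift:   ", energy(euler_traj)[-1] - 0.5)
print("Leapfrog energy drift:", energy(leap_traj)[-1] - 0.5)
print("kNN energy drift:     ", energy(knn_traj)[-1] - 0.5)
```

The learned variant here only replaces the derivative function and reuses the same Euler stepping. That is one possible design among several, and a data-driven method could instead predict the next state directly.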

Using the suite, scientists can carry out systematic and objective evaluations of their ML simulation techniques, testing their accuracy, efficiency and stability. This allows them to reliably compare the performance of tools with different characteristics, which would otherwise be difficult to compare. The benchmark framework can also be configured and extended to consider other tasks and computational approaches.
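As a rough illustration of what such an evaluation could look like, the sketch below compares a forward Euler rollout of the spring against its exact solution at two step sizes. The per-step relative error and the blow-up check are assumptions made for this example and are not necessarily the metrics defined in the paper; the helper names and the bound of 1e3 are likewise hypothetical.

```python
import numpy as np

def exact_spring(x0, t):
    """Exact solution of the unit spring at times t (array), shape (len(t), 2)."""
    q0, p0 = x0
    q = q0 * np.cos(t) + p0 * np.sin(t)
    p = -q0 * np.sin(t) + p0 * np.cos(t)
    return np.stack([q, p], axis=1)

def euler_rollout(x0, dt, n_steps):
    """Forward Euler rollout of the unit spring, shape (n_steps + 1, 2)."""
    state = np.asarray(x0, dtype=float)
    traj = [state]
    for _ in range(n_steps):
        q, p = state
        state = state + dt * np.array([p, -q])
        traj.append(state)
    return np.array(traj)

def relative_error(pred, ref, eps=1e-12):
    """Per-step relative L2 error between predicted and reference states."""
    num = np.linalg.norm(pred - ref, axis=1)
    den = np.linalg.norm(ref, axis=1) + eps
    return num / den

def is_stable(pred, bound=1e3):
    """Crude stability check: all values finite and below an assumed bound."""
    return bool(np.all(np.isfinite(pred)) and np.max(np.abs(pred)) < bound)

x0 = (1.0, 0.0)
t_final = 5.0
final_errors = {}

for dt in (0.1, 0.01):
    n_steps = int(round(t_final / dt))
    t = dt * np.arange(n_steps + 1)
    ref = exact_spring(x0, t)
    pred = euler_rollout(x0, dt, n_steps)
    final_errors[dt] = relative_error(pred, ref)[-1]
    print(f"dt={dt}: final relative error={final_errors[dt]:.4f}, "
          f"stable={is_stable(pred)}")
```

The same error and stability functions could be applied to the output of any simulator, classical or learned, as long as a trusted reference trajectory is available on the same time grid.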

"We envision three ways in which the results of this work might be used," the researchers wrote in their paper. "First, the datasets developed can be used for training and evaluating new machine learning techniques in this area. Secondly, the simulation software can be used to generate new datasets from these systems of different sizes, different initial condition dimensionality and distribution, while the training software could be used to assist in conducting further experiments, and thirdly, some of the trends seen in our results may help inform the design of future machine learning tasks for simulation."

The new benchmark suite introduced by this team of researchers could soon help to improve the evaluation of both existing and emerging techniques for simulating physical systems. Currently, however, it does not cover all possible model configurations and settings, so it could be expanded further in the future.

More information: An extensible benchmark suite for learning to simulate physical systems. arXiv:2108.07799 [cs.LG]. arxiv.org/abs/2108.07799

© 2021 Science X Network