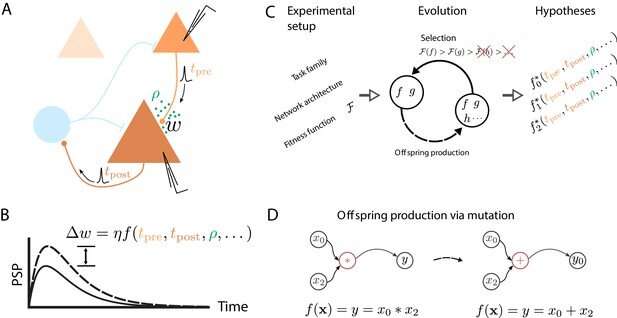

Artificial evolution of synaptic plasticity rules in spiking neuronal networks. (A) Sketch of cortical microcircuits consisting of pyramidal cells (orange) and inhibitory interneurons (blue). Stimulation elicits action potentials in pre- and postsynaptic cells, which, in turn, influence synaptic plasticity. (B) Synaptic plasticity leads to a weight change (Δw) between the two cells, here measured by the change in the amplitude of postsynaptic potentials. The change in synaptic weight can be expressed by a function f that, in addition to spike timings (t_pre, t_post), can take into account other local quantities, such as the concentration of neuromodulators (ρ, green dots in A) or postsynaptic membrane potentials. (C) For a specific experimental setup, an evolutionary algorithm searches for individuals representing functions f that maximize the corresponding fitness function ℱ. An offspring is generated by modifying the genome of a parent individual. Several runs of the evolutionary algorithm can discover phenomenologically different solutions (f0, f1, f2) with comparable fitness. (D) An offspring is generated from a single parent via mutation. Mutations of the genome can, for example, exchange mathematical operators, resulting in a different function f. Credit: DOI: 10.7554/eLife.66273

Uncovering the mechanisms of learning via synaptic plasticity is a critical step towards understanding how our brains function and building truly intelligent, adaptive machines. Researchers from the University of Bern propose a new approach in which algorithms mimic biological evolution and learn efficiently through creative evolution.

Our brains are incredibly adaptive. Every day, we form new memories, acquire new knowledge, or refine existing skills. This stands in marked contrast to our current computers, which typically only perform pre-programmed actions. At the core of our adaptability lies synaptic plasticity. Synapses are the connection points between neurons, which can change in different ways depending on how they are used. This synaptic plasticity is an important research topic in neuroscience, as it is central to learning processes and memory. To better understand these brain processes and build adaptive machines, researchers in the fields of neuroscience and artificial intelligence (AI) are creating models for the mechanisms underlying these processes. Such models for learning and plasticity help to understand biological information processing and should also enable machines to learn faster.

Algorithms mimic biological evolution

Working in the European Human Brain Project, researchers at the Institute of Physiology at the University of Bern have now developed a new approach based on so-called evolutionary algorithms. These computer programs search for solutions to problems by mimicking the process of biological evolution, such as the concept of natural selection. Thus, biological fitness, which describes the degree to which an organism adapts to its environment, becomes a model for evolutionary algorithms. In such algorithms, the "fitness" of a candidate solution is how well it solves the underlying problem.
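To make the idea concrete, here is a minimal Python sketch of such a search loop. It is an illustration under stated assumptions, not the method used in the study: the operator set, the fixed expression template f(x, y, z) = (x op0 y) op1 z, the squared-error fitness and all function names are hypothetical choices made for this example. A genome is a pair of operator indices, a mutation exchanges one operator, and an offspring replaces its parent when its fitness is at least as high.

```python
import operator
import random

# Hypothetical operator set; a genome stores two indices into this list.
OPS = [operator.add, operator.sub, operator.mul]


def express(genome):
    """Turn a genome into the function f(x, y, z) = (x op0 y) op1 z."""
    op0, op1 = OPS[genome[0]], OPS[genome[1]]
    return lambda x, y, z: op1(op0(x, y), z)


def fitness(genome, samples, target):
    """Negative squared error against the target; 0 is a perfect match."""
    f = express(genome)
    return -sum((f(*s) - target(*s)) ** 2 for s in samples)


def mutate(genome, rng):
    """Create an offspring by exchanging exactly one operator."""
    child = list(genome)
    pos = rng.randrange(len(child))
    alternatives = [i for i in range(len(OPS)) if i != child[pos]]
    child[pos] = rng.choice(alternatives)
    return child


def evolve(samples, target, generations=50, offspring=4, seed=0):
    """Simple selection loop: keep an offspring if it is at least as fit."""
    rng = random.Random(seed)
    parent = [rng.randrange(len(OPS)) for _ in range(2)]
    parent_fit = fitness(parent, samples, target)
    for _ in range(generations):
        for _ in range(offspring):
            child = mutate(parent, rng)
            child_fit = fitness(child, samples, target)
            if child_fit >= parent_fit:
                parent, parent_fit = child, child_fit
    return parent, parent_fit


# Assumed toy problem: recover the rule (x - y) * z from a few samples.
SAMPLES = [(1, 2, 3), (2, 1, 4), (3, 5, 2), (0, 1, 1)]


def TARGET(x, y, z):
    return (x - y) * z


best, best_fit = evolve(SAMPLES, TARGET)
print("best genome:", best, "fitness:", best_fit)
```

A greedy loop like this one can stall on a candidate whose single mutations are all worse, which is one reason practical evolutionary algorithms typically maintain larger populations and perform several independent runs.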

Amazing creativity

The newly developed approach is referred to as the "evolving-to-learn" (E2L) approach or "becoming adaptive." The research team, led by Dr. Mihai Petrovici of the Institute of Physiology at the University of Bern and the Kirchhoff Institute for Physics at the University of Heidelberg, confronted the evolutionary algorithms with three typical learning scenarios. In the first, the computer had to detect a repeating pattern in a continuous stream of input without receiving feedback about its performance. In the second scenario, the computer received virtual rewards when behaving in a particular desired manner. Finally, in the third scenario of "guided learning," the computer was told precisely how much its behavior deviated from the desired one.

"In all these scenarios, the evolutionary algorithms were able to discover mechanisms of synaptic plasticity, and thereby successfully solved a new task," says Dr. Jakob Jordan, corresponding and co-first author from the Institute of Physiology at the University of Bern. In doing so, the algorithms showed amazing creativity: "For example, the algorithm found a new plasticity model in which signals we defined are combined to form a new signal. In fact, we observe that networks using this new signal learn faster than with previously known rules," emphasizes Dr. Maximilian Schmidt from the RIKEN Center for Brain Science in Tokyo, co-first author of the study. The results were published in the journal eLife.

"We see E2L as a promising approach to gain deep insights into biological learning principles and accelerate progress towards powerful artificial learning machines," says Mihai Petrovoci. "We hope it will accelerate the research on synaptic plasticity in the nervous system," concludes Jakob Jordan. The findings will provide new insights into how healthy and diseased brains work. They may also pave the way for the development of intelligent machines that can better adapt to the needs of their users.

More information: Jakob Jordan et al, Evolving interpretable plasticity for spiking networks, eLife (2021). DOI: 10.7554/eLife.66273

Journal information: eLife

Provided by University of Bern