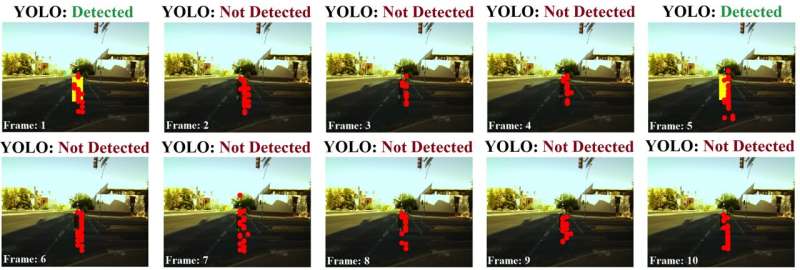

Scenario where the Inter-Frame Hungarian Algorithm ensures that radar reflections across consecutive frames from the same object(s) are still labeled, even if YOLO fails intermittently. Credit: Sengupta, Yoshizawa & Cao.

In recent years, roboticists and computer scientists have been developing a wide range of systems that can detect objects in their environment and navigate it accordingly. Most of these systems are based on machine learning and deep learning algorithms trained on large image datasets.

While there are now numerous image datasets for training machine learning models, those containing data collected using radar sensors are still scarce, despite the significant advantages of radars over optical sensors. Moreover, many of the available open-source radar datasets are difficult to adapt to different user applications.

Researchers at the University of Arizona have recently developed a new approach to automatically generate datasets containing labeled radar and camera data. This approach, presented in a paper published in IEEE Robotics and Automation Letters, runs a highly accurate object detection algorithm (YOLO) on the camera image stream and uses an association technique (the Hungarian algorithm) to label the radar point cloud.

"Deep-learning applications using radar require a lot of labeled training data, and labeling radar data is non-trivial, an extremely time and labor-intensive process, mostly carried out by manually comparing it with a parallelly obtained image data-stream," Arindam Sengupta, a Ph.D. student at the University of Arizona and primary researcher for the study, told TechXplore. "Our idea here was that if the camera and radar are looking at the same object, then instead of looking at images manually, we can leverage an image-based object detection framework (YOLO in our case) to automatically label the radar data."

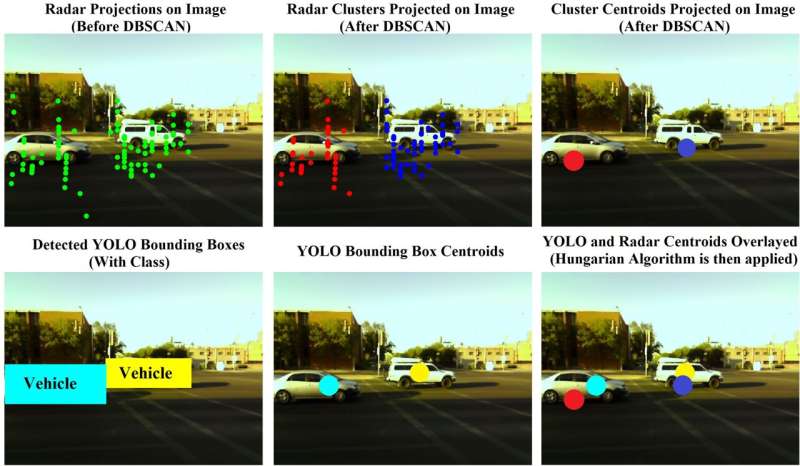

The auto-labeling algorithm at work on real camera-radar data acquired at a traffic intersection in Tucson, Arizona. Credit: Sengupta, Yoshizawa & Cao.

Three defining features of the approach introduced by Sengupta and his colleagues are its co-calibration, clustering and association capabilities. The approach co-calibrates a radar and a camera to determine how the location of an object detected by the radar maps onto pixel coordinates in the camera image.
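
To make the co-calibration idea concrete, the sketch below projects radar detections onto image pixels. It assumes a standard pinhole camera model, which the article does not confirm as the team's method. The intrinsic matrix `K`, the rotation `R`, the translation `t` and the function name `radar_to_pixel` are all hypothetical, and the radar axes are assumed to be aligned with the camera axes (z pointing forward).

```python
import numpy as np

def radar_to_pixel(points_radar, K, R, t):
    """Project Nx3 radar points (metres) to Nx2 pixel coordinates.

    Assumes a pinhole camera: R and t move points from the radar frame
    to the camera frame, and K maps camera-frame points to pixels.
    """
    points_cam = points_radar @ R.T + t   # radar frame -> camera frame
    uvw = points_cam @ K.T                # camera frame -> homogeneous pixels
    return uvw[:, :2] / uvw[:, 2:3]       # perspective divide by depth

# Hypothetical calibration values, chosen only for illustration.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)        # assumption: radar and camera axes are aligned
t = np.zeros(3)      # assumption: sensors are co-located

# Two radar detections, both 10 m in front of the sensors.
points = np.array([[0.0, 0.0, 10.0],
                   [1.0, 0.5, 10.0]])
pixels = radar_to_pixel(points, K, R, t)
print(pixels)
```

With these made-up values, a detection straight ahead lands at the image center (320, 240), while a detection offset by 1 m sideways and 0.5 m along the camera's y axis lands at (400, 280). In a real setup, `K`, `R` and `t` would come from a calibration procedure.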

"We used a density-based clustering scheme (DBSCAN) to a) detect and remove noise/stray radar returns; and b) segregate radar returns in clusters to distinguish between distinct objects," Sengupta said. "Finally, an intra-frame and an inter-frame Hungarian algorithm (HA) is used for association. The intra-frame HA associated the YOLO predictions to the co-calibrated radar clusters in a given frame, while the inter-frame HA associated the radar clusters pertaining to the same object over consecutive frames to account for labeling radar data in frames even when optical sensors fail intermittently."

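The intra-frame association step described by Sengupta can be illustrated with a minimal sketch. It uses SciPy's `linear_sum_assignment`, which solves the same minimum-cost assignment problem as the Hungarian algorithm. The cost function (pixel distance between a YOLO box center and a radar cluster centroid), the `max_dist` gate, the function name `associate` and all sample values are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def associate(box_centres, cluster_centroids, max_dist=50.0):
    """Match YOLO box centres to radar cluster centroids (both in pixels).

    Returns a list of (box_index, cluster_index) pairs. Pairs farther
    apart than max_dist (a hypothetical gate) are discarded.
    """
    # cost[i, j] = Euclidean pixel distance between box i and cluster j
    diff = box_centres[:, None, :] - cluster_centroids[None, :, :]
    cost = np.linalg.norm(diff, axis=2)
    rows, cols = linear_sum_assignment(cost)  # minimum-cost assignment
    return [(int(i), int(j)) for i, j in zip(rows, cols)
            if cost[i, j] <= max_dist]

# Hypothetical YOLO output: class labels and box centres in pixels.
labels = ["car", "person"]
box_centres = np.array([[100.0, 200.0],
                        [400.0, 220.0]])

# Hypothetical radar cluster centroids, already projected to pixels.
cluster_centroids = np.array([[405.0, 215.0],
                              [98.0, 207.0],
                              [600.0, 50.0]])

matches = associate(box_centres, cluster_centroids)
cluster_labels = {j: labels[i] for i, j in matches}
print(cluster_labels)
```

In this example, cluster 1 inherits the label "car" and cluster 0 inherits "person", while cluster 2 has no nearby box and stays unlabeled. An inter-frame version could plausibly reuse the same function with cluster centroids from two consecutive frames, so that a label carries forward to a frame in which YOLO produced no detection.
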
In the future, the new approach introduced by this team of researchers could help to automate the generation of radar-camera and radar-only datasets. In addition, in their paper the team explored proof-of-concept classification schemes based both on a radar-camera sensor-fusion approach and on data collected only by radars.

"We also suggested the use of an effective 12-dimensional radar feature vector, constructed using a combination of spatial, Doppler and RCS statistics, rather than the traditional use of either just the point-cloud distribution or just the micro-doppler data," Sengupta said.

Ultimately, the recent study carried out by Sengupta and his colleagues could open new possibilities for the rapid investigation and training of deep learning-based models for classifying or tracking objects using sensor-fusion. These models could help to enhance the performance of numerous robotic systems, ranging from autonomous vehicles to small robots.

Steps leading up to the intra-frame YOLO-radar association, which then results in the radar clusters getting labeled. Credit: Sengupta, Yoshizawa & Cao.

"Our lab at the University of Arizona conducts research on data-driven mmWave radar research targeting autonomous, healthcare, defense and transportation domains," Dr. Siyang Cao, an Assistant Professor at the University of Arizona and Principal Investigator for the study, told TechXplore. "Some of our ongoing research include investigating robust sensor-fusion based tracking schemes and further improving stand-alone mmWave radar perception using classical signal processing and deep learning."

More information: Automatic radar-camera dataset generation for sensor-fusion applications. IEEE Robotics and Automation Letters (2022). DOI: 10.1109/LRA.2022.3144524.

© 2022 Science X Network