Credit: Tian & Franchitti.

Artificial intelligence (AI) tools have proved to be highly valuable for completing a wide range of tasks. While they are primarily used to increase productivity or simplify everyday processes, they have also shown promise for automatically generating creative texts and artistic images.

Researchers at the University of Waterloo and New York University's Courant Institute have recently created an AI tool that can automatically generate unique artistic images from text descriptions. Their method, introduced in a paper pre-published on arXiv, builds on a dynamic memory generative adversarial network (DM-GAN), a model in which two artificial neural networks are trained against each other to generate increasingly convincing images.

"We create an end-to-end solution that can generate artistic images from text descriptions," Qinghe Tian and Prof. Jean-Claude Franchitti wrote in their paper.

The key idea behind the recent work by Tian and Franchitti was to create a model that could use text descriptions provided by users to produce artistic images matching these descriptions. This would allow people whose disabilities prevent them from drawing effectively, as well as others with limited drawing skills, to produce artistic images depicting specific subjects.

Most existing datasets for training generative models, however, contain either labeled images or texts, rather than images paired with their text descriptions. The researchers therefore had to come up with an alternative way of training their model.

"Due to the lack of datasets with paired text description and artistic images, it is hard to directly train an algorithm which can create art based on text input," the researchers explained in their paper. "To address this issue, we split our task into three steps."

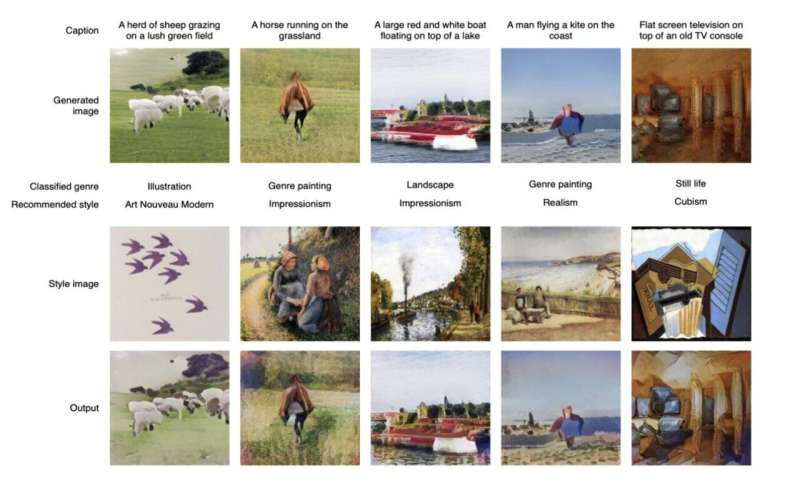

First, the researchers used their DM-GAN model to generate a realistic image that represents a text description. They then used ResNet, a deep residual neural network, to classify the image produced by the DM-GAN into one of the genre categories outlined by the WikiArt dataset.

The WikiArt dataset, which has often been used to train deep learning methods, contains more than 40,000 artistic paintings produced by 195 artists. Once the image produced by DM-GAN has been classified into one of the WikiArt genre categories, the system selects a painting style compatible with that genre and transfers it to the generated image using a neural artistic stylization network.
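The three steps can be pictured as a simple chain: generate, classify, stylize. The sketch below is a minimal illustration of that control flow only, not the researchers' code. The class name `TextToArtPipeline`, the toy functions, the genre labels and the genre-to-style mapping are all hypothetical stand-ins; in the actual system the three stages would be a trained DM-GAN, a ResNet classifier and a neural stylization network.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# To stay dependency-free, an "image" is a nested list of pixel intensities.
Image = List[List[float]]


@dataclass
class TextToArtPipeline:
    """Hypothetical wrapper chaining the three stages described in the article."""
    generate: Callable[[str], Image]        # step 1: stand-in for DM-GAN
    classify_genre: Callable[[Image], str]  # step 2: stand-in for the ResNet classifier
    stylize: Callable[[Image, str], Image]  # step 3: stand-in for the stylization network
    genre_to_style: Dict[str, str]          # assumed mapping, not taken from the paper
    default_style: str = "impressionism"    # assumed fallback for unmapped genres

    def run(self, description: str) -> Tuple[Image, str, str]:
        image = self.generate(description)                          # text -> realistic image
        genre = self.classify_genre(image)                          # image -> genre label
        style = self.genre_to_style.get(genre, self.default_style)  # genre -> compatible style
        return self.stylize(image, style), genre, style             # apply the chosen style


# Toy stand-ins so the sketch runs end to end; real models would replace these.
def toy_generate(description: str) -> Image:
    value = (len(description) % 10) / 10.0  # pixel value derived from text length
    return [[value, value], [value, value]]


def toy_classify(image: Image) -> str:
    pixels = [p for row in image for p in row]
    return "landscape" if sum(pixels) / len(pixels) >= 0.5 else "portrait"


def toy_stylize(image: Image, style: str) -> Image:
    scale = {"impressionism": 0.5, "cubism": 2.0}[style]  # "style" is just a brightness scale here
    return [[p * scale for p in row] for row in image]


pipeline = TextToArtPipeline(
    generate=toy_generate,
    classify_genre=toy_classify,
    stylize=toy_stylize,
    genre_to_style={"landscape": "impressionism", "portrait": "cubism"},
)

if __name__ == "__main__":
    art, genre, style = pipeline.run("a bird")
    print(genre, style, art)
```

Passing the stages in as plain callables keeps each one independently replaceable, which mirrors how the article describes the method as a combination of separate frameworks.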

The researchers evaluated their multi-framework method in a series of initial trial experiments. While it produced promising results, they would like to improve its performance further in future work.

"In general, we obtain acceptable results for multiple combinations of text inputs and desired styles," the researchers wrote in their paper. "However, there are still many areas of our solution that can be improved. In particular, we plan to add a speech recognition module to make it possible for people with hand disabilities to specify their inputs via voice instead of typing."

In the future, the technique developed by Tian and Franchitti could potentially be integrated into graphics and drawing applications, allowing people to produce high-quality artistic images irrespective of their abilities and artistic talents. The code for the model devised by the researchers is publicly available on GitHub. In future studies, the team also plans to compare the method's performance with that of other image generation methods and to improve its individual components.

More information: Qinghe Tian, Jean-Claude Franchitti, Text to artistic image generation. arXiv:2205.02439v1 [cs.CV], arxiv.org/abs/2205.02439

© 2022 Science X Network