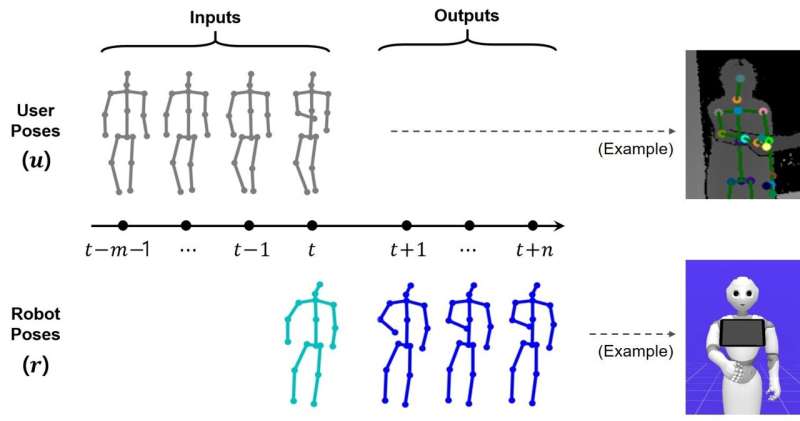

The generation of robot social behavior involves assigning the next robot behavior in order to respond to the current user behavior while maintaining continuity with the current robot behavior.

Researchers at the Electronics and Telecommunications Research Institute (ETRI) in Korea have recently developed a deep learning-based model that could help robots produce engaging nonverbal social behaviors, such as hugging or shaking someone's hand. Their model, presented in a paper pre-published on arXiv, can learn new context-appropriate social behaviors on its own by observing interactions among humans.

"Deep learning techniques have produced interesting results in areas such as computer vision and natural language understanding," Woo-Ri Ko, one of the researchers who carried out the study, told TechXplore. "We set out to apply deep learning to social robotics, specifically by allowing robots to learn social behavior from human-human interactions on their own. Our method requires no prior knowledge of human behavior models, which are usually costly and time-consuming to implement."

The artificial neural network architecture developed by Ko and his colleagues combines the sequence-to-sequence (Seq2Seq) model, introduced by Google researchers in 2014, with generative adversarial networks (GANs). The new architecture was trained on the AIR-Act2Act dataset, a collection of 5,000 human-human interactions recorded in 10 different scenarios.

"The proposed neural network architecture consists of an encoder, decoder and discriminator," Ko explained. "The encoder encodes the current user behavior, the decoder generates the next robot behavior according to the current user and robot behaviors, and the discriminator prevents the decoder from outputting invalid pose sequences when generating long-term behavior."
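The division of labor Ko describes can be illustrated with a minimal, untrained sketch in plain Python. It is not the ETRI model: the pose size (`POSE_DIM`), the hidden size (`HIDDEN`), the single-layer recurrent updates and every class and function name below are assumptions made for illustration, and the discriminator is a logistic score over two hand-picked features (mean pose and average frame-to-frame change) standing in for a learned network. The sketch only shows the data flow: the encoder turns the user's pose sequence into a context vector, the decoder generates robot poses one step at a time from that context and the robot's previous pose, and the discriminator returns a score in (0, 1) for a whole generated sequence.

```python
import math
import random

# Hypothetical sizes: each pose is POSE_DIM joint values; HIDDEN is the state size.
POSE_DIM = 4
HIDDEN = 8


def rand_matrix(rows, cols, rng):
    """Small random weights. Untrained: this only illustrates the data flow."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


class Encoder:
    """Reads the current user pose sequence into one context vector (a plain RNN)."""

    def __init__(self, rng):
        self.w_in = rand_matrix(HIDDEN, POSE_DIM, rng)
        self.w_rec = rand_matrix(HIDDEN, HIDDEN, rng)

    def __call__(self, user_poses):
        h = [0.0] * HIDDEN
        for pose in user_poses:
            pre = add(matvec(self.w_in, pose), matvec(self.w_rec, h))
            h = [math.tanh(z) for z in pre]
        return h


class Decoder:
    """Generates robot poses step by step from the user context and the robot's last pose."""

    def __init__(self, rng):
        self.w_ctx = rand_matrix(HIDDEN, HIDDEN, rng)
        self.w_pose = rand_matrix(HIDDEN, POSE_DIM, rng)
        self.w_out = rand_matrix(POSE_DIM, HIDDEN, rng)

    def __call__(self, context, robot_pose, steps):
        poses = []
        for _ in range(steps):
            pre = add(matvec(self.w_ctx, context), matvec(self.w_pose, robot_pose))
            h = [math.tanh(z) for z in pre]
            # Output a change to the previous pose, keeping the motion continuous
            # with the robot's current behavior (add() returns a new list).
            robot_pose = add(robot_pose, matvec(self.w_out, h))
            poses.append(robot_pose)
        return poses


class Discriminator:
    """Scores a non-empty pose sequence in (0, 1); training would push valid motion toward 1."""

    def __init__(self, rng):
        self.w = [rng.uniform(-0.1, 0.1) for _ in range(2 * POSE_DIM)]

    def __call__(self, poses):
        n = len(poses)
        mean = [sum(p[i] for p in poses) / n for i in range(POSE_DIM)]
        # Average frame-to-frame change per joint: a crude smoothness feature.
        step = [
            sum(abs(b[i] - a[i]) for a, b in zip(poses, poses[1:])) / max(n - 1, 1)
            for i in range(POSE_DIM)
        ]
        z = sum(w * f for w, f in zip(self.w, mean + step))
        return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    rng = random.Random(0)
    encoder, decoder, discriminator = Encoder(rng), Decoder(rng), Discriminator(rng)
    user_seq = [[0.1 * t] * POSE_DIM for t in range(5)]  # toy user motion
    context = encoder(user_seq)
    robot_seq = decoder(context, [0.0] * POSE_DIM, steps=10)
    print(len(robot_seq), round(discriminator(robot_seq), 3))
```

In adversarial training, the discriminator would learn to tell recorded human motion from generated motion, and the decoder would be penalized whenever its long output sequences scored as invalid. That training loop, and the deep learning framework a real implementation would use, are left out of this sketch.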

From the 5,000 interactions in the AIR-Act2Act dataset, the researchers extracted more than 110,000 training samples (i.e., short videos) in which humans performed specific nonverbal social behaviors while interacting with others. They trained their model to generate five nonverbal behaviors for robots: bowing, staring, shaking hands, hugging and blocking one's own face.
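The article does not say how the samples were cut from the recordings. More than 110,000 samples from 5,000 interactions works out to more than 22 samples per interaction on average, which suggests overlapping segments. One common way to produce those is a sliding window, sketched below as an assumption rather than the authors' procedure: each window pairs what the user and the robot-role participant just did with what the robot-role participant did next. `extract_samples`, `Sample` and the `history`, `horizon` and `stride` parameters (in frames) are hypothetical.

```python
from typing import List, NamedTuple, Sequence

Pose = List[float]  # joint values for one frame


class Sample(NamedTuple):
    user_history: List[Pose]   # what the user just did
    robot_history: List[Pose]  # what the robot-role participant just did
    robot_target: List[Pose]   # what the robot-role participant did next


def extract_samples(
    user_track: Sequence[Pose],
    robot_track: Sequence[Pose],
    history: int,
    horizon: int,
    stride: int,
) -> List[Sample]:
    """Cut one frame-aligned interaction into overlapping training samples.

    history, horizon and stride are hypothetical parameters, in frames.
    """
    if len(user_track) != len(robot_track):
        raise ValueError("user and robot tracks must be frame-aligned")
    if min(history, horizon, stride) < 1:
        raise ValueError("history, horizon and stride must be positive")
    samples = []
    last_start = len(user_track) - history - horizon
    # Interactions shorter than history + horizon frames yield no samples.
    for start in range(0, last_start + 1, stride):
        mid = start + history
        samples.append(
            Sample(
                user_history=list(user_track[start:mid]),
                robot_history=list(robot_track[start:mid]),
                robot_target=list(robot_track[mid : mid + horizon]),
            )
        )
    return samples
```

For a 100-frame interaction, `history=10`, `horizon=10` and `stride=5` give (100 - 10 - 10) / 5 + 1 = 17 samples; a smaller stride yields more, heavily overlapping samples.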

Ko and his colleagues evaluated their model for nonverbal social behavior generation in a series of simulations, specifically applying it to a simulated version of Pepper, a humanoid robot that is widely used in research settings. Their initial findings were promising, as their model successfully generated the five behaviors it was trained on at appropriate times during simulated interactions with humans.

"We showed that it is possible to teach robots different kinds of social behaviors using a deep learning approach," Ko said. "Our model can also generate more natural behaviors, instead of repeating pre-defined behaviors in the existing rule-based approach. With the robot generating these social behaviors, users will feel that their behavior is understood and emotionally cared for."

The team's model could help make social robots more adaptive and socially responsive, which could in turn improve the overall quality and flow of their interactions with human users. In the future, it could be implemented and tested on a wide range of robotic systems, including home service, guide, delivery, educational and telepresence robots.

"We now intend to conduct further experiments to test a robot's ability to exhibit appropriate social behaviors when deployed in the practical world and facing a human; the proposed behavior generator would be tested for its robustness to noisy input data that a robot is likely to acquire," Ko added. "Moreover, by collecting and learning more interaction data, we plan to extend the number of social behaviors and complex actions that a robot can exhibit."

More information: Woo-Ri Ko et al, Nonverbal Social Behavior Generation for Social Robots Using End-to-End Learning, arXiv (2022). DOI: 10.48550/arxiv.2211.00930

Ilya Sutskever et al, Sequence to Sequence Learning with Neural Networks, arXiv (2014). DOI: 10.48550/arxiv.1409.3215

Woo-Ri Ko et al, AIR-Act2Act: Human–human interaction dataset for teaching non-verbal social behaviors to robots, The International Journal of Robotics Research (2021). DOI: 10.1177/0278364921990671

Journal information: International Journal of Robotics Research, arXiv

© 2022 Science X Network