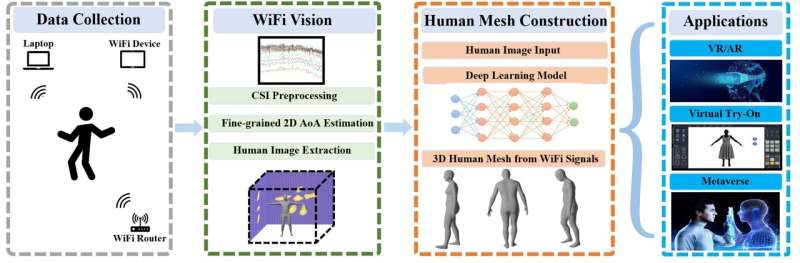

Wi-Mesh analyzes wi-fi signal reflections at multiple antennas to construct a 3D human mesh representation, which can then be used in a range of applications. Credit: Yichao Wang and Jie Yang

A 3D mesh represents a three-dimensional object as a set of vertices connected into polygons. These representations are useful for numerous technological applications, including computer vision, virtual reality (VR) and augmented reality (AR) systems.
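To make the idea of "vertices and polygons" concrete, here is a minimal Python sketch of a triangle mesh. The `TriangleMesh` class and its `surface_area` method are hypothetical names chosen for illustration; they are not taken from the Wi-Mesh paper, and real human meshes contain thousands of vertices rather than four.

```python
import math
from dataclasses import dataclass


@dataclass
class TriangleMesh:
    """Illustrative mesh: 3D points plus triangles that index into them."""
    vertices: list  # list of (x, y, z) tuples
    faces: list     # list of (i, j, k) vertex-index triples

    def surface_area(self) -> float:
        """Sum the areas of all triangles (half the cross-product norm)."""
        total = 0.0
        for i, j, k in self.faces:
            a, b, c = self.vertices[i], self.vertices[j], self.vertices[k]
            ab = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
            ac = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
            cross = (
                ab[1] * ac[2] - ab[2] * ac[1],
                ab[2] * ac[0] - ab[0] * ac[2],
                ab[0] * ac[1] - ab[1] * ac[0],
            )
            total += 0.5 * math.sqrt(sum(x * x for x in cross))
        return total


# A tetrahedron: the simplest closed surface, 4 vertices and 4 triangles.
tetrahedron = TriangleMesh(
    vertices=[(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)],
    faces=[(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)],
)
```

Because faces store indices rather than coordinates, moving a single vertex deforms every polygon that shares it, which is what lets a mesh follow changes in body shape and pose.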

Researchers at Florida State University and Rutgers University have recently developed Wi-Mesh, a system that can create reliable 3D human meshes, representations of humans that can then be used by different computational models and applications. Their system was presented at the Twentieth ACM Conference on Embedded Networked Sensor Systems (ACM SenSys '22), a conference focusing on computer science research.

"Our research group specializes in cutting-edge wi-fi sensing research," Professor Jie Yang at Florida State University, one of the researchers who carried out the study, told Tech Xplore. "In previous work, we have developed systems that use wi-fi devices to sense a range of human activities and objects, including large-scale human body movements, small-scale finger movements, sleep monitoring, and daily objects. Our E-eyes and WiFinger systems were among the first to use wi-fi sensing to classify various types of daily activities and finger gestures, with a focus on predefined activities using a trained model."

The key objective of the recent work by Professor Yang and his colleagues was to assess whether wi-fi devices that are commonly used for communications could also help to construct 3D human meshes. A 3D human mesh represents the surface of a human body in three dimensions, capturing different people's heights, weights, somatotypes, body proportions and articulation-induced body deformations.

"3D human meshes have numerous applications, including VR/AR content creation, virtual try-on, and exercise monitoring, and are a fundamental building block for various downstream tasks, such as animation, clothed human reconstruction, and rendering," Professor Yang explained.

Wi-Mesh, the system created by the researchers, leverages several antennas integrated in common wi-fi communication devices to create human meshes. Essentially, the system emits wi-fi signals to probe the human body and then analyzes reflections to derive the shape of the human body.

"Our system analyzes reflections off the human body to estimate the two-dimensional angle of arrival (2D AoA) of the signal reflections, allowing wi-fi devices to 'see' the environment like humans do," Professor Yang said. "The system then extracts the 2D AoA images of the human and further leverages deep learning models to convert the 2D AoA images of human body into 3D mesh representation, which can be used in various human computer interaction (HCI) applications like VR/AR content creation, virtual try-on, and exercise monitoring."
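As a rough illustration of what a two-dimensional angle of arrival means, the Python sketch below recovers azimuth and elevation for a single reflected path from the phase differences between three antennas. Everything in it is an assumption made for illustration: the L-shaped layout with half-wavelength spacing, the roughly 5 GHz wavelength, the single noise-free path, and the function names `simulate_channel` and `estimate_2d_aoa`. It is not the estimator used in Wi-Mesh, which has to separate many reflections and build a full 2D AoA image before any deep learning model is applied.

```python
import cmath
import math

WAVELENGTH = 0.06            # metres, roughly 5 GHz wi-fi (assumed)
SPACING = WAVELENGTH / 2     # half-wavelength spacing avoids phase wrap-around
WAVENUMBER = 2 * math.pi / WAVELENGTH


def simulate_channel(azimuth, elevation):
    """Complex samples at a reference antenna and at antennas offset by
    SPACING along the x and z axes, for one ideal plane wave (angles in radians)."""
    ux = math.cos(elevation) * math.cos(azimuth)  # x component of arrival direction
    uz = math.sin(elevation)                      # z component of arrival direction
    return (
        1 + 0j,
        cmath.exp(1j * WAVENUMBER * SPACING * ux),
        cmath.exp(1j * WAVENUMBER * SPACING * uz),
    )


def _clamp(value):
    return max(-1.0, min(1.0, value))


def estimate_2d_aoa(h_ref, h_x, h_z):
    """Estimate (azimuth, elevation) in radians from inter-antenna phase differences.
    Assumes the reflector is in front of the array, so azimuth lies in [0, pi]."""
    dphi_x = cmath.phase(h_x * h_ref.conjugate())
    dphi_z = cmath.phase(h_z * h_ref.conjugate())
    elevation = math.asin(_clamp(dphi_z / (WAVENUMBER * SPACING)))
    cos_el = math.cos(elevation)
    if cos_el < 1e-9:
        # Signal arrives from straight above or below: azimuth is undefined.
        return 0.0, elevation
    azimuth = math.acos(_clamp(dphi_x / (WAVENUMBER * SPACING * cos_el)))
    return azimuth, elevation


samples = simulate_channel(math.radians(60), math.radians(20))
azimuth, elevation = estimate_2d_aoa(*samples)
```

Repeating such an estimate over many reflections would give a coarse map of where signal energy arrives from in azimuth and elevation, which is the intuition behind treating 2D AoA output as an image of the scene.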

Wi-Mesh has several advantages over traditional camera-based systems for creating 3D human meshes. Most notably, camera-based systems cannot function in non-line-of-sight (NLoS) scenarios, in the dark, or when a person is wearing baggy clothing, while Wi-Mesh works even through walls and obstacles, because wi-fi signals can penetrate them.

In contrast with other proposed systems, Wi-Mesh also does not rely on wearable sensors or markers, making it unobtrusive and easy to use. Finally, the new system can be deployed on existing wi-fi communication devices, which makes it more affordable and could facilitate its widespread adoption.

"Our study shows that it's possible to use commodity wi-fi to construct a 3D human mesh, something that was previously thought impossible," Professor Yang said. "This breakthrough means that wi-fi devices can not only communicate but also 'see' and visualize the human body, offering a new level of sensing capabilities."

The recent study by this team of researchers could soon open new and exciting possibilities for the construction of human meshes for different technological applications. In the future, the Wi-Mesh system could be used to create better VR and AR content, more advanced real-time dancing video games and exercise monitoring apps, and other technologies that bridge the gap between the physical and virtual worlds, such as the widely discussed "metaverse."

In their next studies, Professor Yang and his colleagues plan to create innovative applications that can take advantage of the 3D human meshes created by their system. One of these applications, a smart home security system, is set to be presented at the USENIX Security Conference 2023.

"We are currently working on an intelligent surveillance system that uses 3D human meshes from wi-fi devices to perform person re-identification (Re-ID)," Professor Yang added. "The constructed 3D human mesh can capture information about a person's height, weight, body proportions, and gait, making it possible to identify individuals. This approach offers several benefits compared to traditional camera-based surveillance systems. For example, it can identify individuals even in changing appearances and poses, and it works in non-line-of-sight scenarios, where camera-based systems often fail."

More information: Yichao Wang et al, 3D Human Mesh Construction Leveraging Wi-Fi, arXiv (2022). DOI: 10.48550/arxiv.2210.10957

Yan Wang et al, E-eyes, Proceedings of the 20th annual international conference on Mobile computing and networking (2014). DOI: 10.1145/2639108.2639143

Sheng Tan et al, MultiTrack, Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (2019). DOI: 10.1145/3290605.3300766

Sheng Tan et al, WiFinger, Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing (2016). DOI: 10.1145/2942358.2942393

Jian Liu et al, Tracking Vital Signs During Sleep Leveraging Off-the-shelf WiFi, Proceedings of the 16th ACM International Symposium on Mobile Ad Hoc Networking and Computing (2015). DOI: 10.1145/2746285.2746303

Yili Ren et al, Liquid Level Sensing Using Commodity WiFi in a Smart Home Environment, Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (2020). DOI: 10.1145/3380996

Journal information: arXiv

© 2023 Science X Network