Microsoft has introduced a new tool that can identify human emotions in pictures, made possible by recent advances in machine learning and artificial intelligence. What does the person in the photo feel? Sadness? Anger? Something else? The software can "read" the emotion. The tool was announced at Microsoft's Future Decoded conference in the UK, and the Microsoft Project Oxford team is behind it.

The pitch to developers is that the Microsoft Project Oxford Emotion API will let them build more personalized apps. In the words of the Microsoft Project Oxford page, "Microsoft's cutting edge cloud-based emotion recognition algorithms let you build more personalized apps. The API identifies emotions in the wild based on facial expressions that are universal."

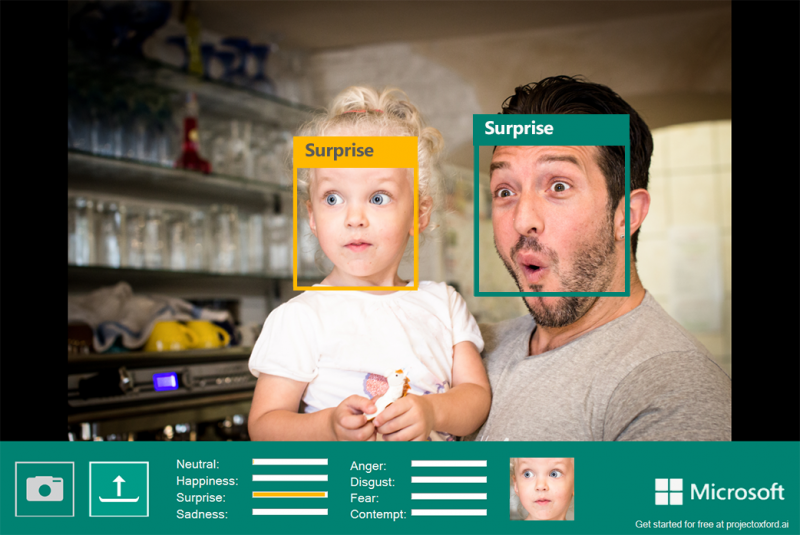

Using machine learning, the Emotion API can distinguish among eight emotions: happiness, sadness, anger, surprise, disgust, fear, contempt and neutral, based on facial expressions.

The Project Oxford team explained more about the tool. "The Emotion API takes an image as an input, and returns the confidence across a set of emotions for each face in the image, as well as bounding box for the face, using the Face API. If a user has already called the Face API, they can submit the face rectangle as an optional input." Also, "In interpreting results from the Emotion API, the emotion detected should be interpreted as the emotion with the highest score, as scores are normalized to sum to one. Users may choose to set a higher confidence threshold within their application, depending on their needs."
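The interpretation rule quoted above (take the highest score, optionally require a minimum confidence) can be sketched in a few lines of Python. This is only an illustration: the `scores` dictionary, its key names and the `top_emotion` helper are assumptions made for the example, not the API's documented response format.

```python
from typing import Dict, Optional, Tuple


def top_emotion(
    scores: Dict[str, float], threshold: float = 0.0
) -> Optional[Tuple[str, float]]:
    """Return (emotion, score) for the highest score, or None if it falls below threshold."""
    if not scores:
        return None
    # Scores are described as normalized to sum to one, so the maximum is the best guess.
    emotion, score = max(scores.items(), key=lambda item: item[1])
    return (emotion, score) if score >= threshold else None


# Hypothetical scores for one face; the values are made up and sum to one.
example = {
    "happiness": 0.62,
    "sadness": 0.05,
    "anger": 0.03,
    "surprise": 0.20,
    "disgust": 0.02,
    "fear": 0.02,
    "contempt": 0.01,
    "neutral": 0.05,
}

print(top_emotion(example))                 # best guess with no threshold
print(top_emotion(example, threshold=0.8))  # stricter application-level threshold
```

With these made-up numbers the first call reports happiness, while the stricter threshold rejects the result because no single emotion is confident enough.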

Chris Bishop, head of Microsoft Research Cambridge in the UK, demonstrated the tool at Microsoft's Future Decoded conference.

The Emotion API became available to developers as a public beta on Wednesday. "In addition, Microsoft is releasing public beta versions of several other new tools by the end of the year. The tools are available for a limited free trial," said the company's "Next at Microsoft" blog.

Tibi Puiu in ZME Science said that the software will analyze a given photo smaller than 4MB in size, identify a face and give a score for the kinds of emotions it manages to interpret. "The highest value, or the best guess, will show up first."

Supported image formats include: JPEG, PNG, GIF (the first frame) and BMP. The Project Oxford team said the emotions "are understood to be cross-culturally and universally communicated with particular facial expressions."
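A developer might want to check the reported input limits (a file smaller than 4MB, in JPEG, PNG, GIF or BMP format) before sending an image. The sketch below does that locally by looking at the file's leading bytes. The helper names are hypothetical, and treating "4MB" as 4 × 1024 × 1024 bytes is an assumption; the service's exact limit may be defined differently.

```python
from typing import Optional

# Assumption: "4MB" is read as 4 * 1024 * 1024 bytes.
MAX_BYTES = 4 * 1024 * 1024

# Well-known leading byte signatures for the four supported formats.
SIGNATURES = {
    "JPEG": (b"\xff\xd8\xff",),
    "PNG": (b"\x89PNG\r\n\x1a\n",),
    "GIF": (b"GIF87a", b"GIF89a"),
    "BMP": (b"BM",),
}


def detect_format(data: bytes) -> Optional[str]:
    """Return the format name if the data starts with a known signature, else None."""
    for name, signatures in SIGNATURES.items():
        if any(data.startswith(sig) for sig in signatures):
            return name
    return None


def is_acceptable(data: bytes) -> bool:
    """True if the image is under the size limit and in a supported format."""
    return len(data) < MAX_BYTES and detect_format(data) is not None


# Usage with synthetic bytes standing in for real image files.
small_png = b"\x89PNG\r\n\x1a\n" + b"\x00" * 16
oversized_bmp = b"BM" + b"\x00" * MAX_BYTES
print(detect_format(small_png), is_acceptable(small_png))
print(detect_format(oversized_bmp), is_acceptable(oversized_bmp))
```

Checking signatures rather than file extensions avoids trusting a misnamed file, though it does not confirm that the rest of the image is valid.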

Using machine learning, these types of systems get smarter as they receive more data. In the case of facial recognition, said the Next at Microsoft blog, "the system can learn to recognize certain traits from a training set of pictures it receives, and then it can apply that information to identify facial features in new pictures it sees."

Dave Gershgorn in Popular Science talked about applications for this tool. "Microsoft says that this could be useful in gauging customers' reactions to products in stores, and even a reactive messaging app."

Ryan Galgon, a program manager in Microsoft's Technology and Research group, said developers might want to use these tools to create systems that marketers can use to gauge people's reaction to a store display, movie or food.

More information: www.projectoxford.ai/demo/emotion#detection

© 2015 Tech Xplore