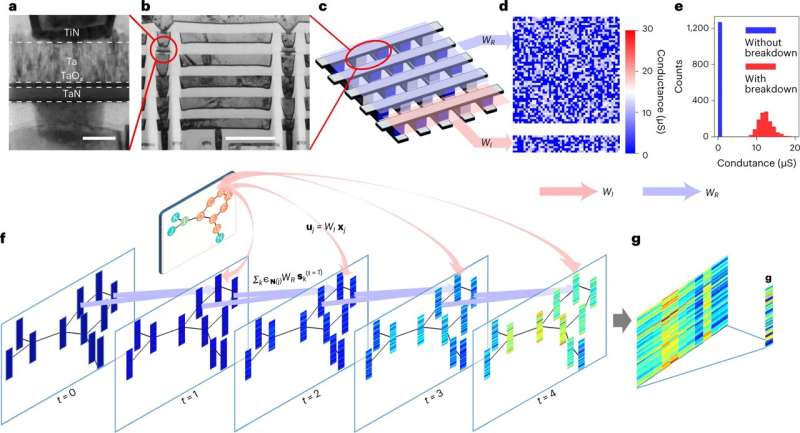

Hardware–software co-design of random resistive memory-based ESGNN for graph learning. a, A cross-sectional transmission electron micrograph of a single resistive memory cell that works as a random resistor after dielectric breakdown. Scale bar 20 nm. b, A cross-sectional transmission electron micrograph of the resistive memory crossbar array fabricated using the backend-of-line process on a 40 nm technology node tape-out. Scale bar 500 nm. c, A schematic illustration of the partition of the random resistive memory crossbar array, where cells shaded in blue are the weights of the recursive matrix (passing messages along edges) while those in red are the weights of the input matrix (transforming node input features). d, The corresponding conductance map of the two random resistor arrays in c. e, The conductance distribution of the random resistive memory arrays. f, The node embedding procedure of the proposed ESGNN. The internal state of each node at the next time step is co-determined by the sum of neighboring contributions (blue arrows indicate multiplications between node internal state vectors and the recursive matrix in d), the input feature of the node after a random projection (red arrows indicate multiplications between input node feature vectors and the input matrix in d) and the node internal state at the previous time step. g, The graph embedding based on node embeddings. The graph embedding vector g is the sum pooling of all the node internal state vectors at the last time step. Credit: Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00609-5
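The node and graph embedding steps in panels f and g can be sketched in plain Python. This is a minimal software sketch under stated assumptions, not the authors' implementation: the leaky tanh update, the leak rate, the uniform weight distributions and their scales, and all function names (`esgnn_embed`, `random_matrix`, `matvec`) are hypothetical choices for illustration. On the chip, the two matrix-vector products are performed in analogue by the random resistor arrays rather than in software.

```python
import math
import random


def random_matrix(rows, cols, scale, rng):
    """Fixed random weights standing in for RRM conductances (assumed uniform in [-scale, scale])."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def esgnn_embed(adjacency, features, hidden=8, steps=4, leak=0.5, seed=0):
    """Return (node_states, graph_embedding) for one graph (hypothetical helper).

    adjacency: dict mapping each node to a list of its neighbors
    features:  dict mapping each node to its input feature vector
    """
    rng = random.Random(seed)
    in_dim = len(next(iter(features.values())))
    w_in = random_matrix(hidden, in_dim, 1.0, rng)   # input matrix (red cells in c)
    w_rec = random_matrix(hidden, hidden, 0.3, rng)  # recursive matrix (blue cells in c)
    # Random projection of each node's input features; fixed across time steps.
    projected = {v: matvec(w_in, u) for v, u in features.items()}
    states = {v: [0.0] * hidden for v in adjacency}
    for _ in range(steps):
        new_states = {}
        for v, neighbors in adjacency.items():
            # Sum neighbor states, then multiply by the recursive matrix once;
            # by linearity this equals summing w_rec @ h_u over the neighbors.
            agg = [0.0] * hidden
            for u in neighbors:
                agg = [a + b for a, b in zip(agg, states[u])]
            pre = [m + p for m, p in zip(matvec(w_rec, agg), projected[v])]
            # Leaky update that keeps part of the previous state (assumed form).
            new_states[v] = [(1.0 - leak) * h + leak * math.tanh(x)
                             for h, x in zip(states[v], pre)]
        states = new_states
    # Graph embedding g: sum pooling of all node states at the last time step.
    graph_embedding = [sum(col) for col in zip(*states.values())]
    return states, graph_embedding


adjacency = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0], 3: [0.5, 0.5]}
node_states, g = esgnn_embed(adjacency, features)
print(len(g))  # 8
```

Because the random weights are never updated, the same embedding function can be reused for every graph; in this sketch only a downstream classifier on `g` would need training.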

Graph neural networks have been widely used to study social networks, e-commerce, drug prediction, human-computer interaction and more.

In a new study published as the cover story of Nature Machine Intelligence, researchers from the Institute of Microelectronics of the Chinese Academy of Sciences (IMECAS) and the University of Hong Kong have used random resistive memory (RRM) to accelerate graph learning, achieving a 40.37-fold improvement in energy efficiency over a graphics processing unit (GPU) on representative graph learning tasks.

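Deep learning with graphs on traditional von Neumann computers, where memory and processing units are physically separated, requires frequent data shuttling between them, which inevitably incurs long processing times and high energy consumption. In-memory computing with resistive memory, which performs computation where the data are stored, may offer a solution.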

To address these challenges, the researchers presented a hardware–software co-design: the RRM-based echo state graph neural network (ESGNN).

The RRM not only harnesses low-cost, nanoscale and stackable resistors for highly efficient in-memory computing, but also leverages the intrinsic stochasticity of dielectric breakdown to implement random projections in hardware. These random weights form an echo state network, in which the random input and recurrent weights stay fixed and only a simple output layer is trained, which greatly reduces the training cost.
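The training-cost argument can be illustrated with a toy reservoir-style setup: random weights are sampled once and frozen, and only a linear readout is fitted, here by ridge regression, a common choice for echo state networks. This is a hedged sketch, not the paper's training procedure; the XOR task, the 16 tanh features, the ridge values and the helper names (`solve`, `train_readout`, `predict`, `features`) are all hypothetical.

```python
import math
import random


def solve(a, b):
    """Solve a @ x = b for square a by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def train_readout(embeddings, targets, ridge=1e-2):
    """Ridge regression w = (X^T X + ridge * I)^-1 X^T y: the only trained weights."""
    d = len(embeddings[0])
    xtx = [[sum(e[i] * e[j] for e in embeddings) + (ridge if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(e[i] * y for e, y in zip(embeddings, targets)) for i in range(d)]
    return solve(xtx, xty)


def predict(w, embedding):
    """Linear readout applied to a feature vector."""
    return sum(wi * xi for wi, xi in zip(w, embedding))


# Fixed random projection standing in for the RRM array: sampled once, never trained.
rng = random.Random(0)
w_fixed = [[rng.gauss(0.0, 1.0) for _ in range(2)] for _ in range(16)]


def features(x):
    """Random tanh features of input x, plus a constant bias term."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w_fixed] + [1.0]


# Toy XOR task: not linearly separable in the raw inputs.
inputs = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
targets = [-1.0, 1.0, 1.0, -1.0]
w = train_readout([features(x) for x in inputs], targets, ridge=1e-6)
print([round(predict(w, features(x)), 2) for x in inputs])
```

Training here reduces to solving one small linear system, with no gradient updates to the random weights; this is the property that lets physically random, non-programmed resistors serve as network weights.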

The work is significant for developing next-generation AI hardware systems.

More information: Shaocong Wang et al, Echo state graph neural networks with analogue random resistive memory arrays, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00609-5

Journal information: Nature Machine Intelligence

Provided by Chinese Academy of Sciences