Disaster relief could benefit from neural net combining multiple remote data sources

In an example of the burgeoning field of 'data fusion', researchers have developed a neural net technique that bridges optical imaging and synthetic-aperture radar into a single comprehensive data source. The approach combines various sets of information more capably than traditional methods.

The approach is described in a paper published on October 12 in the journal Space: Science & Technology.

Most contemporary techniques used to interpret information from remote sensing (such as from satellites or planes) are focused on single-modal data—that received from a single source of data collection. Such interpretation technologies rarely make full use of multiple sources (or 'modes'), and so fail to take advantage of complementary data that, when taken together, can tell a fuller story of what is being observed.

One example is optical imaging from satellites (the sort of passive beam-scanning many scientists working with remote-sensing data will be familiar with), which is rarely paired with synthetic-aperture radar, or SAR. SAR is an active form of radar: it emits its own energy and then records how much of that energy is reflected back after interacting with the Earth. While interpreting optical imagery is similar to interpreting a photograph, SAR data require a different way of thinking because the signal instead responds to physical characteristics of the surface, such as its roughness and moisture content.

Crucially, unlike optical imaging, SAR is not defeated by challenging illumination conditions or clouds and fog. However, it suffers from a lot of data 'noise' and low texture details, which means that even well-trained experts sometimes struggle to interpret the output.

As a result, over the last decade or so, researchers have begun developing ways to use artificial intelligence to combine multiple modes of data collection, such as optical imaging and SAR, into a single comprehensive source. This merging of data from different modes or types of sensors is often called 'data fusion'.

This emerging area of innovation promises to revolutionize fields that make use of remote sensing—as varied as land-use monitoring, pollution prevention and military intelligence—by combining various sets of information into a single source faster, more comprehensively and more capably than traditional methods.

One crucial area where data fusion promises substantial benefit is disaster response. Such work should become timelier if SAR and optical imaging can be fused, because adverse weather and darkness would no longer be obstacles to rescue and monitoring efforts. In addition, tracking down a missing flight, such as Malaysia Airlines Flight 370, which disappeared after leaving Kuala Lumpur in 2014, should become easier.

"At the time of the missing flight, human analysts struggled with the volume and variety of space-based data," said Meiyu Huang, an assistant professor with the Qian Xuesen Laboratory of Space Technology. "If we could figure out a way to fuse SAR and optical imaging, rescuers should be able to use time-series remote sensing data from multiple sources to detect and pinpoint phenomena across very large areas much faster."

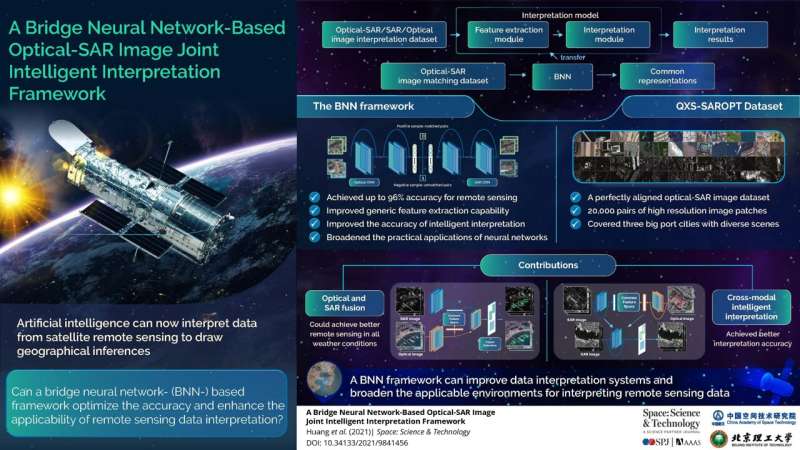

The researchers developed a data fusion algorithm they call a bridge neural net, or BNN, to combine optical and SAR data. It enhances the observed features that are common to both data sources, which helps the AI produce better matches between them.

The AI first has to be trained on a very large collection of SAR and optical data, so the researchers also put together a dataset of 20,000 pairs of perfectly aligned optical-SAR image matches from various high-resolution scenes of San Diego, Shanghai, and Qingdao. The SAR images come from the Gaofen-3 satellite, while the corresponding optical images are drawn from Google Earth. This collection, which they call QXS-SAROPT, has been made publicly available under an open-access license for other researchers to use.
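
As a rough illustration of how aligned pairs could feed a matching model, the sketch below turns a list of aligned optical-SAR pairs into labelled training samples. The article does not describe how the researchers built their training samples, so the negative-sampling scheme, the function name, and the file names here are assumptions.

```python
import random


def build_training_pairs(aligned_pairs, negatives_per_positive=1, seed=0):
    """Return (optical, sar, label) samples: label 1 for aligned pairs, 0 for mismatched ones."""
    rng = random.Random(seed)  # fixed seed keeps the sampling reproducible
    samples = []
    for i, (optical, sar) in enumerate(aligned_pairs):
        samples.append((optical, sar, 1))  # the aligned pair is a positive example
        others = [j for j in range(len(aligned_pairs)) if j != i]
        k = min(negatives_per_positive, len(others))
        for j in rng.sample(others, k):
            # Pair this optical image with a SAR image from a different scene.
            samples.append((optical, aligned_pairs[j][1], 0))
    return samples


# Hypothetical file names; the real dataset's layout may differ.
pairs = [
    ("optical_0001.png", "sar_0001.png"),
    ("optical_0002.png", "sar_0002.png"),
    ("optical_0003.png", "sar_0003.png"),
]

samples = build_training_pairs(pairs)
for optical, sar, label in samples:
    print(optical, sar, label)
```

Mixing in mismatched pairs gives a model examples of what a non-match looks like, which a set of aligned pairs alone cannot provide.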

After training the BNN on the QXS-SAROPT dataset, the researchers tested the model on an optical-to-SAR cross-modal object detection task using four benchmark detection datasets of ships at sea and in port. They found that their technique achieved an accuracy of up to 96 percent.

The approach is far from limited to detecting boats or planes, though. It can be applied to interpretation tasks such as segmenting out and then checking the health of different crops, monitoring flood conditions, and even identifying buildings under construction in rapidly urbanizing areas to help quantify economic development in a region.

Moving forward, the research group hopes to further develop a deep learning framework to integrate all possible steps ('end to end') within a data processing workflow on board. This should automate the process of broad-area search for detecting, monitoring, and characterizing the progression of natural or human-caused events using time-series spectral imagery from several space-based sensors.

More information: Meiyu Huang et al, A Bridge Neural Network-Based Optical-SAR Image Joint Intelligent Interpretation Framework, Space: Science & Technology (2021). DOI: 10.34133/2021/9841456