Human body movements may enable automated emotion recognition, researchers say

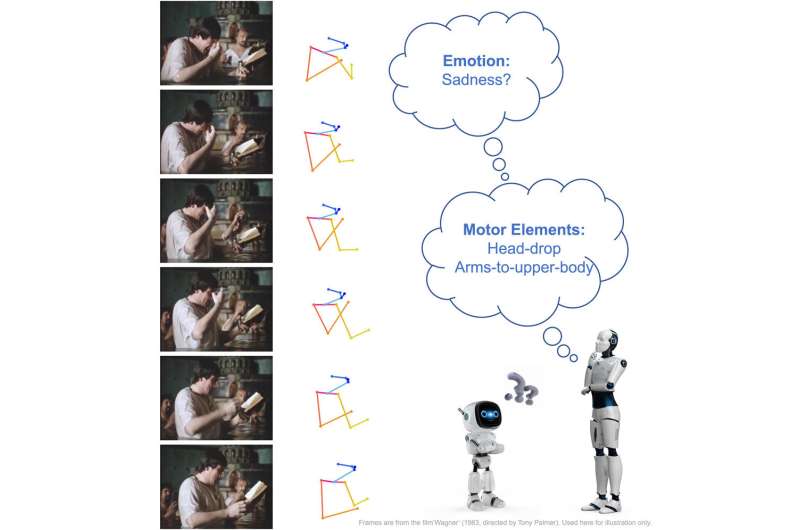

An individual may bring their hands to their face when feeling sad or jump into the air when feeling happy. Human body movements convey emotions and play a crucial role in everyday communication, according to a team led by Penn State researchers. Combining computing, psychology and performing arts, the researchers developed an annotated human movement dataset that may improve the ability of artificial intelligence to recognize the emotions expressed through body language.

The work was led by James Wang, distinguished professor in the College of Information Sciences and Technology (IST), and carried out primarily by Chenyan Wu, a graduating doctoral student in Wang's group. It was published Oct. 13 in the print edition of Patterns and was featured on the journal's cover.

"People often move using specific motor patterns to convey emotions and those body movements carry important information about a person's emotions or mental state," Wang said. "By describing specific movements common to humans using their foundational patterns, known as motor elements, we can establish the relationship between these motor elements and bodily expressed emotion."

According to Wang, augmenting machines' understanding of bodily expressed emotion may help enhance communication between assistive robots and children or elderly users; provide psychiatric professionals with quantitative diagnostic and prognostic assistance; and bolster safety by preventing mishaps in human-machine interactions.

"In this work, we introduced a novel paradigm for bodily expressed emotion understanding that incorporates motor element analysis," Wang said. "Our approach leverages deep neural networks—a type of artificial intelligence—to recognize motor elements, which are subsequently used as intermediate features for emotion recognition."

The team built a dataset of body motor elements, the movement patterns that indicate emotion, from 1,600 video clips of people. Each video clip was annotated using Laban Movement Analysis (LMA), a method and language for describing, visualizing, interpreting and documenting human movement.

Wu then designed a dual-branch, dual-task movement analysis network capable of using the labeled dataset to produce predictions for both bodily expressed emotion and LMA labels for new images or videos.
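To make the dual-task idea concrete, here is a minimal sketch in plain Python, not the team's implementation. It assumes two hypothetical input branches whose feature vectors are simply concatenated, one head that predicts LMA element labels, and a second head that predicts emotion labels from the shared features plus the LMA predictions, echoing Wang's description of motor elements as intermediate features. All names, layer sizes and the `lma_weight` parameter are illustrative assumptions; the article does not specify them.

```python
import math
import random


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_out, n_in, rng):
    """Small random weights and zero biases (illustrative only)."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def binary_cross_entropy(probs, targets):
    """Mean binary cross-entropy over independent labels."""
    eps = 1e-12
    total = sum(t * math.log(p + eps) + (1 - t) * math.log(1 - p + eps)
                for p, t in zip(probs, targets))
    return -total / len(probs)


class DualTaskSketch:
    """Hypothetical two-branch, two-head model (an assumption, not the paper's network)."""

    def __init__(self, n_a, n_b, n_lma, n_emotion, seed=0):
        rng = random.Random(seed)
        n_shared = n_a + n_b
        self.lma_head = init_layer(n_lma, n_shared, rng)
        # Assumption: the emotion head also sees the LMA predictions.
        self.emotion_head = init_layer(n_emotion, n_shared + n_lma, rng)

    def forward(self, feats_a, feats_b):
        shared = feats_a + feats_b  # list concatenation fuses the two branches
        lma = [sigmoid(z) for z in linear(shared, *self.lma_head)]
        emotion = [sigmoid(z) for z in linear(shared + lma, *self.emotion_head)]
        return lma, emotion


def joint_loss(lma_probs, lma_targets, emo_probs, emo_targets, lma_weight=1.0):
    """Emotion loss plus a weighted LMA loss; the weighting scheme is assumed."""
    return (binary_cross_entropy(emo_probs, emo_targets)
            + lma_weight * binary_cross_entropy(lma_probs, lma_targets))
```

Training both heads against one combined loss is a common way to let an easier auxiliary task (here, LMA labels) shape the features used by a harder one (emotion); whether the published network weights or fuses the tasks this way is not stated in the article.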

"Emotion and LMA element labels are related, and the LMA labels are easier for deep neural networks to learn," Wu said.

According to Wang, LMA makes it possible to study motor elements and emotions together while also yielding a "high-precision" dataset that demonstrates effective learning of human movement and emotional expression.

"Incorporating LMA features has effectively enhanced body-expressed emotion understanding," Wang said. "Extensive experiments using real-world video data revealed that our approach significantly outperformed baselines that considered only rudimentary body movement, showing promise for further advancements in the future."

More information: Chenyan Wu et al, Bodily expressed emotion understanding through integrating Laban movement analysis, Patterns (2023). DOI: 10.1016/j.patter.2023.100816