This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, proofread.

Orbital angular momentum-mediated machine learning for high-accuracy mode-feature encoding

ChatGPT, a product built on artificial neural networks, became enormously popular in 2023, setting the record as the fastest technology product to pass 100 million users. It is a large language model based on deep learning, which learns the rules of language from massive amounts of input text. Through pre-training, ChatGPT can handle a wide range of tasks, such as text translation, image recognition and natural language understanding.

Compared with electrons, photons offer major advantages in power efficiency, parallelism (computational capacity) and latency. For this reason, optical neural networks (ONNs) have recently been widely used to make machine learning more efficient.

In ONNs, information is carried by different physical dimensions of light, such as space, wavelength, amplitude and phase. Orbital angular momentum (OAM), a distinct dimension of light, is already widely used in optical information processing, from optical communication and digital spiral imaging to quantum communication, where the in-principle unlimited number of mutually orthogonal OAM states increases information capacity. However, OAM had never been used to represent information in ONNs, because there was no way to extract information features in the OAM domain.
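The orthogonality that makes OAM attractive can be checked numerically. The minimal sketch below samples only the azimuthal phase factor exp(iℓφ) of each mode around a ring and computes normalized overlaps between modes. The function names `oam_mode` and `overlap` are hypothetical, and the model ignores the radial structure of real beams.

```python
import cmath
import math


def oam_mode(l, n_samples=64):
    """Azimuthal phase profile exp(i*l*phi) of an OAM mode with topological
    charge l, sampled at n_samples equally spaced angles around a ring."""
    return [cmath.exp(1j * l * 2 * math.pi * k / n_samples) for k in range(n_samples)]


def overlap(a, b):
    """Normalized inner product <a|b> = (1/N) * sum(conj(a_k) * b_k)."""
    return sum(x.conjugate() * y for x, y in zip(a, b)) / len(a)


# Print |<l|m>| for a few charges: 1 on the diagonal, 0 elsewhere.
charges = range(-3, 4)
for l in charges:
    row = [abs(overlap(oam_mode(l), oam_mode(m))) for m in charges]
    print(l, " ".join(f"{v:.2f}" for v in row))
```

On a grid of N samples, charges that differ by a multiple of N become indistinguishable, so the "unlimited" set of orthogonal states holds only in the continuous limit.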

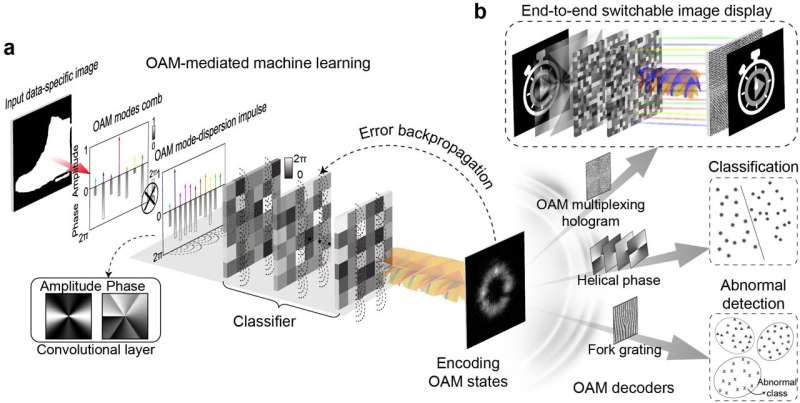

In a new paper published in Light: Science & Applications, a team of scientists led by Professor Min Gu and Professor Xinyuan Fang from the Institute of Photonic Chip at the University of Shanghai for Science and Technology has developed a new ONN architecture based on an OAM-mediated machine learning protocol, in which photonic OAM states serve as the signals carried by the network's nodes.

The data features of images are learned in the OAM domain, which enables high-precision intelligent encoding of each image into one or a few specific OAM states. This intelligent OAM encoding can be paired with different OAM detection or demultiplexing devices to perform a range of OAM information processing tasks, such as image classification, secure free-space image transmission with high throughput and low latency, and optical anomaly detection.

Learning image features in the OAM domain relies on a diffraction-based convolutional neural network (CNN) with two main parts. The first is a convolution block, which convolves the OAM mode spectrum of an image with a trainable OAM mode-dispersion impulse to densify the input OAM mode combs and extract the mode features.
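A convolution over the OAM mode index can be sketched as follows. This is a toy illustration, not the authors' implementation: spectra are stored as dictionaries from topological charge ℓ to a complex coefficient, and the function name and kernel values are hypothetical (in the real network the impulse is learned). As background, multiplying a field by an azimuthal transmission t(φ) = Σₘ hₘ e^{imφ} convolves its OAM spectrum with the coefficients hₘ, which is one way such an operation can arise optically; the paper's exact mechanism is not described here.

```python
def convolve_oam_spectrum(spectrum, impulse):
    """Discrete convolution over the OAM mode index.

    spectrum, impulse: dicts mapping topological charge l -> complex coefficient.
    Returns a dict c with c[l] = sum over m of spectrum[m] * impulse[l - m].
    """
    out = {}
    for m, a in spectrum.items():
        for d, h in impulse.items():
            out[m + d] = out.get(m + d, 0) + a * h
    return out


# A sparse input comb: power only in a few low-order modes (illustrative values).
comb = {-2: 0.3, 0: 1.0, 2: 0.3}
# Hypothetical "trainable" mode-dispersion impulse; real values would be learned.
impulse = {-1: 0.5, 0: 1.0, 1: 0.5j}

dense = convolve_oam_spectrum(comb, impulse)
for l in sorted(dense):
    print(l, round(abs(dense[l]), 3))
```

The three-mode input comb spreads over seven adjacent modes (ℓ = -3 to 3), which is the sense in which convolution "densifies" a sparse comb.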

The second is a classification block made of cascaded, finite-aperture trainable diffraction layers, which compresses the mode features. It reshapes the broad OAM spectrum (which now contains the image's mode features) by imposing different diffraction losses on different OAM modes, so that the output is concentrated in one or a few specific OAM states; this is the mode-feature OAM encoding. The mode-dispersion impulse and the phase distributions of the diffraction layers are trained jointly through multi-task learning, with the required output OAM modes as the target.
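The compression step can be pictured as a mode-dependent loss followed by renormalization. The sketch below is a toy model under strong assumptions: the whole cascade of diffraction layers is collapsed into a single hypothetical per-mode amplitude transmission, and its values are hand-picked rather than obtained through the multi-task training the paper describes.

```python
def apply_mode_loss(spectrum, transmission):
    """Scale each OAM coefficient by a mode-dependent amplitude transmission.
    Modes missing from `transmission` are treated as fully lost."""
    return {l: a * transmission.get(l, 0.0) for l, a in spectrum.items()}


def power_fractions(spectrum):
    """Fraction of the total optical power carried by each OAM mode."""
    total = sum(abs(a) ** 2 for a in spectrum.values())
    return {l: abs(a) ** 2 / total for l, a in spectrum.items()}


def top_modes(spectrum, k):
    """The k modes with the largest power, i.e. the 'encoded' OAM state(s)."""
    p = power_fractions(spectrum)
    return sorted(p, key=p.get, reverse=True)[:k]


# Broad spectrum (illustrative values) carrying the image's mode features.
broad = {-3: 0.15, -2: 0.3, -1: 0.52, 0: 1.0, 1: 0.52, 2: 0.3, 3: 0.15}
# Hypothetical transmission that favours mode l = +2 and suppresses the rest.
transmission = {-3: 0.05, -2: 0.1, -1: 0.1, 0: 0.1, 1: 0.2, 2: 1.0, 3: 0.3}

out = apply_mode_loss(broad, transmission)
print("encoded mode:", top_modes(out, 1))
print("power fraction in l=2:", round(power_fractions(out)[2], 3))
```

Although ℓ = 0 dominates the input, most of the output power ends up in ℓ = +2, mirroring how differential diffraction loss can steer the output toward a target mode.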

The scientists summarize the operating principle of their CNN: "The raw input data of images in the space domain must be transformed into the OAM domain, where they are represented as OAM mode combs. However, most of the non-zero amplitude coefficients in these mode combs are concentrated in the low-order OAM components, indicating sparse OAM information features with underlying commonalities. This is the main difficulty in extracting OAM feature information.

"Inspired by CNNs, which improve prediction accuracy by inserting a convolution-based feature-extraction block before classification, we constructed a CNN in the OAM domain to overcome the problem of precisely extracting OAM features. As a result, the CNN can greatly reduce the parametric complexity of high-dimensional data (such as images) after abstracting the OAM features of the input data in their raw form."
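The concentration of image content in low-order OAM components, described in the quote above, can be reproduced with a toy example. This sketch assumes the OAM spectrum is taken as an azimuthal Fourier transform of values sampled on a single ring, a standard decomposition but a simplification of a full two-dimensional treatment with radial structure. The "image" is a made-up bright stroke covering a quarter of the ring.

```python
import cmath
import math


def oam_spectrum(ring_samples, max_l):
    """Azimuthal DFT of values sampled uniformly on a ring:
    c_l = (1/N) * sum_k f(phi_k) * exp(-i*l*phi_k), for l in [-max_l, max_l]."""
    n = len(ring_samples)
    return {
        l: sum(f * cmath.exp(-1j * l * 2 * math.pi * k / n)
               for k, f in enumerate(ring_samples)) / n
        for l in range(-max_l, max_l + 1)
    }


# Toy "image" on a ring: bright over a quarter of the circle (illustrative only).
N = 360
ring = [1.0 if k < N // 4 else 0.0 for k in range(N)]

spec = oam_spectrum(ring, max_l=20)
total = sum(f * f for f in ring) / N  # Parseval: total power on the ring
low = sum(abs(spec[l]) ** 2 for l in range(-3, 4))
print(f"power in |l| <= 3: {low / total:.1%}")
```

Most of the power falls in the handful of modes with |ℓ| ≤ 3, even though the pattern has sharp edges, which illustrates why features sit close together in the OAM domain and are hard to separate without an extraction step.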

They tested the intelligent mode-feature OAM encoding on a classification task, mapping the 10 classes of handwritten digits in the MNIST database to 10 individual OAM modes; the classification accuracy reached 96%. The team also applied the encoding technique to a wireless optical communication system, encoding three classes of images from the Fashion-MNIST database (T-shirts, trousers and ankle boots) into the three OAM modes with the strongest weights, with a transmission accuracy of 93.3%.
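On the receiver side, one-mode-per-class encoding reduces classification to finding which OAM mode receives the most power. The sketch below shows that readout and the accuracy calculation. The digit-to-charge assignment and the detector readings are hypothetical; the charges actually used in the paper are not given in this article.

```python
# Hypothetical assignment of the 10 MNIST digit classes to 10 OAM charges.
DIGIT_TO_MODE = dict(zip(range(10), [-5, -4, -3, -2, -1, 1, 2, 3, 4, 5]))
MODE_TO_DIGIT = {m: d for d, m in DIGIT_TO_MODE.items()}


def decode(mode_powers):
    """Predict the digit whose assigned OAM mode received the most power."""
    best_mode = max(MODE_TO_DIGIT, key=lambda m: mode_powers.get(m, 0.0))
    return MODE_TO_DIGIT[best_mode]


def accuracy(measurements, labels):
    """Fraction of measurements whose decoded digit matches the true label."""
    correct = sum(decode(p) == y for p, y in zip(measurements, labels))
    return correct / len(labels)


# Made-up detector readings (power per OAM mode) for three test images.
readings = [
    {-5: 0.70, -4: 0.10, 3: 0.20},  # mostly l = -5 -> digit 0
    {2: 0.55, 3: 0.35, -1: 0.10},   # mostly l = +2 -> digit 6
    {4: 0.30, 5: 0.40, 1: 0.30},    # mostly l = +5 -> digit 9
]
true_labels = [0, 6, 8]
print([decode(r) for r in readings], accuracy(readings, true_labels))
```

For the Fashion-MNIST link, the same idea would compare the set of the three strongest modes rather than a single maximum.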

They found that the encoding method is highly resistant to eavesdropping: an eavesdropper is inevitably offset laterally from the intended receiver, and this offset directly corrupts the OAM mode measurement, so the eavesdropping fails. By further combining the mode-feature OAM encoding with an OAM-multiplexing hologram as the decoding system, they demonstrated an end-to-end switchable image display, a wireless communication link in which information encoding, transmission and display are all performed optically.
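The effect of lateral offset can be illustrated with a toy calculation. The sketch assumes a simple vortex beam model, (x + iy)^ℓ · exp(-(x² + y²)), and a measurement that decomposes the field sampled on a ring centred on the detector's own axis. The function names, beam model and ring radius are assumptions for illustration; real OAM sorters and hologram decoders differ in detail, but the sketch shows the well-known spreading of a pure OAM state into neighbouring modes when it is measured about a displaced axis.

```python
import cmath
import math


def vortex_field(x, y, l):
    """Toy OAM beam with charge l >= 0: (x + iy)^l * exp(-(x^2 + y^2)).
    Its phase winds as exp(i*l*phi) about the beam axis at the origin."""
    return (x + 1j * y) ** l * math.exp(-(x * x + y * y))


def mode_purity(l, offset, radius=1.0, n=256):
    """Fraction of power found in mode l when the field is analysed on a ring
    of the given radius centred at (offset, 0) instead of on the beam axis."""
    samples = []
    for k in range(n):
        phi = 2 * math.pi * k / n
        samples.append(vortex_field(offset + radius * math.cos(phi),
                                    radius * math.sin(phi), l))
    total = sum(abs(s) ** 2 for s in samples) / n
    c_l = sum(s * cmath.exp(-1j * l * 2 * math.pi * k / n)
              for k, s in enumerate(samples)) / n
    return abs(c_l) ** 2 / total


for dx in (0.0, 0.2, 0.5, 1.0):
    print(f"offset {dx:.1f}: purity of l=2 is {mode_purity(2, dx):.3f}")
```

An aligned receiver sees the transmitted mode with full purity, while even a modest offset leaks power into neighbouring charges, so an off-axis eavesdropper reads a scrambled mode distribution.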

Moreover, they demonstrated all-optical dimensionality reduction of images for anomaly detection. Here, "BUS" and "SUV" images were used to train the CNN so that each category produces its own specific distribution over superposed OAM mode states. When anomalous images (images belonging to neither category) are fed into the network, their OAM mode distributions differ from the "BUS" and "SUV" distributions, so they can be flagged with an anomaly label.
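The article does not say how "different" OAM distributions are quantified, so the following is only one plausible readout, sketched under explicit assumptions: the detected mode powers are normalized, compared with each trained reference distribution using total variation distance, and flagged as anomalous if no reference is within a hypothetical threshold of 0.3. The reference distributions are also made up.

```python
def normalize(powers):
    """Scale a dict of OAM mode powers so that they sum to 1."""
    total = sum(powers.values())
    return {l: p / total for l, p in powers.items()}


def tv_distance(p, q):
    """Total variation distance between two OAM power distributions
    (0 = identical, 1 = no overlapping modes)."""
    modes = set(p) | set(q)
    return 0.5 * sum(abs(p.get(l, 0.0) - q.get(l, 0.0)) for l in modes)


def classify_or_flag(powers, references, threshold=0.3):
    """Return the closest reference label, or 'ANOMALY' if none is close enough."""
    p = normalize(powers)
    label, dist = min(((name, tv_distance(p, ref)) for name, ref in references.items()),
                      key=lambda item: item[1])
    return label if dist <= threshold else "ANOMALY"


# Hypothetical target distributions learned for the two trained classes.
REFERENCES = {
    "BUS": {-2: 0.5, 1: 0.5},
    "SUV": {-1: 0.5, 3: 0.5},
}

print(classify_or_flag({-2: 0.45, 1: 0.50, 0: 0.05}, REFERENCES))
print(classify_or_flag({0: 0.6, 2: 0.4}, REFERENCES))
```

The first reading lies close to the "BUS" distribution, while the second overlaps with neither reference and is flagged. Any distance measure and threshold could be substituted; these choices only make the idea concrete.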

Discussing the physical meaning and future development of the technique, the scientists observe, "We propose a universal mechanism for converting data features into OAM states through all-optical machine learning, which can achieve free transformation of any information in the OAM dimension. It opens a new door to intelligent OAM encoding of specific databases and images at the speed of light and breaks the bottleneck of optical dimensionality reduction in the OAM domain, which is expected to play a major role in future high-capacity, high-security optical neural networks for various machine vision tasks."

More information: Xinyuan Fang et al, Orbital angular momentum-mediated machine learning for high-accuracy mode-feature encoding, Light: Science & Applications (2024). DOI: 10.1038/s41377-024-01386-5