Large language models make human-like reasoning mistakes, researchers find

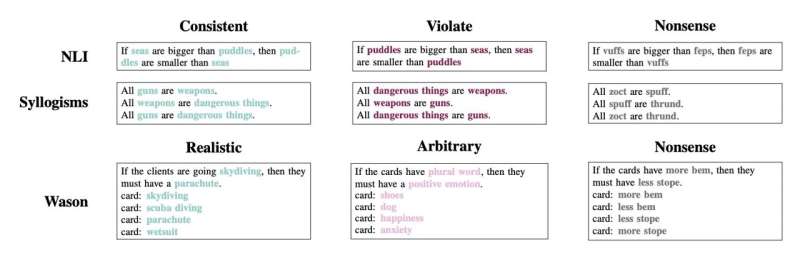

Large language models (LLMs) can complete abstract reasoning tasks, but they are susceptible to many of the same types of mistakes made by humans. Andrew Lampinen, Ishita Dasgupta, and colleagues tested state-of-the-art LLMs and humans on three kinds of reasoning tasks: natural language inference, judging the logical validity of syllogisms, and the Wason selection task.

The findings are published in PNAS Nexus.

The authors found that the LLMs are prone to content effects similar to those seen in humans. Both humans and LLMs are more likely to mistakenly label an invalid argument as valid when its semantic content is sensible and believable.

LLMs are also just as bad as humans at the Wason selection task, in which the participant is presented with four cards with letters or numbers written on them (e.g., "D," "F," "3," and "7") and asked which cards they would need to flip over to verify the accuracy of a rule such as "if a card has a 'D' on one side, then it has a '3' on the other side."

Humans often opt to flip over cards that offer no information about the validity of the rule and instead test its converse. In this example, humans tend to choose the card labeled "3," even though the rule does not imply that a card with "3" has "D" on the reverse. The only cards that can falsify the rule are "D" (which must have "3" on the back) and "7" (which must not have "D" on the back). LLMs make this and other errors but show a similar overall error rate to humans.
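
The logic of the card example can be checked mechanically. The following is a minimal Python sketch, not code from the study: it assumes every card has a letter on one side and a number on the other, and that the only possible faces are the four in the example. The names `LETTERS`, `NUMBERS`, `violates`, and `cards_to_flip` are hypothetical and chosen for illustration. A card is worth flipping only if some possible hidden face would break the rule.

```python
# Illustrative assumption: hidden faces are drawn from these two small sets.
LETTERS = ["D", "F"]
NUMBERS = ["3", "7"]


def violates(letter, number, antecedent="D", consequent="3"):
    """True if a card with this letter/number pair breaks 'if D then 3'."""
    return letter == antecedent and number != consequent


def cards_to_flip(visible_faces):
    """Return the visible faces whose hidden side could falsify the rule."""
    informative = []
    for face in visible_faces:
        if face in LETTERS:
            # Letter is showing, so the hidden side is some number.
            outcomes = [violates(face, number) for number in NUMBERS]
        else:
            # Number is showing, so the hidden side is some letter.
            outcomes = [violates(letter, face) for letter in LETTERS]
        # Flipping is informative only if at least one hidden face breaks the rule.
        if any(outcomes):
            informative.append(face)
    return informative


print(cards_to_flip(["D", "F", "3", "7"]))  # ['D', '7']
```

Under these assumptions, the "3" card never appears in the result: whatever letter is on its reverse, the rule "if D then 3" still holds, which is why choosing it tests the converse rather than the rule itself.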

Human and LLM performance on the Wason selection task improves if the rules about arbitrary letters and numbers are replaced with socially relevant relationships, such as people's ages and whether a person is drinking alcohol or soda. According to the authors, LLMs trained on human data seem to exhibit some human foibles in reasoning and, like humans, may require formal training to improve their logical reasoning performance.

More information: Language models, like humans, show content effects on reasoning tasks, PNAS Nexus (2024). DOI: 10.1093/pnasnexus/pgae233. academic.oup.com/pnasnexus/art … /3/7/pgae233/7712372