July 1, 2015 weblog

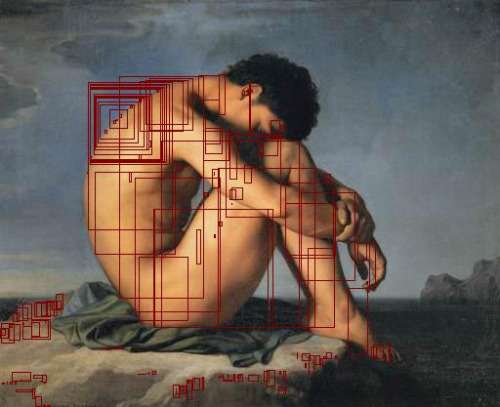

Algorithm detects nudity in images, offers demo page

An algorithm has been designed to tell whether somebody in a color photo is naked. Isitnude.com launched earlier this month; its demo page invites you to test its nudity detection for yourself. You can choose from a selection of images at the bottom of the page, including pictures of Vladimir Putin on horseback and Tiger Woods in golf mode. We tried it out, dragging and dropping a picture of Woods into the box, and the page promptly responded with "Not nude-G." and "You can probably post this."

Other notes on the page include "We apologize if we didn't get it right, we are improving every day" and "Please note that we cannot detect black and white images."

(Algorithmia does not retain images.)

The company behind this effort, Algorithmia, was founded in 2013 to advance algorithm development and use. "As developers ourselves we believe that given the right tools the possibilities for innovation and discovery are limitless."

The company said it is building "what we believe to be the next era of programming: a collaborative, always live and community driven approach to making the machines that we interact with better." The community-driven API exposes "the collective knowledge of algorithm developers across the globe."

"We're building a community around state-of-the-art algorithm development. Users can create, share, and build on other algorithms and then instantly make them available as a web service."

Lucy Black in I Programmer noted the practical advantage for developers. "The idea behind Algorithmia is that where an algorithm already exists you don't need to code your own, instead you can simply paste in its functionality using its cloud-based API."

In his story about Algorithmia and the demo, Brian Barrett in Wired said, "His company is an algorithmic clearing house, taking computational solutions from academia and beyond and offering them to the world at large for a fee."

Why the interest in detecting nudity in photographs?

"A customer came to us trying to run a site that needs to be kid-friendly," said Algorithmia CTO Kenny Daniel in Wired. The customer wanted the ability to screen images with some confidence that they would not be pornographic. Daniel said, "Anybody who's trying to run a community but wants to filter out objectionable content, or keep it kid-friendly, could benefit from this same algorithm."

On the company blog this month, Algorithmia also discussed its rationale for enabling artificial intelligence to detect nudity.

"If there's one thing the internet is good for, it's racy material," said the blog. "This is a headache for a number of reasons, including a) it tends to show up where you'd rather it wouldn't, like forums, social media, etc. and b) while humans generally know it when we see it, computers don't so much. We here at Algorithmia decided to give this one a shot."

To give it a shot, they turned to various sources. The result is based on an algorithm by Hideo Hattori and on a paper by Rigan Ap-apid of De La Salle University. In that paper, "An Algorithm for Nudity Detection," Ap-apid presented an algorithm for detecting nudity in color images.

He said, "A skin color distribution model based on the RGB, Normalized RGB, and HSV color spaces is constructed using correlation and linear regression. The skin color model is used to identify and locate skin regions in an image. These regions are analyzed for clues indicating nudity or non-nudity such as their sizes and relative distances from each other. Based on these clues and the percentage of skin in the image, an image is classified nude or non-nude."
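To make the skin-percentage idea concrete, here is a minimal Python sketch. It is not Ap-apid's model: the thresholds in `is_skin` are illustrative assumptions rather than values from the paper, the function names are hypothetical, and the analysis of region sizes and distances is left out. It only shows how a per-pixel skin test across RGB, normalized RGB, and HSV can feed a decision based on the share of skin pixels.

```python
import colorsys


def is_skin(r, g, b):
    """Rough skin test for one 8-bit RGB pixel.

    All thresholds are illustrative assumptions, not the paper's fitted model.
    """
    total = r + g + b
    if total == 0:
        return False  # pure black carries no color information

    # Rule 1: plain RGB. Skin tends to be red-dominant with some spread.
    rgb_rule = (
        r > 95 and g > 40 and b > 20
        and r > g and r > b
        and max(r, g, b) - min(r, g, b) > 15
    )

    # Rule 2: normalized RGB. The red share should exceed that of neutral gray.
    norm_rule = (r / total) > 0.36

    # Rule 3: HSV. Hue near red/orange, moderate saturation, not too dark.
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hsv_rule = (h <= 50 / 360 or h >= 340 / 360) and 0.1 <= s <= 0.8 and v >= 0.35

    return rgb_rule and norm_rule and hsv_rule


def skin_ratio(pixels):
    """Fraction of pixels classified as skin; pixels is a list of (r, g, b)."""
    if not pixels:
        return 0.0
    skin = sum(1 for r, g, b in pixels if is_skin(r, g, b))
    return skin / len(pixels)


def classify(pixels, threshold=0.3):
    """Label an image from its skin percentage alone (threshold is assumed)."""
    return "possibly nude" if skin_ratio(pixels) >= threshold else "not nude"


if __name__ == "__main__":
    # Four skin-toned pixels and six blue ones stand in for a tiny image.
    sample = [(224, 172, 105)] * 4 + [(30, 60, 200)] * 6
    print(skin_ratio(sample), classify(sample))
```

A test that leans only on color like this one would also flag a beach portrait or a close-up of a face, which is the weakness the blog goes on to describe.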

Meanwhile, plenty of images with lots of skin are "perfectly innocent," said the blog. "You might say that leaning too much on just color leaves the method, well, tone-deaf. To do better, you need to combine skin detection with other tricks."

Brian Barrett in Wired said, "To help weed out false positives, Algorithmia added a few layers of intelligence."

The blog stated, "Our contribution to this problem is to detect other features in the image and using these to make the previous method more fine-grained."

To come up with the algorithm, they turned to the book Human Computer Interaction Using Hand Gestures by Prashan Premaratne and to OpenCV's nose detection and face detection algorithms.
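One way a face detector could refine a skin-percentage score is sketched below in Python. This is an assumption for illustration, not Algorithmia's published method: it takes a precomputed skin mask and face rectangles in the (x, y, w, h) layout that OpenCV's cascade detectors return, then measures skin only outside the faces, so a close-up portrait is not penalized for the skin of the face itself. The function name is hypothetical.

```python
def skin_ratio_outside_faces(skin_mask, face_boxes):
    """Fraction of non-face pixels that are flagged as skin.

    skin_mask:  list of rows of booleans (True means a skin pixel).
    face_boxes: iterable of (x, y, w, h) rectangles in pixel coordinates.
    """
    height = len(skin_mask)
    width = len(skin_mask[0]) if height else 0

    # Mark every pixel covered by a face rectangle, clipped to the image.
    in_face = [[False] * width for _ in range(height)]
    for x, y, w, h in face_boxes:
        for row in range(max(0, y), min(height, y + h)):
            for col in range(max(0, x), min(width, x + w)):
                in_face[row][col] = True

    skin = 0
    considered = 0
    for row in range(height):
        for col in range(width):
            if in_face[row][col]:
                continue  # face skin is expected, so it is not counted
            considered += 1
            if skin_mask[row][col]:
                skin += 1

    return skin / considered if considered else 0.0


if __name__ == "__main__":
    # 4x4 toy image whose only skin is a 2x2 "face" in the top-left corner.
    mask = [
        [True, True, False, False],
        [True, True, False, False],
        [False, False, False, False],
        [False, False, False, False],
    ]
    print(skin_ratio_outside_faces(mask, []))              # no face information
    print(skin_ratio_outside_faces(mask, [(0, 0, 2, 2)]))  # face excluded
```

In the toy image, a quarter of the pixels are skin when no face is known, and none are once the face rectangle is excluded. That is the kind of adjustment that could keep a harmless headshot from being flagged.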

As I Programmer said, the algorithm is still a work in progress, and Algorithmia remains interested in further improvements. "There are countless techniques that can be used in place of or combined with the ones we've used to make an even better solution. For instance, you could train a convolutional neural network on problematic images," the company said.

More information: isitnude.com/

© 2015 Tech Xplore