February 16, 2016 report

Study suggests humans and computers use different processes to identify objects visually

(Tech Xplore) - A small team of researchers from the U.S. and Israel has found, via an experimental study, that despite progress in getting computers to recognize images, computers still do not use the same types of processes as humans. In their paper published in Proceedings of the National Academy of Sciences, the team describes the study, its results, and what they believe they learned about how humans identify the objects they see.

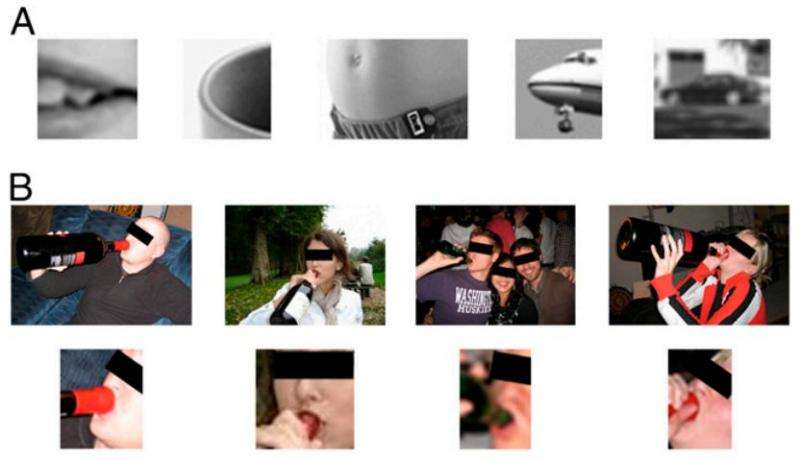

To get computers to recognize an object, many similar images are scanned and put into a database. When attempting to identify a new object, the computer simply compares it with items (or parts of them) in the database. Some have suggested that humans do the same when looking at the world around them, while others believe the process is quite different; after all, humans still do a much better job of it. To better understand why, the researchers behind this new effort set up an experiment in which a large number of volunteers looked at images that were small or blurry, to learn more about how the brain processes images.
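As a toy illustration of the "compare it with items in the database" idea, here is a minimal Python sketch. It is an assumption-laden simplification, not the models tested in the study: the three-number feature lists, the labels, and the function names `distance` and `identify` are all hypothetical.

```python
import math

def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def identify(query, database):
    """Return the label of the stored example closest to the query."""
    label, _ = min(database, key=lambda item: distance(query, item[1]))
    return label

# Hypothetical 3-number "features" standing in for real image data.
database = [
    ("airplane", [0.9, 0.1, 0.3]),
    ("bicycle", [0.2, 0.8, 0.5]),
    ("eagle", [0.7, 0.3, 0.9]),
]

# A new, unlabeled example is assigned the label of its nearest neighbor.
print(identify([0.85, 0.15, 0.35], database))
```

A lookup like this depends entirely on how close the new example is to something already stored, which is the bottom-up behavior the article describes.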

The database method used by computers is considered a bottom-up approach to image identification, whereas using some other means to reason about or deduce what an image shows would be considered a top-down approach. If people could still make out an airplane in a very fuzzy image, for example, the researchers reasoned, that would suggest we use some additional form of intelligence to figure out what the things around us are, even if we have never seen them before. To that end, the researchers created a project on Amazon's Mechanical Turk, where workers are paid small amounts of money to do small jobs. In this case, the job was to identify objects in pictures that varied in size or blurriness.

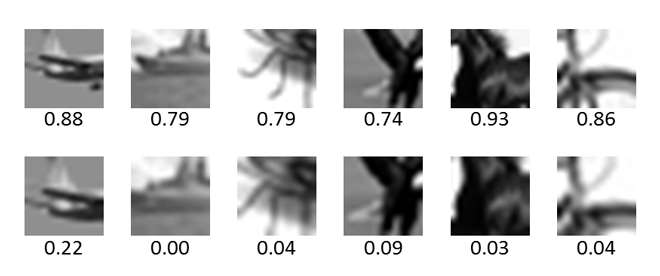

Over 14,000 workers responded, viewing 3,553 image patches. The team then had a computer try to identify the same images and compared the results. They report that the humans were much better at it, as expected. They also found that humans have a drop-off point: once a certain threshold of blurriness was reached, very few could identify the object. Computers, on the other hand, had no drop-off point, which the researchers suggest means that humans and computers use different processes to identify images. They suspect humans use both bottom-up and top-down approaches, which means that computers will have to be taught to do the same if they are ever to achieve the same level of image identification expertise.
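One way to picture a drop-off point is to look for the largest fall in recognition rate between neighboring blur levels. The Python sketch below does that under stated assumptions: the function name `largest_drop` is hypothetical, and both lists of rates are invented for illustration and are not the study's data.

```python
def largest_drop(rates):
    """Return (index, size) of the biggest fall between consecutive levels.

    `rates` lists recognition rates ordered from sharpest to blurriest.
    Index i means the drop happened between level i and level i + 1.
    """
    drops = [rates[i] - rates[i + 1] for i in range(len(rates) - 1)]
    index = max(range(len(drops)), key=lambda i: drops[i])
    return index, drops[index]

# Made-up numbers for illustration only; these are not the study's data.
with_cliff = [0.90, 0.88, 0.85, 0.20, 0.15]   # sharp fall at one level
gradual = [0.60, 0.50, 0.40, 0.30, 0.20]      # steady decline, no cliff

print(largest_drop(with_cliff))
print(largest_drop(gradual))
```

In the first list one step accounts for most of the total decline, which is the kind of pattern a drop-off point would produce; in the second, no single step stands out.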

More information: Atoms of recognition in human and computer vision, PNAS, www.pnas.org/cgi/doi/10.1073/pnas.1513198113

Abstract

Discovering the visual features and representations used by the brain to recognize objects is a central problem in the study of vision. Recently, neural network models of visual object recognition, including biological and deep network models, have shown remarkable progress and have begun to rival human performance in some challenging tasks. These models are trained on image examples and learn to extract features and representations and to use them for categorization. It remains unclear, however, whether the representations and learning processes discovered by current models are similar to those used by the human visual system. Here we show, by introducing and using minimal recognizable images, that the human visual system uses features and processes that are not used by current models and that are critical for recognition. We found by psychophysical studies that at the level of minimal recognizable images a minute change in the image can have a drastic effect on recognition, thus identifying features that are critical for the task. Simulations then showed that current models cannot explain this sensitivity to precise feature configurations and, more generally, do not learn to recognize minimal images at a human level. The role of the features shown here is revealed uniquely at the minimal level, where the contribution of each feature is essential. A full understanding of the learning and use of such features will extend our understanding of visual recognition and its cortical mechanisms and will enhance the capacity of computational models to learn from visual experience and to deal with recognition and detailed image interpretation.

© 2016 Tech Xplore