Making data-driven 3D modeling easier

A new computational method, to be demonstrated at SIGGRAPH 2017, addresses a well-known bottleneck in computer graphics: 3D content creation, a complex and tedious process. GRASS, a new generative model based on deep neural networks and developed by a research team led by the National University of Defense Technology (NUDT), enables the automatic creation of plausible, novel 3D shapes. It gives graphic artists in video games, virtual reality (VR) and film the ability to create and explore multiple 3D shapes more quickly and with less effort on the way to a final product.

This new method uses machine-learning techniques and artificial intelligence to eliminate the burden of generating multiple 3D shapes by hand.

The research paper, "GRASS: Generative Recursive Autoencoders for Shape Structures," is coauthored by researchers from Adobe Research, IIT Bombay, NUDT, Simon Fraser University and Stanford University. The authors are set to showcase their work at SIGGRAPH 2017 in Los Angeles, 30 July to 3 August. The SIGGRAPH conference is known for the spotlight it shines annually on the most innovative work in computer graphics research and interactive techniques worldwide.

"The time-consuming process of 3D content creation prevents computer graphics from being as ubiquitous as we had hoped," says Kai (Kevin) Xu, a coauthor of the paper, associate professor of computer science at NUDT and soon-to-be visiting professor at Princeton University. "Our work is a data-driven automatic shape generation computational method. Given a set of example 3D shapes, our task is to generate multiple shapes of one object class, automatically. For example, given a set of chairs, our method can create more chairs but with different geometric structures, and do so quickly and simply, allowing even a novice user to utilize our method."

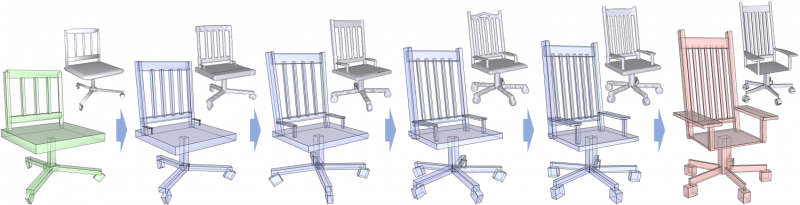

Generating new 3D shapes is challenging. Shapes are typically constructed part by part, following a bottom-up, hierarchical approach to assembly. This is how 3D artists currently model 3D shapes, using popular software programs such as 3D Studio Max and Blender.

In this research, the team mimics the process of 3D shape generation with a recursive autoencoder for shape structures, which replaces the tedious part-by-part steps with sampling from a deep generative model. GRASS learns to encode 3D shapes and then generate novel ones. A specific challenge the project had to address is that shape variability can manifest itself in both discrete ways (e.g., the number of spokes at the base of a swivel chair) and continuous ways (e.g., the precise shape of these spokes). The GRASS network successfully integrates both kinds of variability into a principled framework and is able to construct 3D shapes with the right overall structure, including symmetries and part arrangements. In a major advance over prior work, it achieves this without limiting the number of possible parts in a shape, and without being told which part is which. Essentially, GRASS has the ability to determine which part of a chair is the back and which part is the leg.
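To make the two kinds of variability concrete, here is a small hand-written Python sketch of the swivel-chair example. It is only an illustration and not the GRASS model: the `SwivelBase` type, the value ranges and the uniform sampling are all assumptions chosen for this example, whereas GRASS learns its distribution from example shapes.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class SwivelBase:
    n_spokes: int        # discrete variation: how many spokes
    spoke_length: float  # continuous variation: how long each spoke is


def sample_base(rng: random.Random) -> SwivelBase:
    """Draw one base from a made-up distribution (assumed ranges)."""
    return SwivelBase(
        n_spokes=rng.randint(3, 6),
        spoke_length=rng.uniform(0.25, 0.40),
    )


def spoke_endpoints(base: SwivelBase) -> list:
    """Place spoke tips by rotational symmetry around the vertical axis."""
    step = 2 * math.pi / base.n_spokes
    return [
        (base.spoke_length * math.cos(k * step),
         base.spoke_length * math.sin(k * step))
        for k in range(base.n_spokes)
    ]


rng = random.Random(0)
base = sample_base(rng)
tips = spoke_endpoints(base)
```

Changing `n_spokes` changes the structure of the shape, while changing `spoke_length` only changes its geometry. A generative model for shape structures has to cover both.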

The core ideas that led to GRASS were born out of two questions the researchers had been asking themselves for years. The first is whether 3D shapes can be transformed into and generated through genetic codes mimicking human DNA. Fittingly, the first code name for the project was "Shape DNA". GRASS learns to encode an arbitrarily complex 3D shape into a fixed set of parameters, and regenerate it from those parameters. The second question is how to best represent 3D shapes for computer-aided synthesis. The team eventually settled on a structural representation, describing a 3D shape as an organized hierarchy of its constituent parts.
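The idea of folding a part hierarchy into a fixed-size code can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the authors' network: the `Part` and `Group` types, the six-number box description, the code size of 8 and the random, untrained weights are all hypothetical, and the sketch covers only the encoding direction.

```python
import math
import random
from dataclasses import dataclass
from typing import List

CODE_SIZE = 8  # assumed length of the fixed-size code


@dataclass
class Part:
    name: str
    box: List[float]  # assumed description: center (x, y, z) + size (w, h, d)


@dataclass
class Group:
    children: list  # non-empty list of Part or Group nodes


def _matrix(rows: int, cols: int, rng: random.Random) -> list:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def _apply(matrix: list, vec: list) -> list:
    # One dense layer with tanh activation.
    return [math.tanh(sum(w * x for w, x in zip(row, vec))) for row in matrix]


class RecursiveEncoder:
    """Untrained toy encoder: random weights, for shape only."""

    def __init__(self, seed: int = 0):
        rng = random.Random(seed)
        self.leaf = _matrix(CODE_SIZE, 6, rng)               # box -> code
        self.merge = _matrix(CODE_SIZE, 2 * CODE_SIZE, rng)  # two codes -> one

    def encode(self, node) -> list:
        if isinstance(node, Part):
            return _apply(self.leaf, node.box)
        # Encode children bottom-up, then merge their codes pairwise.
        codes = [self.encode(child) for child in node.children]
        code = codes[0]
        for nxt in codes[1:]:
            code = _apply(self.merge, code + nxt)
        return code


seat = Part("seat", [0.0, 0.5, 0.0, 0.4, 0.05, 0.4])
legs = Group([Part("leg", [x, 0.25, z, 0.04, 0.5, 0.04])
              for x in (-0.18, 0.18) for z in (-0.18, 0.18)])
back = Part("back", [0.0, 0.8, -0.18, 0.4, 0.5, 0.04])

stool = Group([seat, legs])
chair = Group([back, Group([seat, legs])])

encoder = RecursiveEncoder()
stool_code = encoder.encode(stool)
chair_code = encoder.encode(chair)
```

The stool and the chair have different numbers of parts and different hierarchies, yet both end up as codes of length `CODE_SIZE`. In a trained autoencoder a matching decoder would expand such a code back into a hierarchy of parts.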

With GRASS, "Everything is driven implicitly by the examples, or learned from data," notes Xu.

Computational methods like GRASS could one day transform the video game, film, computer-aided design (CAD) and VR industries. For example, designers building a new character for an animated film have to consider a great variety of alternative designs before arriving at the final one. A computational design tool like GRASS, with its ability to learn and generate shapes, could automate the process of producing plausible design options. The learned model could also help designers efficiently explore the design space.

In addition to Xu, the research team includes Jun Li, also from the National University of Defense Technology; Siddhartha Chaudhuri, assistant professor of computer science at IIT Bombay; Ersin Yumer, research scientist at Adobe Research; Hao Zhang, professor of computing science at Simon Fraser University; and Leonidas Guibas, professor of computer science at Stanford University. Zhang worked on the project as a visiting professor in Guibas' lab at Stanford during his academic sabbatical.

More information: kevinkaixu.net/projects/grass.html