Eagle-eyed algorithm outdoes human experts

Artificial intelligence is now so smart that silicon brains frequently outthink humans.

When artificial intelligence pairs up with machine vision, computers can accomplish seemingly incredible tasks—think of Tesla's self-driving cars or Facebook's uncanny ability to pick out people's faces in photos.

Beyond its utility as a helpful social media tool, advanced image processing someday could help doctors quickly identify cancerous cells in images from biopsy samples or enable scientists to evaluate how well certain materials withstand conditions in a nuclear power reactor.

"Machine learning has great potential to transform the current human-involved approach of image analysis in microscopy," says Wei Li, who earned his master's degree in materials science and engineering from the University of Wisconsin-Madison in 2018.

Given that many problems in materials science are image-based, yet few researchers have expertise in machine vision, a major research bottleneck is image recognition and analysis. As a student, Li realized that he could leverage training in the latest computational techniques to help bridge the gap between artificial intelligence and materials science research.

With collaborators that included Kevin Field, a staff scientist at Oak Ridge National Laboratory, Li used machine learning to quickly and consistently detect and analyze microscopic-scale radiation damage to materials under consideration for nuclear reactors.

In the team's tests, the computer bested human experts at this arduous task.

The researchers described their approach in a paper published July 18, 2018, in the journal npj Computational Materials.

Machine learning uses statistical methods to guide computers toward improving their performance on a task without receiving any explicit guidance from a human. Essentially, machine learning teaches computers to teach themselves.

"In the future, I believe images from many instruments will pass through a machine learning algorithm for initial analysis before being considered by humans," says Dane Morgan, a professor of materials science and engineering at UW-Madison and Li's advisor.

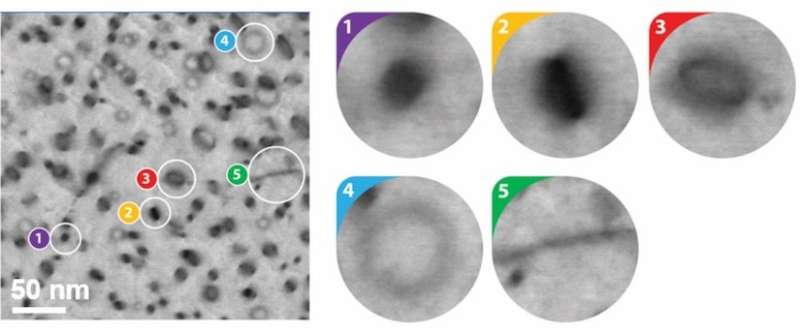

The researchers targeted machine learning as a means to rapidly sift through electron microscopy images of materials that had been exposed to radiation and identify a specific type of damage—a challenging task because the photographs can resemble a cratered lunar surface or a splatter-painted canvas.

Automating that task, which is critical to developing safe nuclear materials, could make a time-consuming process much more efficient and effective.

"Human detection and identification is error prone, inconsistent and inefficient. Perhaps most importantly, it's not scalable," says Morgan. "Newer imaging technologies are outstripping human capabilities to analyze the data we can produce."

Previously, image-processing algorithms depended on human programmers to provide explicit descriptions of an object's identifying features. Teaching a computer to recognize something simple like a stop sign might involve lines of code describing a red octagonal object.
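To make the older, rule-based style concrete, here is a minimal sketch of what "lines of code describing a red octagonal object" might look like. The `Shape` type, the color thresholds, and the assumption that an earlier step has already isolated the object and counted its corners are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Shape:
    """A hypothetical, already-segmented object: average color plus corner count."""
    mean_rgb: Tuple[int, int, int]  # (red, green, blue), each 0-255
    corners: int


def looks_like_stop_sign(shape: Shape) -> bool:
    """Hand-written rule: the object must be mostly red and have eight corners."""
    r, g, b = shape.mean_rgb
    is_red = r > 150 and g < 80 and b < 80  # illustrative thresholds, not tuned values
    is_octagon = shape.corners == 8
    return is_red and is_octagon


print(looks_like_stop_sign(Shape((200, 30, 40), 8)))  # red octagon
print(looks_like_stop_sign(Shape((200, 30, 40), 3)))  # red triangle
```

The first call prints `True` and the second prints `False`. Every condition here had to be spelled out by a person, which is exactly what becomes impractical for objects that are harder to describe.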

More complex, however, is articulating all of the visual cues that signal something is, for example, a cat. Fuzzy ears? Sharp teeth? Whiskers? A variety of critters have those same characteristics.

Machine learning now takes a completely different approach.

"It's a real change of thinking. You don't make rules—you let the computer figure out what the rules should be," says Morgan.

Today's machine learning approaches to image analysis often use programs called neural networks that seem to mimic the remarkable layered pattern-recognition powers of the human brain. To teach a neural network to recognize a cat, for example, scientists simply "train" the program by providing a collection of accurately labeled pictures of various cat breeds. The neural network takes over from there, building and refining its own set of guidelines for the most important features.
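The idea of learning from labeled examples can be sketched with a single artificial neuron, called a perceptron. This is a far simpler stand-in for the neural networks described here, not the researchers' model. The two numeric features (ear pointiness and whisker length, each scaled from 0 to 1) and the four training examples are invented for illustration.

```python
def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Learn weights from (features, label) pairs, where label is 1 or 0."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            score = sum(w * x for w, x in zip(weights, features)) + bias
            predicted = 1 if score > 0 else 0
            error = label - predicted  # 0 when the guess was right
            # Nudge the weights toward the correct answer only after a mistake.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(model, features):
    """Return 1 ("cat") or 0 ("not a cat") for one feature vector."""
    weights, bias = model
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


# Hypothetical labeled data: (ear pointiness, whisker length) -> 1 for cat, 0 otherwise.
labeled = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.2, 0.1), 0),
    ((0.1, 0.3), 0),
]

model = train_perceptron(labeled)
print(predict(model, (0.85, 0.7)))   # an unseen, cat-like example
print(predict(model, (0.15, 0.2)))   # an unseen, not-cat-like example
```

No rule about ears or whiskers appears anywhere in the code. The program only adjusts its weights when it mislabels a training example, and the resulting weights are the "guidelines" it has worked out for itself.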

Similarly, Morgan and colleagues taught a neural network to recognize a specific type of radiation damage called dislocation loops, which are among the most common yet most challenging defects to identify and quantify, even for a human with decades of experience.

After training with 270 images, the neural network, combined with another machine learning algorithm called a cascade object detector, correctly identified and classified roughly 86 percent of the dislocation loops in a set of test pictures. For comparison, human experts found 80 percent of the defects.
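A figure like "86 percent of the loops" is a fraction of labeled defects that the detector managed to find. The sketch below shows one common way such a fraction can be computed; it is an assumption, not the scoring procedure from the paper. It treats each defect as a bounding box `(x1, y1, x2, y2)`, counts a labeled box as found when a detection overlaps it by at least 50 percent (intersection over union), and uses made-up coordinates.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    overlap_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


def fraction_found(labeled_boxes, detections, threshold=0.5):
    """Fraction of labeled boxes matched by a detection; each detection is used once."""
    unused = list(detections)
    found = 0
    for box in labeled_boxes:
        # Greedy matching: take the best-overlapping detection still available.
        best = max(unused, key=lambda d: iou(box, d), default=None)
        if best is not None and iou(box, best) >= threshold:
            found += 1
            unused.remove(best)
    return found / len(labeled_boxes) if labeled_boxes else 0.0


# Hypothetical example: three labeled loops, three detections, one of them a miss.
labeled_boxes = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
detections = [(1, 1, 10, 10), (21, 19, 31, 29), (70, 70, 80, 80)]

print(f"{fraction_found(labeled_boxes, detections):.0%} of labeled loops found")
```

Here two of the three labeled boxes are matched, so the score is about 67 percent. The same calculation can be applied to boxes drawn by a human expert, which is what allows a side-by-side comparison like the one reported above.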

"When we got the final result, everyone was surprised," says Field. "Not only by the accuracy of the approach, but the speed. We can now detect these loops like humans while doing it in a fraction of time on a standard home computer."

After he graduated, Li took a job with Google. But the research is ongoing: Currently, Morgan and Field are working to expand their training data set and teach a new neural network to recognize different kinds of radiation defects. Eventually, they envision creating a massive cloud-based resource for materials scientists around the world to upload images for near-instantaneous analysis.

"This is just the beginning," says Morgan. "Machine learning tools will help create a cyber infrastructure that scientists can utilize in ways we are just beginning to understand."

More information: Wei Li et al. Automated defect analysis in electron microscopic images, npj Computational Materials (2018). DOI: 10.1038/s41524-018-0093-8