June 6, 2019 feature

Evolving neural networks with a linear growth in their behavior complexity

Evolutionary algorithms (EAs) are designed to replicate the behavior and evolution of biological organisms while solving computing problems. In recent years, many researchers have developed EAs and used them to tackle a variety of optimization tasks.

Past studies have also explored the use of these algorithms for learning the topology and connection weights of neural networks that power robots or virtual agents. When applied in this context, EAs could have numerous advantages, for instance enhancing the performance of artificial intelligence (AI) agents and improving our current understanding of biological systems.

So far, however, real-world evolutionary robotics applications have been scarce, with very few studies succeeding in producing complex behaviors using EAs. Researchers at Nottingham Trent University and the Max Planck Institute for Mathematics in the Sciences have recently developed a new approach to evolve neural networks with a sustained linear growth in the complexity of their behavior.

"If we want a sustained linear growth of complexity during evolution, we must ensure that the properties of the environment in which evolution takes place, including the population structure and the properties of the neural networks that are relevant to the applied mutation operators, remain constant on average over evolutionary time," the researchers explained in their paper. "Freezing old network structures is one method that helps in achieving this, but as the investigations presented here will show, it is not sufficient on its own and not even the major contributor towards achieving the goal."

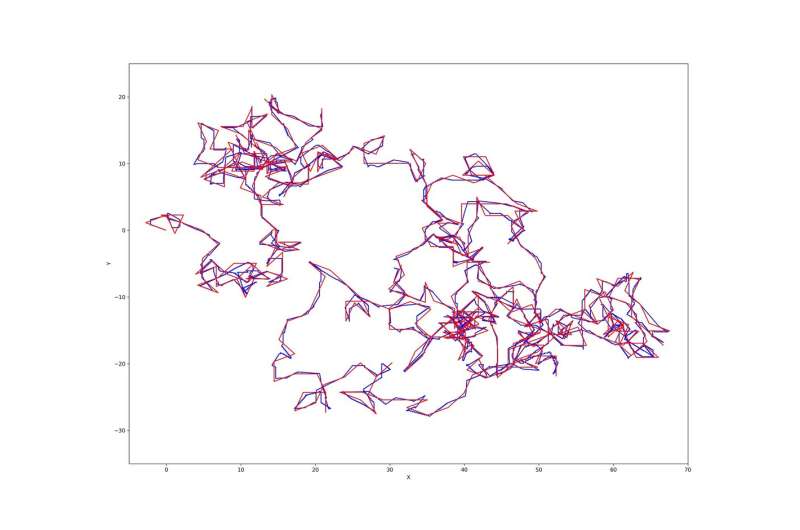

In their study, the researchers focused on a task in which a robotic agent needs to follow a predefined trajectory on an infinite 2-D plane for as long as possible, which they refer to as the "trajectory following task." In their version of this task, the agent does not receive any information via its sensors about where it should be located. If it strays too far from the trajectory, however, the agent "dies." In this context, evolution should allow the agent to adapt to the task and learn to follow the trajectory using open-loop control.
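The task described above can be sketched in a few lines of Python. This is a minimal illustration rather than the researchers' implementation: the function name `survival_time`, the distance threshold, the step limit, and the idea of a controller that is driven only by the time step are all assumptions made for the example. In the study the controller is an evolved neural network; here it is reduced to any function that returns a movement per step without ever seeing the agent's position or the target.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float]


def survival_time(
    controller: Callable[[int], Tuple[float, float]],
    trajectory: Callable[[int], Point],
    max_distance: float = 1.0,   # hypothetical "death" threshold
    max_steps: int = 1000,       # hypothetical cap on evaluation time
) -> int:
    """Count how many steps the agent stays within max_distance of the trajectory."""
    # Assumption: the agent starts exactly on the trajectory.
    x, y = trajectory(0)
    for t in range(max_steps):
        # Open-loop control: the controller gets no position or target feedback.
        dx, dy = controller(t)
        x, y = x + dx, y + dy
        tx, ty = trajectory(t + 1)
        if math.hypot(x - tx, y - ty) > max_distance:
            return t  # the agent "dies" once it is too far from the trajectory
    return max_steps
```

A fitness function for an evolutionary run could simply return this survival time, so that agents that follow the trajectory for longer are favored by selection.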

To achieve a linear growth in the complexity of the agent's evolved behavior while performing this task, the researchers added four key features to standard methods for evolving neural networks. Essentially, they froze the network's previously evolved structure, while also adding temporal scaffolding, a homogeneous transfer function for output nodes, and mutations that create new pathways to outputs.
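Of these four features, freezing is the simplest to illustrate. The sketch below assumes a hypothetical genome made of `Connection` records with a `frozen` flag, and a weight mutation that skips frozen connections. The data layout, the names `freeze_all` and `mutate_weights`, and the Gaussian perturbation are illustrative assumptions and are not taken from the paper; the other three features are not shown.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Connection:
    source: int
    target: int
    weight: float
    frozen: bool = False  # hypothetical flag marking previously evolved structure


def freeze_all(connections: List[Connection]) -> List[Connection]:
    """Return copies of the connections with every one marked as frozen."""
    return [Connection(c.source, c.target, c.weight, True) for c in connections]


def mutate_weights(
    connections: List[Connection],
    sigma: float = 0.1,                    # assumed mutation step size
    rng: Optional[random.Random] = None,
) -> List[Connection]:
    """Perturb only the non-frozen weights; frozen connections pass through unchanged."""
    rng = rng or random.Random()
    return [
        c if c.frozen
        else Connection(c.source, c.target, c.weight + rng.gauss(0.0, sigma), False)
        for c in connections
    ]
```

With this layout, older parts of the network keep their behavior while mutations act only on newly added structure, which is one way to keep the mutation operators' effect roughly constant as the network grows.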

While adding the new mutations and changing the transfer functions of outputs led to some improvement in performance, the researchers found that the most significant enhancement was associated with the use of scaffolding. This suggests that standard neural networks are not particularly good at producing behavior that varies over time in a way that is easily accessible to evolution.

In their paper, the researchers propose that neural networks augmented by scaffolding could be a viable way to evolve increasingly complex behaviors. In the future, the approach they presented could inform the development of new tools to evolve neural networks for robot control and other tasks.

"Overall, evolved complexity is up to two orders of magnitude over that achieved by standard methods in the experiments we reported, with the major limiting factor for further growth being the available run time," the researchers wrote in their paper. "Thus, the set of methods proposed here promises to be a useful addition to various current neuroevolution methods."

More information: Benjamin Inden et al. Evolving neural networks to follow trajectories of arbitrary complexity, Neural Networks (2019). DOI: 10.1016/j.neunet.2019.04.013

© 2019 Science X Network