The brain inspires a new type of artificial intelligence

Machine learning, introduced 70 years ago, is based on evidence of the dynamics of learning in the brain. Using the speed of modern computers and large datasets, deep learning algorithms have recently produced results comparable to those of human experts in various applied fields, but with characteristics that are far removed from current knowledge of learning in neuroscience.

Using advanced experiments on neuronal cultures and large-scale simulations, a group of scientists at Bar-Ilan University in Israel has demonstrated a new type of ultrafast artificial intelligence algorithm, based on the very slow dynamics of the brain, that outperforms the learning rates achieved to date by state-of-the-art learning algorithms.

In an article published today in the journal Scientific Reports, the researchers rebuild the bridge between neuroscience and advanced artificial intelligence algorithms, a bridge that has been left virtually unused for almost 70 years.

"The current scientific and technological viewpoint is that neurobiology and machine learning are two distinct disciplines that advanced independently," said the study's lead author, Prof. Ido Kanter, of Bar-Ilan University's Department of Physics and Gonda (Goldschmied) Multidisciplinary Brain Research Center. "The absence of expectedly reciprocal influence is puzzling."

"The number of neurons in a brain is less than the number of bits in a typical disc size of modern personal computers, and the computational speed of the brain is like the second hand on a clock, even slower than the first computer invented over 70 years ago," he continued. "In addition, the brain's learning rules are very complicated and remote from the principles of learning steps in current artificial intelligence algorithms," added Prof. Kanter, whose research team includes Herut Uzan, Shira Sardi, Amir Goldental and Roni Vardi.

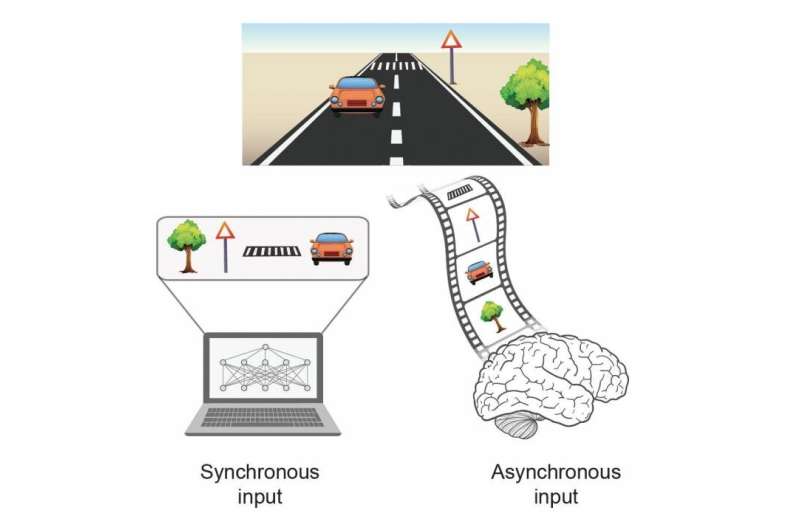

Brain dynamics do not follow a well-defined clock synchronized across all nerve cells, since the biological scheme has to cope with asynchronous inputs as physical reality unfolds. "When looking ahead one immediately observes a frame with multiple objects. For instance, while driving one observes cars, pedestrian crossings, and road signs, and can easily identify their temporal ordering and relative positions," said Prof. Kanter. "Biological hardware (learning rules) is designed to deal with asynchronous inputs and refine their relative information." In contrast, traditional artificial intelligence algorithms are based on synchronous inputs, so the relative timing of the different inputs that make up the same frame is typically ignored.
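The contrast between synchronous and asynchronous inputs can be illustrated with a small sketch. This is only a toy illustration, not the researchers' algorithm: the event format, the channel names (borrowed from the driving example) and the helper functions `synchronous_frame` and `relative_delays` are all assumptions made for the example. It shows two scenes that look identical once collapsed into a single synchronous frame, yet differ in the relative timing of their inputs.

```python
from typing import Dict, List, Tuple

# Hypothetical event format: (input label, arrival time in milliseconds).
Event = Tuple[str, float]


def synchronous_frame(events: List[Event], channels: List[str]) -> List[int]:
    """Collapse events into one frame: 1 if a channel fired, else 0.

    Arrival times are discarded, as in a frame-based (synchronous) input.
    """
    fired = {label for label, _ in events}
    return [1 if channel in fired else 0 for channel in channels]


def relative_delays(events: List[Event]) -> Dict[str, float]:
    """Keep each input's delay relative to the earliest event in the scene."""
    earliest = min(time for _, time in events)
    return {label: time - earliest for label, time in events}


# Illustrative data only: the same three objects, observed in a different order.
channels = ["car", "crossing", "sign"]
scene_a = [("car", 10.0), ("crossing", 12.0), ("sign", 15.0)]
scene_b = [("sign", 10.0), ("car", 13.0), ("crossing", 14.0)]

frame_a = synchronous_frame(scene_a, channels)
frame_b = synchronous_frame(scene_b, channels)
delays_a = relative_delays(scene_a)
delays_b = relative_delays(scene_b)

print(frame_a == frame_b)    # the synchronous frames cannot tell the scenes apart
print(delays_a == delays_b)  # the relative timings can
```

In this toy setting, any learning rule that sees only the frame receives the same input for both scenes, whereas a rule that has access to the delays could, in principle, distinguish them.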

The new study demonstrates that ultrafast learning rates are, surprisingly, identical for small and large networks. Hence, say the researchers, "the disadvantage of the complicated brain's learning scheme is actually an advantage." Another important finding is that learning can occur without explicit learning steps, through self-adaptation to asynchronous inputs. This type of learning-without-learning occurs in the dendrites, the several terminals of each neuron, as was recently observed experimentally. In addition, network dynamics under dendritic learning are governed by weak weights, which were previously deemed insignificant.

The idea of efficient deep learning algorithms based on the brain's very slow dynamics offers an opportunity to implement a new class of advanced artificial intelligence on fast computers. It calls for rebuilding the bridge from neurobiology to artificial intelligence and, as the research group concludes, "Insights of fundamental principles of our brain have to be once again at the center of future artificial intelligence."

More information: Herut Uzan et al. Biological learning curves outperform existing ones in artificial intelligence algorithms, Scientific Reports (2019). DOI: 10.1038/s41598-019-48016-4