This article has been reviewed according to Science X's editorial process and policies. Editors have marked it as fact-checked, a peer-reviewed publication, from a trusted source, and proofread.

Robotic hand with tactile fingertips achieves new dexterity feat

Improving the dexterity of robot hands could have significant implications for automating tasks such as handling goods for supermarkets or sorting through waste for recycling.

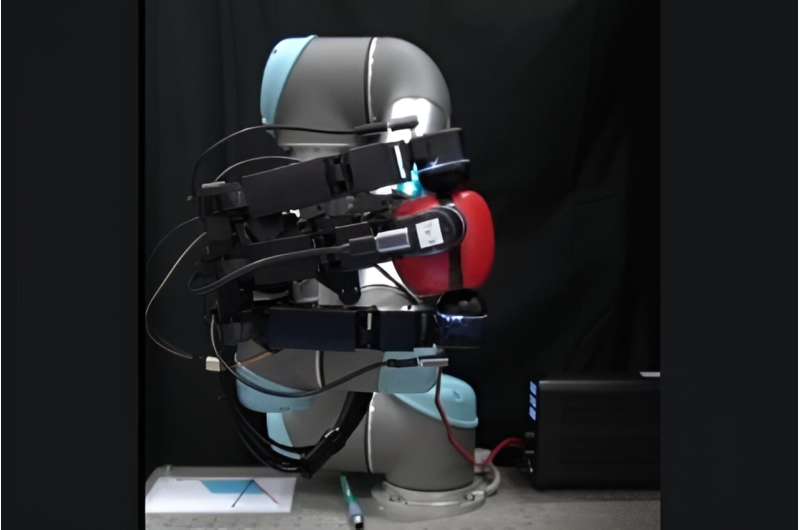

Led by Nathan Lepora, Professor of Robotics and AI, the team at the University of Bristol has created a four-fingered robotic hand with artificial tactile fingertips that can rotate objects such as balls and toys in any direction and orientation. It can even do this when the hand is upside down, something that has never been done before. The paper is posted to the arXiv preprint server.

In 2019, OpenAI became the first to show human-like feats of dexterity with a robot hand. However, despite making front-page news, OpenAI soon disbanded its 20-strong robotics team. OpenAI's setup used a cage holding 19 cameras and more than 6,000 CPUs to train huge neural networks that could control the hand, an operation that would have been very costly to run.

Professor Lepora and his colleagues wanted to see if similar results could be achieved using simpler and more cost-efficient methods.

In the past year, university teams at MIT, Berkeley, Columbia (New York) and Bristol have each shown complex feats of robot hand dexterity, ranging from picking up and passing rods to rotating children's toys in-hand, and all four have done so using simple set-ups and desktop computers.

As detailed in the recent Science Robotics article "The future lies in a pair of tactile hands," the key advance that made this possible was that the teams all built a sense of touch into their robot hands.

High-resolution tactile sensors became possible thanks to advances in smartphone cameras, which are now small enough to fit comfortably inside a robot fingertip.

"In Bristol, our artificial tactile fingertip uses a 3D-printed mesh of pin-like papillae on the underside of the skin, based on copying the internal structure of human skin," Professor Lepora explains.

"These papillae are made on advanced 3D-printers that can mix soft and hard materials to create complicated structures like those found in biology.

"The first time this worked on a robot hand upside-down was hugely exciting as no-one had done this before. Initially the robot would drop the object, but we found the right way to train the hand using tactile data and it suddenly worked even when the hand was being waved around on a robotic arm."

The next step for this technology is to go beyond pick-and-place or rotation tasks to more advanced feats of dexterity, such as manually assembling items like Lego.

More information: Max Yang et al., AnyRotate: Gravity-Invariant In-Hand Object Rotation with Sim-to-Real Touch, arXiv (2024). DOI: 10.48550/arxiv.2405.07391

Nathan F. Lepora, The future lies in a pair of tactile hands, Science Robotics (2024). DOI: 10.1126/scirobotics.adq1501

Project page on GitHub