March 5, 2020 report

In-sensor computing to speed up machine vision

By applying in-sensor computing of analog data, a team of researchers at Vienna University of Technology's Institute of Photonics has developed a way to speed up machine vision. In their paper published in the journal Nature, the group describes their design and how well it performed during testing. Yang Chai with Hong Kong Polytechnic University has published a News & Views piece in the same journal issue describing the work by the team.

With current technology, machine vision is carried out by a chain of separate devices. An image sensor responds to light, a second device converts the sensor's analog output to a digital signal, and a third processes the digital data, either locally or in the cloud. This arrangement works reasonably well for current applications, but it will not suit future ones because of the lag involved in reading out and processing huge amounts of image data. In this new effort, the researchers have proposed a new type of image sensor that can itself process analog data to a limited extent.

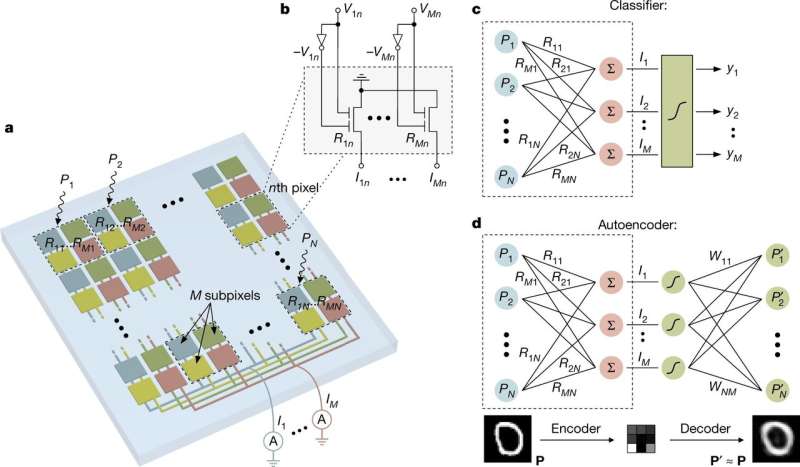

The image sensor envisaged by the team in Austria embeds trios of photodiodes on a chip. The sensitivity of each photodiode to light can be increased or decreased using an applied voltage, which allows each diode to be individually tuned, or weighted. In such a setup, the diodes act similarly to nerves in the human eye. As images are presented to the device, all of the diodes react based on their tuning, and together they serve as a network vision processor. When light arrives at the sensor, it is processed by adding the light intensity from each of the columns and rows that make up the sensor array. The array of diodes is trained for a task by tweaking the weight of each member individually based on a desired outcome. The initial learning stage takes a small amount of time, but once the network has been trained, processing occurs at a rate equal to the reaction time of the photodiodes.
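The weighting-and-adding idea can be illustrated with a few lines of Python. This is only a rough sketch, not the researchers' implementation: it assumes a 3x3 pixel array, assumes that the k-th diode of every trio feeds the k-th output, and uses made-up weight values (including negative ones) purely for illustration. The function name `sensor_outputs` is hypothetical.

```python
def sensor_outputs(image, weights):
    """Return one summed signal per output channel.

    image   -- flat list of light intensities, one per pixel
    weights -- weights[k][i] is the tuned sensitivity of diode k at pixel i
               (k = 0, 1, 2 for the three diodes of a trio)
    """
    # Each output is the sum of (sensitivity * light intensity) over all pixels.
    return [sum(w * p for w, p in zip(channel, image)) for channel in weights]


# Hypothetical 3x3 image, flattened row by row (1 = lit, 0 = dark).
image = [1, 0, 1,
         1, 0, 1,
         1, 1, 1]

# Hypothetical sensitivities, one row per output channel (assumed values).
weights = [
    [1, -1, 1, 1, -1, 1, 1, 1, 1],
    [1, 1, 1, -1, 1, -1, -1, 1, -1],
    [-1, 1, -1, 1, 1, 1, -1, 1, -1],
]

print(sensor_outputs(image, weights))  # prints [7, -1, -1]
```

In this toy example the first channel responds most strongly because its weights match the lit pixels, which is the sense in which a tuned array can pick out a pattern without first producing an image.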

The device envisioned by the researchers was not meant to produce images. Instead, it filters out unnecessary data and carries out some initial sorting. To test it, the researchers taught their device to sort three simplified letters. They also used it to do some very basic auto-encoding based on key features of a given image. They note that their design and device are still in the proof-of-concept stage, but say their findings so far are encouraging.
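A software analogy may help show what teaching such an array to sort three letters involves. The sketch below is an assumption-laden illustration, not the method reported by the team: the 3x3 letter patterns are invented stand-ins, and the softmax scoring and plain gradient-descent training loop are generic choices made for this example. All names (`LETTERS`, `train`, `classify`) are hypothetical.

```python
import math

# Invented 3x3 stand-ins for three simplified letters, flattened row by row.
LETTERS = {
    "n": [1, 1, 1,
          1, 0, 1,
          1, 0, 1],
    "v": [1, 0, 1,
          1, 0, 1,
          0, 1, 0],
    "z": [1, 1, 0,
          0, 1, 0,
          0, 1, 1],
}
NAMES = list(LETTERS)


def outputs(weights, image):
    # One weighted sum of pixel intensities per output channel.
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def softmax(values):
    top = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - top) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def train(epochs=500, rate=0.1):
    # Start with every sensitivity at zero and adjust each one individually.
    weights = [[0.0] * 9 for _ in NAMES]
    for _ in range(epochs):
        grads = [[0.0] * 9 for _ in NAMES]
        for label, name in enumerate(NAMES):
            image = LETTERS[name]
            probs = softmax(outputs(weights, image))
            for k in range(len(NAMES)):
                # Cross-entropy gradient: predicted probability minus target.
                error = probs[k] - (1.0 if k == label else 0.0)
                for i, pixel in enumerate(image):
                    grads[k][i] += error * pixel
        for k in range(len(NAMES)):
            for i in range(9):
                weights[k][i] -= rate * grads[k][i]
    return weights


def classify(weights, image):
    scores = outputs(weights, image)
    return NAMES[scores.index(max(scores))]


weights = train()
for name, image in LETTERS.items():
    print(name, "->", classify(weights, image))
```

Training is the slow part here, as in the article's description. Once the weights are fixed, classifying an image takes only one round of weighted sums.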

More information: Lukas Mennel et al. Ultrafast machine vision with 2D material neural network image sensors, Nature (2020). DOI: 10.1038/s41586-020-2038-x

© 2020 Science X Network