June 26, 2020 feature

A deep reinforcement learning framework to identify key players in complex networks

Network science is an academic field that aims to unveil the structure and dynamics behind networks, such as telecommunication, computer, biological and social networks. One of the fundamental problems that network scientists have been trying to solve in recent years entails identifying an optimal set of nodes that most influence a network's functionality, referred to as key players.

Identifying key players could greatly benefit many real-world applications, for instance, enhancing techniques for the immunization of networks, as well as aiding epidemic control, drug design and viral marketing. Due to its NP-hard nature, however, solving this problem using exact algorithms with polynomial time complexity has proved highly challenging.

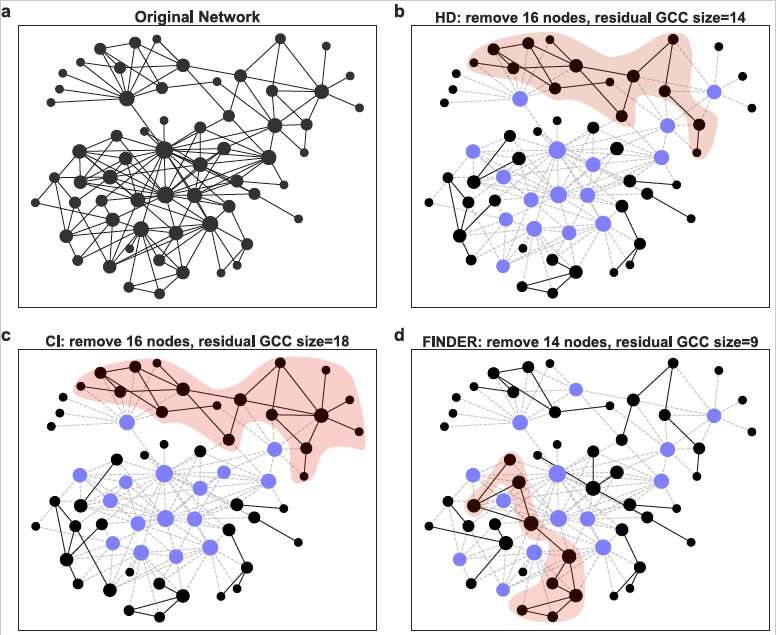

Researchers at National University of Defense Technology in China, University of California, Los Angeles (UCLA), and Harvard Medical School (HMS) have recently developed a deep reinforcement learning (DRL) framework, dubbed FINDER, that could identify key players in complex networks more efficiently. Their framework, presented in a paper published in Nature Machine Intelligence, was trained on a small set of synthetic networks generated by classical network models and then applied to real-world scenarios.

"This work was motivated by a fundamental question in network science: How can we find an optimal set of key players whose activation (or removal) would maximally enhance (or degrade) network functionality?" Yang-Yu Liu, one of the senior researchers who carried out the study, told TechXplore. "Many approximate and heuristic strategies have been proposed to deal with specific application scenarios, but we still lack a unified framework to solve this problem efficiently."

FINDER, which stands for FInding key players in Networks through DEep Reinforcement learning, builds on recently developed deep learning techniques for solving combinatorial optimization problems. The researchers trained FINDER on a large set of small synthetic networks generated by classical network models, guiding it using a reward function specific to the task it is trying to solve. This strategy guides FINDER in determining what it should do (i.e., what node it should pick) to accumulate the greatest reward over a period of time based on its current state (i.e., the current network structure).

"It might be straightforward to represent states and actions in traditional reinforcement learning tasks, such as in robotics, which is not the case for networks," Yizhou Sun, another senior researcher involved in the study, told TechXplore. "Another challenge we faced while working on this project was determining how to represent a network, as it has a discrete data structure and lies in an extremely high-dimensional space. To address this issue, we extended the current graph neural network to represent nodes (actions) and graphs (states), which is jointly learned with the reinforcement learning task."

In order to efficiently represent complex networks, the researchers collectively determined the best representation for individual network states and actions and the best strategy for identifying an optimal action when the network is in specific states. The resulting representations can guide FINDER in identifying key players in a network.

The new framework devised by Sun, Liu and their colleagues is highly flexible and can thus be applied to the analysis of a variety of real-world networks simply by changing its reward function. It is also highly effective, as it was found to outperform many previously developed strategies for identifying key players in networks both in terms of efficiency and speed. Remarkably, FINDER can easily be scaled up to analyze a wide range of networks containing thousands or even millions of nodes.

"Compared to existing techniques, FINDER achieves superior performances in terms of both effectiveness and efficiency in finding key players in complex networks," Liu said. "It represents a paradigm shift in solving challenging optimization problems on complex real-world networks. Requiring no domain-specific knowledge, but just the degree heterogeneity of real networks, FINDER achieves this goal by offline self-training on small synthetic graphs only once, and then generalizes surprisingly well across diverse domains of real-world networks with much larger sizes."

The new deep reinforcement framework has so far achieved highly promising results. In the future, it could be used to study social networks, power grids, the spread of infectious diseases and many other types of network.

The findings gathered by Liu, Sun and their colleagues highlight the promise of classical network models such as the Barabási–Albert model, from which they drew inspiration. While simple models may appear very basic, in fact, they often capture the primary feature of many real-world networks, namely the degree heterogeneity. This feature can be of huge value when trying to solve complex optimization problems related to intricate networks.

"My lab is now pursuing several research directions along this same line of research, including: (1) designing better graph representation learning architectures; (2) exploring how to transfer knowledge between different graphs and even graphs from different domains; (3) investigating other NP-hard problems on graphs and solving them from learning perspective," Sun said.

While Sun and her team at UCLA plan to work on new techniques for network science research, Liu and his team at HMS would like to start testing FINDER on real biological networks. More specifically, they would like to use the framework to identify key players in protein-protein interaction networks and gene regulatory networks that could play crucial roles in human health and disease.

More information: Changjun Fan et al. Finding key players in complex networks through deep reinforcement learning, Nature Machine Intelligence (2020). DOI: 10.1038/s42256-020-0177-2

Learning combinatorial optimization algorithms over graphs. papers.nips.cc/paper/7214-lear … gorithms-over-graphs

Reinforcement learning for solving the vehicle routing problem. papers.nips.cc/paper/8190-rein … icle-routing-problem

Neural combinatorial optimization with reinforcement learning. arXiv:1611.09940 [cs.AI]. arxiv.org/abs/1611.09940

Albert-László Barabási et al. Emergence of Scaling in Random Networks, Science (2002). DOI: 10.1126/science.286.5439.509

Combinatorial optimization with graph convolutional networks and guided tree search. papers.nips.cc/paper/7335-comb … d-guided-tree-search

Machine learning for combinatorial optimization: a methodological tour d'horizon. arXiv:1811.06128 [cs.LG]. arxiv.org/abs/1811.06128

James J. Q. Yu et al. Online Vehicle Routing With Neural Combinatorial Optimization and Deep Reinforcement Learning, IEEE Transactions on Intelligent Transportation Systems (2019). DOI: 10.1109/TITS.2019.2909109

© 2020 Science X Network