July 1, 2020 feature

A hyperdimensional computing system that performs all core computations in-memory

Hyperdimensional computing (HDC) is an emerging computing approach inspired by patterns of neural activity in the human brain. This type of computing can allow artificial intelligence systems to retain memories and process new information based on data or scenarios they have previously encountered.

Most HDC systems developed in the past only perform well on specific tasks, such as natural language processing (NLP) or time series problems. In a paper published in Nature Electronics, researchers at IBM Research-Zurich and ETH Zurich presented a new HDC system that performs all core computations in-memory and could be applied to a variety of tasks.

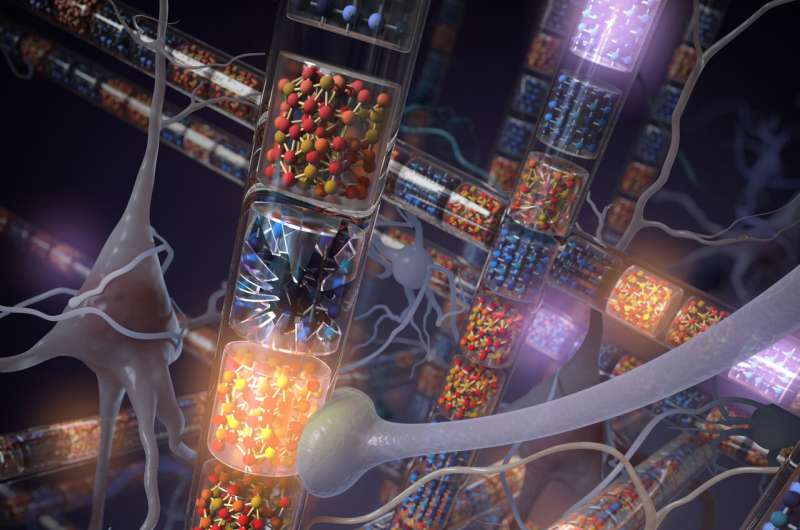

"Our work was initiated by the natural fit between the two concepts of in-memory computing and hyperdimensional computing," Abu Sebastian and Abbas Rahimi, the two lead researchers behind the study, told TechXplore. "At IBM Research- Zurich, we have been developing in-memory computing platforms based on phase-change memory (PCM), while at ETH Zurich, we have been exploring a brain-inspired computing paradigm called hyperdimensional computing."

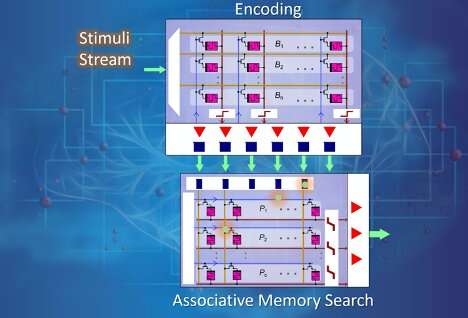

In their past work, the researchers observed that the primary operations involved in HDC, namely encoding and associative memory search, both involve manipulating and comparing large distributed patterns held in the system's memory. Because of this characteristic, HDC systems can be built efficiently using PCM crossbar arrays, in a way that exploits the advantages of analog in-memory computing.

"This tailor-made combination not only avoids the von Neumann bottleneck (aka memory wall), but also significantly improves energy efficiency as well as robustness against variability, noise, and failures," Sebastian and Rahimi explained. "Almost two years ago, this observation prompted us to initiate a joint research in this direction between ETH and IBM."

To model neural activity patterns, HDC systems use a rich algebra that defines a set of rules to build, bind and bundle different hypervectors. Hypervectors are holographic, 10,000-dimensional (pseudo)random vectors with independent and identically distributed components. Using these hypervectors, HDC enables the creation of powerful computing systems that can complete sophisticated cognitive tasks, such as object detection, language recognition, voice and video classification, time series analysis, text categorization and analytical reasoning.
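
The binding and bundling rules mentioned above can be illustrated with a minimal Python sketch. It is not the researchers' implementation: it assumes bipolar (+1/-1) hypervectors, element-wise multiplication for binding, a component-wise majority vote for bundling and a normalized dot product for similarity, which are common choices in the HDC literature. All function names are hypothetical.

```python
import random

D = 10_000  # dimensionality quoted in the article


def random_hv(rng):
    """Draw a pseudo-random bipolar hypervector with i.i.d. components."""
    return [rng.choice((-1, 1)) for _ in range(D)]


def bind(a, b):
    """Bind two hypervectors by element-wise multiplication (assumed choice)."""
    return [x * y for x, y in zip(a, b)]


def bundle(*hvs):
    """Bundle hypervectors by component-wise majority vote.

    With an odd number of inputs there are no ties; with an even number,
    ties fall to -1 in this sketch.
    """
    return [1 if sum(col) > 0 else -1 for col in zip(*hvs)]


def similarity(a, b):
    """Normalized dot product, in the range [-1, 1]."""
    return sum(x * y for x, y in zip(a, b)) / D


rng = random.Random(0)
a, b, c = (random_hv(rng) for _ in range(3))

sim_random = similarity(a, b)                  # close to 0: quasi-orthogonal
sim_bound = similarity(bind(a, b), a)          # close to 0: binding hides inputs
sim_bundled = similarity(bundle(a, b, c), a)   # clearly positive: bundling keeps them
recovered = bind(bind(a, b), b)                # multiplying by b again undoes the binding
```

In this sketch, two random hypervectors are almost orthogonal, a bound pair resembles neither of its inputs, and a bundle stays similar to each of its members. These are the properties that let HDC combine and later compare patterns.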

In their paper, Sebastian, Rahimi and their colleagues presented a complete in-memory HDC system that can tackle a variety of tasks. Their system has two key components: an HDC encoder and an associative memory.
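
To give a rough idea of what an HDC encoder does, the following Python sketch turns text into a hypervector using character trigrams, an approach often used for language recognition in the HDC literature. It is an illustrative assumption rather than the encoder described in the paper: the item memory, the cyclic-shift permutation, the trigram size and the sample sentences are all hypothetical choices.

```python
import random

D = 10_000
rng = random.Random(42)
item_memory = {}  # one random hypervector per input symbol, created on demand


def item_hv(symbol):
    """Look up (or create) the bipolar hypervector assigned to a symbol."""
    if symbol not in item_memory:
        item_memory[symbol] = [rng.choice((-1, 1)) for _ in range(D)]
    return item_memory[symbol]


def rotate(hv, k):
    """Cyclically shift a hypervector by k positions to mark symbol order."""
    k %= D
    return hv[-k:] + hv[:-k] if k else hv


def encode_ngram(ngram):
    """Bind the position-shifted symbol hypervectors of one n-gram."""
    n = len(ngram)
    result = [1] * D
    for pos, symbol in enumerate(ngram):
        shifted = rotate(item_hv(symbol), n - 1 - pos)
        result = [r * s for r, s in zip(result, shifted)]
    return result


def encode_text(text, n=3):
    """Bundle all n-gram hypervectors of a text and binarize by sign."""
    sums = [0] * D
    for i in range(len(text) - n + 1):
        for j, value in enumerate(encode_ngram(text[i:i + n])):
            sums[j] += value
    return [1 if s >= 0 else -1 for s in sums]


def similarity(a, b):
    return sum(x * y for x, y in zip(a, b)) / D


english = encode_text("the quick brown fox jumps over the lazy dog")
german = encode_text("franz jagt im komplett verwahrlosten taxi quer durch bayern")
query = encode_text("the dog jumps")

sim_en = similarity(query, english)
sim_de = similarity(query, german)
```

Because the query shares several trigrams with the English sentence and none with the German one, `sim_en` comes out clearly larger than `sim_de`, even though both prototypes were built from a single sentence.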

"Our system performs core computations in-memory with logical and dot product operations on memristive devices," Sebastian and Rahimi said. "Due to the inherent robustness of HDC, it was possible to approximate the mathematical operations associated with HDC to make it suitable for hardware implementation and to use analog in-memory computing without compromising the accuracy."

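The robustness the researchers describe can be seen in a small simulation of an associative memory search. The Python sketch below is a software illustration only, not a model of the PCM hardware: it assumes bipolar hypervectors, stores one hypothetical prototype per class, corrupts a query by flipping 30% of its components and picks the class with the largest dot product.

```python
import random

D = 10_000


def random_hv(rng):
    return [rng.choice((-1, 1)) for _ in range(D)]


def dot(a, b):
    """Dot product, the similarity measure used for the memory search."""
    return sum(x * y for x, y in zip(a, b))


def add_noise(hv, flip_fraction, rng):
    """Flip the sign of a randomly chosen fraction of the components."""
    flipped = set(rng.sample(range(D), int(flip_fraction * D)))
    return [-x if i in flipped else x for i, x in enumerate(hv)]


def classify(query, prototypes):
    """Return the label whose prototype has the largest dot product with the query."""
    return max(prototypes, key=lambda label: dot(query, prototypes[label]))


rng = random.Random(1)
prototypes = {label: random_hv(rng) for label in ("class_a", "class_b", "class_c")}

# Corrupt 30% of the components of one stored prototype and search for it.
query = add_noise(prototypes["class_b"], 0.30, rng)
predicted = classify(query, prototypes)
score = dot(query, prototypes["class_b"])
```

Even with 3,000 of the 10,000 components flipped, the dot product with the correct prototype is 4,000, while the dot products with unrelated random prototypes stay close to zero, so the search still returns `class_b`. This margin is what allows approximate, noisy operations to be used without a large loss in accuracy.
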
Most in-memory HDC architectures developed in the past are only applicable to a limited set of tasks, such as single language recognition or binary classification. In addition, these systems were primarily evaluated in simulations, using compact models based on small prototypes with only a few resistive devices.

In contrast, the system developed by Sebastian, Rahimi and their colleagues uses 760,000 PCM devices. It is thus arguably one of the largest and most significant experimental demonstrations of in-memory HDC presented so far.

The prototype is among the first HDC systems that can be programmed to support different hypervector representations and dimensionalities, as well as different types of input symbols and output classes. This makes it suitable for multiple applications, ranging from NLP to news classification and bio-signal processing.

"Our work truly demonstrates the potential benefits of analog in-memory computing at scale by executing a wide range of classification tasks on the noisy hardware substrate while achieving comparable accuracies to precise software implementations," Sebastian and Rahimi said. "Such robust analog in-memory computing is accomplished by providing a novel look at data representations, associated operations with graceful approximations, and materials and substrates that naturally enable them."

The researchers evaluated their system in a series of experiments, testing its performance on three tasks commonly tackled by AI techniques: language classification, news classification and hand gesture recognition based on electromyography signals. In all of these tasks, their HDC system achieved a near-optimal trade-off between the complexity of a task and the classification accuracy. The experiments ran on 760,000 phase-change memory devices performing analog in-memory computing, and the system achieved accuracies similar to those of popular software techniques.

"We experimentally demonstrated that an HDC platform using PCM-based in-memory computing can achieve over 600% energy savings compared to an optimized digital system based on 65-nm CMOS technology," Sebastian and Rahimi said.

In the future, the HDC system introduced in this study could enable new technology with advanced memory capabilities that can handle many different classification tasks. The system could soon be implemented and tested in a variety of real-world settings, which would allow the researchers to further evaluate its performance.

"In our HDC architecture, the encoding of information and memory storage are separate processes by construction," Sebastian and Rahimi said. "This key disentanglement is recently appreciated in modern deep neural networks to rescue them from catastrophic forgetting and to enable few-shot learning as well as retaining for a lifetime. Our architecture and representational system will play a central role for the next generation of AI to deliver systems that can learn fast, retain information throughout their lifetime and do this efficiently even with the right materials and substrates."

More information: Geethan Karunaratne et al. In-memory hyperdimensional computing, Nature Electronics (2020). DOI: 10.1038/s41928-020-0410-3

© 2020 Science X Network