Optimizing neural networks on a brain-inspired computer

Many computational properties are maximized when the dynamics of a network are at a "critical point," a state where systems can quickly change their overall characteristics in fundamental ways, transitioning, for example, between order and chaos or between stability and instability. The critical state is therefore widely assumed to be optimal for any computation in recurrent neural networks, which are used in many AI applications.

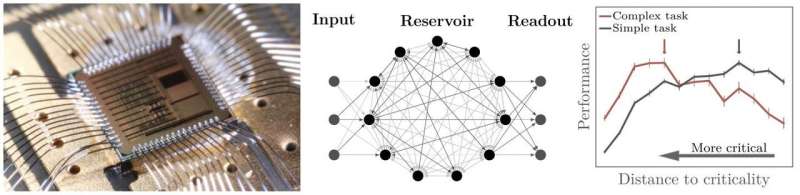

Researchers from the HBP partner Heidelberg University and the Max Planck Institute for Dynamics and Self-Organization (MPIDS) challenged this assumption by testing the performance of a spiking recurrent neural network on a set of tasks of varying complexity, both at criticality and away from it. They instantiated the network on a prototype of the analog neuromorphic BrainScaleS-2 system. BrainScaleS is a state-of-the-art brain-inspired computing system with synaptic plasticity implemented directly on the chip. It is one of two neuromorphic systems currently under development within the European Human Brain Project.

First, the researchers showed that the distance to criticality can be easily adjusted on the chip by changing the input strength, and then demonstrated a clear relation between criticality and task performance. The assumption that criticality is beneficial for every task was not confirmed: whereas the information-theoretic measures all showed that network capacity was maximal at criticality, only the complex, memory-intensive tasks profited from it, while simple tasks actually suffered. The study thus provides a more precise understanding of how the collective network state should be tuned to different task requirements for optimal performance.

Mechanistically, the optimal working point for each task can be set very easily under homeostatic plasticity by adapting the mean input strength. The theory behind this mechanism was developed very recently at the Max Planck Institute. "Putting it to work on neuromorphic hardware shows that these plasticity rules are very capable in tuning network dynamics to varying distances from criticality," says senior author Viola Priesemann, group leader at MPIDS. Tasks of varying complexity can thereby be solved optimally within that range of working points.
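The link between input strength and distance to criticality can be illustrated with a toy mean-field model. The sketch below is not the BrainScaleS-2 implementation or the authors' code. It assumes a driven branching process in which each unit of activity triggers on average `m` units in the next step (the branching ratio, with `m = 1` marking the critical point) and an external input `h` is added every step, so the stationary rate is `h / (1 - m)`. If homeostatic plasticity holds the rate at a fixed target, a stronger input forces a smaller `m`, i.e. a larger distance from criticality. All function and parameter names are hypothetical.

```python
def branching_ratio_under_homeostasis(input_rate, target_rate):
    """Branching ratio m at which a driven branching process with
    external input `input_rate` settles at `target_rate`.

    Follows from the mean-field stationary rate r = h / (1 - m).
    Toy model only; names and parameters are illustrative assumptions.
    """
    if not 0 < input_rate <= target_rate:
        raise ValueError("need 0 < input_rate <= target_rate")
    return 1.0 - input_rate / target_rate


def stationary_rate(m, input_rate, steps=5000):
    """Iterate the mean-field update r <- m * r + h, starting from r = 0."""
    rate = 0.0
    for _ in range(steps):
        rate = m * rate + input_rate
    return rate


if __name__ == "__main__":
    target = 10.0  # hypothetical homeostatic target rate
    for h in (0.1, 1.0, 5.0):
        m = branching_ratio_under_homeostasis(h, target)
        # Weak input -> m close to 1 (near critical); strong input -> m well below 1.
        print(f"input={h:4.1f}  m={m:.3f}  rate={stationary_rate(m, h):.3f}")
```

In this picture, the mean input strength acts as a single control knob: lowering it moves the network toward the critical point, and raising it moves the network away.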

The finding may also explain why biological neural networks do not necessarily operate at criticality, but in the dynamically rich vicinity of a critical point, where they can tune their computational properties to task requirements. Furthermore, it establishes neuromorphic hardware as a fast and scalable avenue for exploring the impact of biological plasticity rules on neural computation and network dynamics.

"As a next step, we now study and characterize the impact of the spiking network's working point on classifying artificial and real-world spoken words," says first author Benjamin Cramer of Heidelberg University.

More information: Benjamin Cramer et al., Control of criticality and computation in spiking neuromorphic networks with plasticity, Nature Communications (2020). DOI: 10.1038/s41467-020-16548-3