July 13, 2020 feature

Using a quantum-like model to enable perception in robots with limited sensing capabilities

Over the past few years, researchers have been trying to apply quantum physics theory to a variety of fields, including robotics, biology and cognitive science. Computational techniques that draw inspiration from quantum systems, also known as quantum-like (QL) models, could potentially achieve better performance and more sophisticated capabilities than more conventional approaches.

Researchers at the University of Genoa, in Italy, have recently investigated the feasibility of using a QL approach to enhance a robot's sensing capabilities. In their paper, pre-published on arXiv, they present the results of a case study in which they tested a QL perception model on a robot with limited sensing capabilities within a simulated environment.

"The idea for this study came to me after reading an article written in 1993 by Anton Amann, ('The Gestalt problem in quantum theory') in which he compared the problem of Gestalt perception with the attribution of molecular shape in quantum physics," Davide Lanza, one of the researchers who carried out the study, told TechXplore. "I was amazed by this parallel between cognition and quantum phenomena, and I discovered then the flourishing field of quantum cognition studies."

Inspired by the ideas presented by Amann, Lanza reached out to his supervisor Fulvio Mastrogiovanni and asked whether he could investigate the use of quantum cognition modeling to enhance robotic perception as part of his master's thesis. Once Mastrogiovanni approved the idea, Lanza started defining a preliminary model to test the feasibility of using a QL approach in robotics, in collaboration with Paolo Solinas, a physics professor at the University of Genoa who specializes in quantum computing.

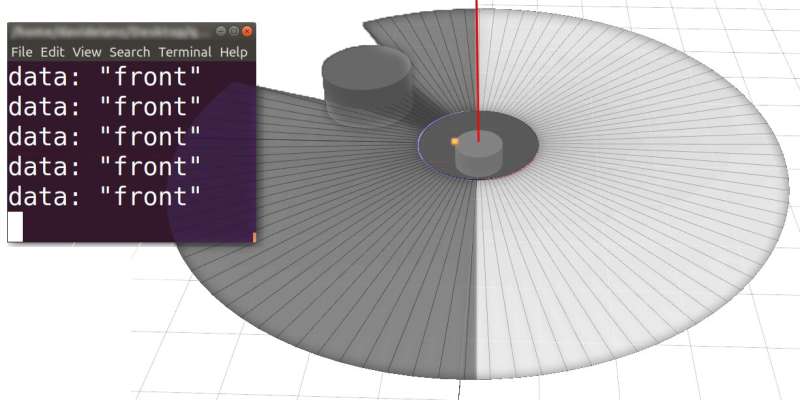

As an initial step in their research, Lanza and Solinas defined a simplified case study where a robot should be able to detect whether an object is placed in front of it or behind its back. Their goal was to investigate how a QL model would deal with uncertainty and perception disambiguation in this simple situation, which involved a choice between two options (i.e., front or back), before applying it to more complex scenarios.

"We stored the information collected by the robot's sensor in a single qubit," Lanza explained. "The qubit was allowed to have the two 'front' and 'back' states in a coherent superposition, enabling uncertainty modeling for mixed 'front-back' situations. Subsequently, when the qubit is measured, it returns a reading with a probability that is related to this superposition."

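To make the single-qubit idea concrete, here is a minimal NumPy sketch of how a "front/back" superposition can yield probabilistic readings. It is an illustration only, not the authors' model: the function names and the mapping from a bearing angle `theta` to amplitudes (`cos(theta/2)` for "front", `sin(theta/2)` for "back") are assumptions chosen for simplicity.

```python
import numpy as np


def encode_bearing(theta):
    """Map a bearing angle (radians) to a single-qubit state.

    Hypothetical encoding, not taken from the paper:
    theta = 0 is directly in front, theta = pi is directly behind.
    Returns the amplitudes of the 'front' and 'back' basis states.
    """
    return np.array([np.cos(theta / 2.0), np.sin(theta / 2.0)])


def outcome_probabilities(state):
    """Born rule: each outcome's probability is its squared amplitude."""
    probs = np.abs(state) ** 2
    return probs / probs.sum()  # renormalize against rounding error


def measure(state, shots=1024, seed=0):
    """Simulate repeated measurements and count 'front'/'back' readings."""
    rng = np.random.default_rng(seed)
    samples = rng.choice(2, size=shots, p=outcome_probabilities(state))
    back = int(samples.sum())
    return {"front": shots - back, "back": back}


if __name__ == "__main__":
    # An object clearly in front, one to the side, and one clearly behind.
    for theta in (0.0, np.pi / 2, np.pi):
        state = encode_bearing(theta)
        print(round(theta, 2), outcome_probabilities(state), measure(state))
```

Under this assumed encoding, an object directly to the side (`theta = pi/2`) gives equal probabilities for the two readings, which is the kind of mixed "front-back" situation Lanza describes.
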
When the researchers tested their QL perception model in a simulation of this case study, they found that it performed comparably to non-quantum techniques. However, the model only performed well when it was run on the QASM simulator, a tool that emulates the execution of quantum circuits on a classical computing device.

Actually implementing the model on the IBM Quantum Experience (IBMQ) platform, on the other hand, resulted in a number of errors, particularly in trials where the object was in an ambiguous position (i.e., when it was not so clear whether it was in front or at the back of the robot). Lanza, Solinas and Mastrogiovanni thus argue that QL perception models could be tested more effectively in simulations, as realizations on quantum backends can lead to a number of errors in unbalanced situations.

"Using a qubit is, in my opinion, a more compact and elegant solution for perception and cognition modeling," Lanza said. "Indeed, a qubit inherently provides interesting modeling features typical of quantum systems, with no need of further modules to deal with probabilistic outcomes."

The recent paper published on arXiv is merely the first part of the project carried out by Lanza, Solinas and Mastrogiovanni. The researchers are now exploring the possibility of applying the same QL model to more complex scenarios, while also testing it in simulations involving robots with multiple integrated sensors.

The findings gathered so far highlight the potential of introducing models based on quantum theory in robotics research. In the future, these findings could inspire other research teams to apply QL models of human cognition to robotics problems and evaluate their performance.

"After this preliminary study, we started working on multisensorial integration, developing a model for multiple sensors able to interpolate the data and fully exploit quantum features," Lanza said.

More information: A preliminary study for a quantum-like robot perception model. arXiv:2006.02771 [cs.RO]. arxiv.org/abs/2006.02771

Multi-sensory integration in a quantum-like robot perception model. arXiv:2006.16404 [cs.RO]. arxiv.org/abs/2006.16404

© 2020 Science X Network