December 10, 2020 report

Deep reinforcement-learning architecture combines pre-learned skills to create new sets of skills on the fly

A team of researchers from the University of Edinburgh and Zhejiang University has developed a way to combine deep neural networks (DNNs) into a single system that can learn skills none of its component networks was trained for. The group describes the architecture and its performance in the journal Science Robotics.

Deep neural networks learn functions by training repeatedly on many examples. To date, they have been used in a wide variety of applications, such as recognizing faces in a crowd or deciding whether a loan applicant is credit-worthy. In this new effort, the researchers combined several DNNs, each trained for a different task, to create a system with the benefits of all of its constituent DNNs. They report that the resulting system was more than the sum of its parts: it was able to learn new functions that none of the DNNs could perform on its own. The researchers call it a multi-expert learning architecture (MELA).

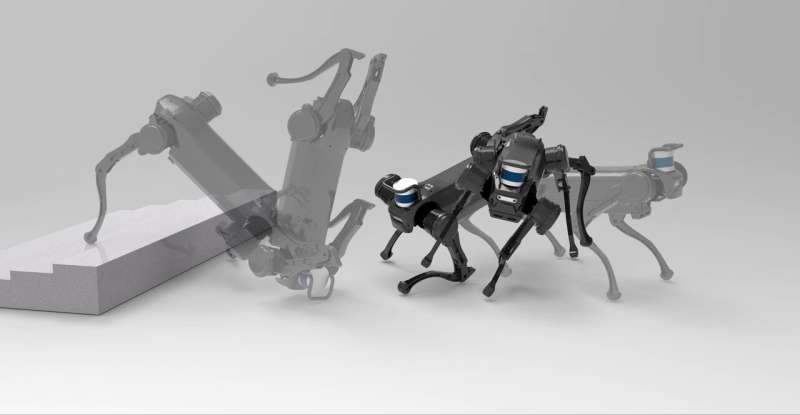

More specifically, the work involved training several DNNs, each for a different function. One learned to make a robot trot, for example; another learned to navigate around obstacles. All of the DNNs were then connected to a gating neural network that controlled a robot moving around its environment and learned over time to call on a particular DNN whenever a situation required that DNN's skill. The resulting system was able to carry out the skills of all of its constituent DNNs.

But that was not the end of the exercise. As the MELA learned more about its constituent parts and their abilities, it learned through trial and error to use them together in ways that it had not been taught. It learned, for example, how to combine getting up after a fall with coping with a slippery floor, and what to do if one of its motors failed. The researchers suggest their work marks a milestone in robotics research, offering a paradigm in which humans do not have to intervene when a robot encounters problems it has not experienced before.
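
For readers who want a concrete picture of the gating idea, the following is a minimal sketch in Python and not the authors' implementation. It assumes a generic mixture-of-experts arrangement in which a gate scores each expert for the current observation and the robot's action is the weighted blend of the experts' proposed actions; the published MELA may combine its experts differently. The names `Expert`, `Gate` and `blended_action` are hypothetical, and single linear layers with random weights stand in for trained networks.

```python
import math
import random


def softmax(scores):
    """Turn raw gate scores into non-negative weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear(weights, bias, x):
    """Apply one linear layer; `weights` is a list of rows, `x` a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


class Expert:
    """Hypothetical stand-in for one pre-trained skill network."""

    def __init__(self, rng, obs_dim, act_dim):
        # Random weights here; a real expert would be trained on one skill.
        self.w = [[rng.uniform(-1, 1) for _ in range(obs_dim)]
                  for _ in range(act_dim)]
        self.b = [0.0] * act_dim

    def act(self, obs):
        # tanh keeps every proposed motor command inside [-1, 1].
        return [math.tanh(v) for v in linear(self.w, self.b, obs)]


class Gate:
    """Hypothetical gating network: one score per expert, then softmax."""

    def __init__(self, rng, obs_dim, n_experts):
        self.w = [[rng.uniform(-1, 1) for _ in range(obs_dim)]
                  for _ in range(n_experts)]
        self.b = [0.0] * n_experts

    def weights(self, obs):
        return softmax(linear(self.w, self.b, obs))


def blended_action(gate, experts, obs):
    """Blend the experts' actions using the gate's weights (an assumption)."""
    g = gate.weights(obs)
    proposals = [expert.act(obs) for expert in experts]
    act_dim = len(proposals[0])
    action = [sum(gi * p[j] for gi, p in zip(g, proposals))
              for j in range(act_dim)]
    return action, g


# Usage: three experts, a 4-value observation, a 2-value action.
rng = random.Random(0)
experts = [Expert(rng, obs_dim=4, act_dim=2) for _ in range(3)]
gate = Gate(rng, obs_dim=4, n_experts=3)
action, gate_weights = blended_action(gate, experts, [0.1, -0.2, 0.3, 0.0])
```

Because the gate's weights are non-negative and sum to 1, the blended action always lies between the experts' individual proposals. In a trained system of this kind, it is the gate's weights that would shift as the situation changes, for instance leaning on a recovery skill after a fall.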

More information: Chuanyu Yang et al. Multi-expert learning of adaptive legged locomotion, Science Robotics (2020). DOI: 10.1126/scirobotics.abb2174

© 2020 Science X Network