'Bleep-bloop-bleep! Say "cheese," human'

Good portrait photography is as much art as it is science. There are technical details like composition and lighting, but there's also a matter of connecting emotionally with the photo's subjects. Can you teach that to a robot?

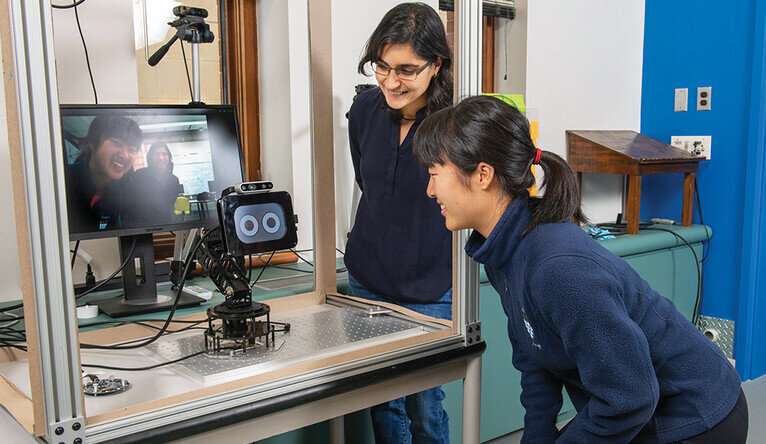

Marynel Vazquez wants to find out. She and her research team have built a robot designed to negotiate the quick but very complex interactions between photographer and subject—that is, putting someone at ease and drawing out a genuine smile, all in a matter of seconds. Built with a stylish retro-futuristic look, including 'eyes' on a touch screen programmed to interact with people, the robot photographer—they call it Shutter—is designed to catch the attention of passers-by. Vazquez has a few places she'd like to station it, all of which have good foot traffic. One is in the John Klingenstein Lab at the Center for Engineering Innovation & Design. Another is the corridor between Becton and Dunham Labs, a spot teeming with students and faculty as they pass to classes.

Most work in robot photography has homed in on the technical side of things—focus, lighting, placement of subject. But as professional photographers will tell you, that's only half the job. When people in a photo look glum or expressionless, all the compositional details won't save it. Vazquez and her team are focusing on the special ability of good photographers to spark that instant of joy and capture it in a photo.

"We're looking at what the robot photographer can do to get more positive reactions from people," said Vazquez, assistant professor of computer science. "Now that we have a social agent that can engage people and change what they're doing, we can ask, 'What opportunities does that open for taking photos?'"

In talking to human photographers, a common theme that came up was that laughter is a great way to make people comfortable in front of the camera.

"That made us interested in how to make the robot humorous," she said. "We've been trying different kinds of humor that the robot photographer can use. We're not really going after what the best joke is, but how we can use this humor to elicit smiles and use that to take better pictures."

Humor is, of course, subjective, and one person's comedy gold could leave another audience cold. To that end, Tim Adamson, a Ph.D. student in Vazquez's lab, programmed Shutter with a wide range of humorous material. It includes a GIF of a dog sticking its head out of a car window at high speed, audio samples of children laughing and goats bleating, and a meme of a man making a face at the camera with the quote "If Monday had a face… This would be it." And if all else fails, there's the classic "Say cheese!"

The big picture

Photography struck Vazquez as an ideal setting for studying human-machine interactions in general, which is part of the overall mission of her laboratory.

"In today's world, you can imagine that robots will be out there—some already are, with Roombas and robots in some factories and warehouses," said Vazquez. "As a community, we've started to realize that it's important to study human-robot interaction in public environments. Our effort here at Yale with this robot is a first step in getting out of the lab to study more complex interactions with social robots."

A lot of research in the field has dealt with one-human-to-one-robot interactions, but Vazquez is also interested in group settings, a particularly complex human environment. More specifically, she and her team want to help robots understand social contexts and get better at recognizing emotional statements and at decision-making, all of which helps them act autonomously.

Vazquez's lab has taken on a number of projects, from working with Disney's research team while she was a Ph.D. student at Carnegie Mellon University to her recent work with students on remotely controlled robots for homebound children during the coronavirus lockdown. At the center of all this research is a deep dive into how humans work with robots. Part of her lab has a space sectioned off by large LEGO-like blocks, forcing humans in the room to figure out how to negotiate the space they're sharing with the lab's robot population. She can then compare those interactions to the human-to-human interactions in the same space.

The initial results of the research team's work with Shutter were detailed in a paper that appeared in March at the 2020 ACM/IEEE International Conference on Human-Robot Interaction. In a more recent paper, they focused specifically on how people perceive Shutter's robot gaze. The perception of how a robot is "paying attention" is a critical part of human-robot interaction. When the subject of inventory-taking robots that roam the aisles of a particular grocery store chain comes up in conversation, Vazquez sighs. On one hand, she said, it's great that the company decided to outfit its robots with eyes, which go a long way to humanize them. Unfortunately, though, the eyes are the googly kind that don't correlate to anything in their sightlines.

"Gaze is something that people are naturally attracted to, and our robot has eyes that help convey its attention to users," she said.

One example of this showed up in their research for Shutter. "We had two people approach the robot photographer, and it looked at only one of the people, so the other person moved away, even though in reality Shutter could have taken a picture of both of them." The subtle move of the robot's eyes immediately shifted the situation to make one person a participant and the other a bystander.

Unexpected encounters like that help make the research exciting, she said, and the more sophisticated Shutter becomes, the more of them the team is likely to see. Ideally, Vazquez said, Shutter could be located somewhere on campus where students pass by frequently but far enough from classrooms to allow for lively human-robot encounters. She also welcomes the possibility that some passers-by will act out in ways that they wouldn't with a human photographer.

"You might wonder what crazy things people might do when it's out there," she said, with a laugh. "My hope is that if crazy things happen, it's an opportunity for us to study them."