October 27, 2021 feature

New memcapacitor devices for neuromorphic computing applications

To train and implement artificial neural networks, engineers require advanced devices capable of performing data-intensive computations. In recent years, research teams worldwide have been trying to create such devices, using different approaches and designs.

One possible way to create these devices is to realize specialized hardware onto which neural networks can be mapped directly. This could entail, for instance, the use of arrays of memristive devices, which perform many computations in parallel.
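In the idealized textbook picture, the parallel computation performed by such an array is a vector-matrix multiplication. Input voltages drive the rows, each device's conductance acts as a stored weight, and each column current is the sum of the voltage-times-conductance products along that column. The Python sketch below assumes ideal linear devices with no wire resistance or sneak-path currents; the function name `crossbar_currents` and the units are illustrative choices, not taken from the paper.

```python
from typing import List


def crossbar_currents(voltages: List[float], conductances: List[List[float]]) -> List[float]:
    """Column currents of an idealized resistive crossbar (illustrative model).

    voltages[i] is the read voltage (V) applied to row i, and
    conductances[i][j] is the conductance (microsiemens) of the device
    joining row i to column j. Returned currents are in microamperes.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one row of conductances per input voltage")
    n_cols = len(conductances[0])
    if any(len(row) != n_cols for row in conductances):
        raise ValueError("all conductance rows must have the same length")
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law gives each device's current; Kirchhoff's current law
            # adds the contributions of every device sharing column j.
            currents[j] += conductances[i][j] * v
    return currents


# Two inputs, two outputs: each column current is one dot product.
print(crossbar_currents([0.5, 1.0], [[1.0, 2.0], [3.0, 4.0]]))  # [3.5, 5.0]
```

All columns are computed in a single read step in hardware; the nested loop here only emulates that parallelism sequentially.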

Researchers at the Max Planck Institute of Microstructure Physics and the startup SEMRON GmbH in Germany have recently designed new energy-efficient memcapacitive devices (i.e., capacitors with a memory) that could be used to implement machine-learning algorithms. These devices, presented in a paper published in Nature Electronics, work by exploiting a principle known as charge shielding.

"We noticed that besides conventional digital approaches for running neural networks, there were mostly memristive approaches and only very few memcapacitive proposals," Kai-Uwe Demasius, one of the researchers who carried out the study, told TechXplore. "In addition, we noticed that all commercially available AI chips are only digital/mixed-signal based and there are few chips with resistive memory devices. Therefore, we started to investigate an alternative approach based on a capacitive memory device."

While reviewing previous studies, Demasius and his colleagues observed that all existing memcapacitive devices were difficult to scale up and exhibited a poor dynamic range. They thus set out to develop devices that are more efficient and easier to scale up. The new memcapacitive device they created draws inspiration from synapses and neurotransmitters in the brain.

"Memcapacitor devices are inherently many times more energy efficient than memristive devices, because they are electric field based instead of current based and the signal-to-noise ratio is better for the former," Demasius said. "Our memcapacitor device is based on charge screening, which enables much better scalability and higher dynamic range in comparison to prior trials to realize memcapacitive devices."

The device created by Demasius and his colleagues controls the electric field coupling between a top gate electrode and a bottom read-out electrode via another layer, called the shielding layer. This shielding layer is in turn adjusted by an analog memory, which can store the different weight values of artificial neural networks, similarly to how neurotransmitters in the brain store and convey information.
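The device described in the paragraph above can be thought of as a capacitive multiply-accumulate cell: a voltage on the top gate induces charge on the read-out electrode in proportion to the coupling capacitance, which the shielding layer (set by the analog memory) adjusts. The sketch below is a deliberately simplified illustration, not the device physics reported in the paper. It assumes a hypothetical linear relation between a stored shielding fraction and the effective coupling capacitance, and the names `coupling_capacitance`, `readout_charges` and `c_max_ff` are invented for this example.

```python
from typing import List


def coupling_capacitance(c_max_ff: float, shielding: float) -> float:
    """Effective gate-to-read-out capacitance (fF) under a HYPOTHETICAL linear model.

    `shielding` is the fraction of the field coupling blocked by the shielding
    layer: 0.0 means fully transparent, 1.0 means fully screened.
    """
    if not 0.0 <= shielding <= 1.0:
        raise ValueError("shielding must lie in [0, 1]")
    return c_max_ff * (1.0 - shielding)


def readout_charges(gate_voltages: List[float],
                    shielding_states: List[List[float]],
                    c_max_ff: float) -> List[float]:
    """Charge (fC) induced on each read-out electrode: Q_j = sum_i C_ij * V_i.

    shielding_states[i][j] stands in for the stored analog weight of the
    device at gate row i and read-out column j (hypothetical encoding).
    """
    if len(shielding_states) != len(gate_voltages):
        raise ValueError("need one row of shielding states per gate voltage")
    n_cols = len(shielding_states[0])
    if any(len(row) != n_cols for row in shielding_states):
        raise ValueError("all shielding rows must have the same length")
    charges = [0.0] * n_cols
    for i, v in enumerate(gate_voltages):
        for j in range(n_cols):
            # Q = C * V for each device (fF * V = fC); charges on a shared
            # read-out electrode add up.
            charges[j] += coupling_capacitance(c_max_ff, shielding_states[i][j]) * v
    return charges


states = [[0.0, 0.5], [0.75, 1.0]]  # hypothetical stored weights
print(readout_charges([1.0, 2.0], states, c_max_ff=2.0))  # [3.0, 1.0]
```

Because the output is a quantity of charge set by field coupling rather than a continuous current, this toy picture is in line with Demasius's point that memcapacitive devices are field based instead of current based. Real devices add nonlinearity, parasitic capacitance and noise, none of which this sketch models.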

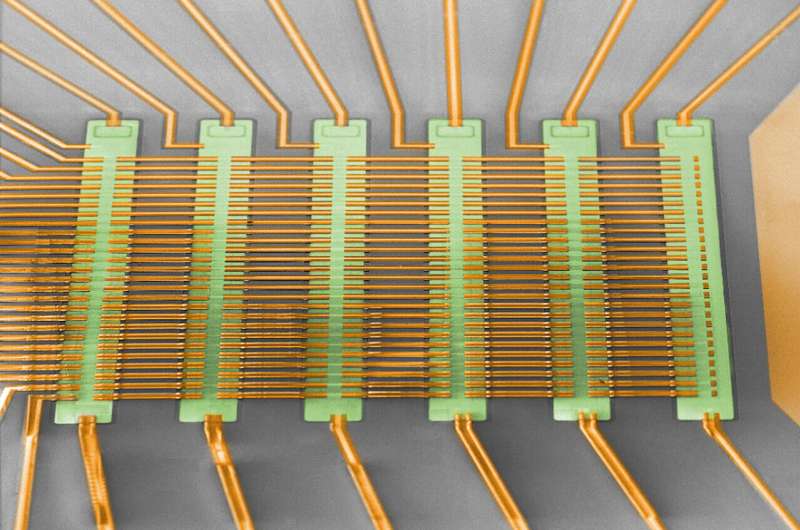

To evaluate their devices, the researchers arranged 156 of them in a crossbar pattern, then used them to train a neural network to distinguish between three different letters of the Roman alphabet ("M," "P" and "I"). Remarkably, their devices attained energy efficiencies of over 3,500 TOPS/W (tera-operations per second per watt) at 8-bit precision, which is 35 to 300 times higher than those of existing memristive approaches. These findings highlight the potential of the team's new memcapacitors for running large and complex deep learning models with very low power consumption (in the μW regime).
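The headline efficiency can be turned into an energy per operation with a little arithmetic. The sketch below assumes the conventional meaning of TOPS/W (10^12 operations per second per watt, i.e. per joule). The baseline efficiencies it prints are simply 3,500 divided by the quoted 35x and 300x ratios, so they are implied by the comparison rather than reported in the article; the function names are hypothetical.

```python
def energy_per_op_fj(tops_per_watt: float) -> float:
    """Energy per operation, in femtojoules, for an efficiency given in TOPS/W.

    1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule,
    so the energy per operation is the reciprocal of the operations per joule.
    """
    ops_per_joule = tops_per_watt * 1e12
    return 1e15 / ops_per_joule  # joules -> femtojoules


def implied_baseline(tops_per_watt: float, advantage: float) -> float:
    """Efficiency (TOPS/W) of a reference design that is `advantage` times less efficient."""
    return tops_per_watt / advantage


reported = 3500.0  # TOPS/W at 8-bit precision, as stated in the article
print(f"{energy_per_op_fj(reported):.2f} fJ per operation")  # 0.29 fJ per operation
for ratio in (35, 300):
    print(f"{ratio}x advantage -> baseline of {implied_baseline(reported, ratio):.1f} TOPS/W")
```

Under these assumptions, "over 3,500 TOPS/W" means at most about 0.29 fJ per operation, and the quoted ratios correspond to comparison designs of roughly 12 to 100 TOPS/W.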

"We believe that the next generation of human-machine interfaces will heavily depend on automatic speech recognition (ASR)," Demasius said. "This not only includes wake-up-word detection, but also more complex algorithms, like speech-to-text conversion. Currently ASR is mostly done in the cloud, but processing on the edge has advantages with regard to data protection, amongst others."

If speech recognition techniques improve further, speech could eventually become the primary means through which users communicate with computers and other electronic devices. However, such an improvement will be difficult or impossible to implement without large neural network-based models with billions of parameters. New devices that can efficiently implement these models, such as the one developed by Demasius and his colleagues, could thus play a crucial role in realizing the full potential of artificial intelligence (AI).

"We founded a start-up that facilitates this superior technology," Demasius said. "SEMRON's vision is to enable these large artificial neural networks on a very small form factor and power these algorithms with battery power or even energy harvesting, for instance on earbuds or any other wearable."

SEMRON, the start-up founded by Demasius and his colleagues, has already applied for several patents related to deep learning models for speech recognition. In the future, the team plans to develop more neural network-based models, while also trying to scale up the memcapacitor-based system they designed by increasing both its efficiency and its device density.

"We are constantly filing patents for any topic related to this," Demasius said. "Our ultimate goal is to enable every device to carry heavy AI functionality on-device, and we also envision a lot of approaches when it comes to training or deep learning model architectures. Spiking neural nets and transformer-based neural networks are only some examples. One strength is that we can support all these approaches, but of course constant research is necessary to keep up with all new concepts in that domain."

More information: Kai-Uwe Demasius, Aron Kirschen, and Stuart Parkin, Energy-efficient memcapacitor devices for neuromorphic computing, Nature Electronics (2021). DOI: 10.1038/s41928-021-00649-y

© 2021 Science X Network