An energy-efficient, lightweight deep-learning algorithm for future optical artificial intelligence

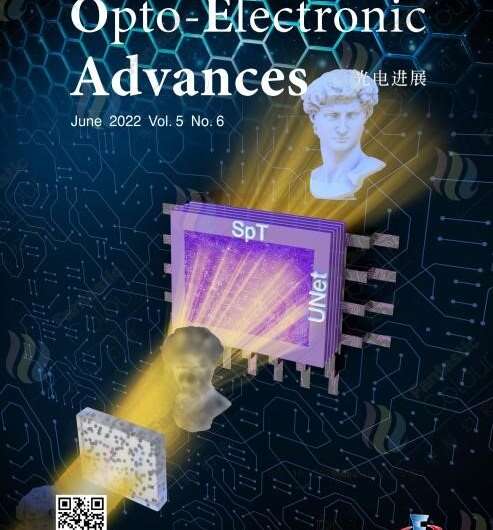

A new publication from Opto-Electronic Advances discusses a high-performance "non-local" generic face reconstruction model built on the lightweight Speckle-Transformer (SpT) UNet.

Optical artificial intelligence for computational imaging (CI) is designed and developed by combining the feature-extraction and generalization capabilities of existing advanced computer neural networks with the light-speed, low-energy, parallel multi-dimensional signal processing that optical artificial intelligence algorithms offer.

Significant progress has been made in CI, where electronic convolutional neural networks (CNNs) have demonstrated image reconstruction in applications ranging from non-invasive medical imaging through tissue to autonomous vehicle navigation in fog. However, because the convolution operator's kernel covers only a small "local" neighborhood, CNNs reconstruct spatially dense patterns, such as generic face images, inaccurately. A "non-local" operator that can capture long-range dependencies across the feature maps is therefore urgently needed. Transformers, which rely entirely on the attention mechanism and are easily parallelized, offer such an operator.
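To make the "local" versus "non-local" distinction concrete, here is a minimal single-head scaled dot-product attention sketch in pure Python. It is the textbook operation from the original transformer design, not code from the SpT UNet, and the token values are made up for illustration. Every output token is a softmax-weighted sum over all tokens, so its receptive field spans the whole input rather than a fixed kernel window.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """Single-head attention; q, k, v are lists of token vectors (n_tokens x d)."""
    d = len(q[0])
    weights = []
    for q_i in q:
        # Similarity of query i to EVERY key, not just its spatial neighbors.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d) for k_j in k]
        weights.append(softmax(scores))
    # Each output token is a weighted sum over all value vectors.
    out = [
        [sum(w * v_j[c] for w, v_j in zip(row, v)) for c in range(len(v[0]))]
        for row in weights
    ]
    return out, weights


# Four illustrative "patch" tokens; tokens 0 and 3 are three positions apart.
tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.2]]
out, weights = scaled_dot_product_attention(tokens, tokens, tokens)
print([round(w, 3) for w in weights[0]])
```

Query token 0 places as much weight on token 3, three positions away, as on itself, because their contents are similar. A 3-tap convolution centered on token 0 could only combine tokens 0 and 1 (plus padding), whatever their contents.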

Moreover, transformers make minimal assumptions about the structure of the problem compared with their convolutional and recurrent counterparts in deep learning. In vision, transformers have been used successfully for image recognition, object detection, segmentation, image super-resolution, video understanding, image generation, text-to-image synthesis, and more. However, to the authors' knowledge, no previous study has explored how transformers perform in CI tasks such as speckle reconstruction.

In this article, a "non-local" model termed the Speckle-Transformer (SpT) UNet is implemented for highly accurate, energy-efficient parallel processing of speckle reconstructions. The network follows a UNet architecture built from advanced transformer encoder and decoder blocks.

For better feature preservation and extraction, the authors propose and demonstrate three key mechanisms: pre-batch normalization (pre-BN), position encoding in multi-head attention and multi-head cross-attention (MHA/MHCA), and self-built up- and down-sampling pipelines. For "scalable" data acquisition, diffusers of four different grits within a 40 mm detection range are considered. Notably, the SpT UNet is lightweight, with roughly an order of magnitude fewer parameters than other state-of-the-art "non-local" networks used in vision computation, such as ViT and the Swin Transformer.
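The article does not spell out what "pre-BN" involves. One common reading, by analogy with pre-norm transformers, is that batch normalization is applied to a block's input before the attention sublayer, while the residual connection bypasses the normalization. The pure-Python sketch below contrasts that ordering with post-normalization; `zero_sublayer` is a hypothetical placeholder for the attention sublayer, and the batch norm omits the learnable scale and shift. It illustrates the ordering only and is not the SpT UNet implementation.

```python
import math


def batch_norm(batch, eps=1e-5):
    """Normalize each feature across the batch (no learnable scale/shift)."""
    n, d = len(batch), len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(d)]
    variances = [sum((row[j] - means[j]) ** 2 for row in batch) / n for j in range(d)]
    return [
        [(row[j] - means[j]) / math.sqrt(variances[j] + eps) for j in range(d)]
        for row in batch
    ]


def add(a, b):
    """Element-wise sum of two equally shaped batches."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def pre_bn_block(batch, sublayer):
    """Pre-BN ordering: x + sublayer(BN(x)); the residual path carries x untouched."""
    return add(batch, sublayer(batch_norm(batch)))


def post_bn_block(batch, sublayer):
    """Post-BN ordering, for comparison: BN(x + sublayer(x))."""
    return batch_norm(add(batch, sublayer(batch)))


def zero_sublayer(batch):
    """Hypothetical stand-in for an attention sublayer that outputs zeros."""
    return [[0.0] * len(row) for row in batch]


x = [[10.0, 2.0], [12.0, 4.0], [14.0, 6.0]]
print(pre_bn_block(x, zero_sublayer))   # [[10.0, 2.0], [12.0, 4.0], [14.0, 6.0]]
print(post_bn_block(x, zero_sublayer))  # mean and scale of each feature normalized away
```

With a sublayer that contributes nothing, the pre-BN block returns its input exactly, whereas the post-BN block re-centers and rescales it. This is one way a pre-normalized residual path can help preserve features, though the paper's own rationale may differ.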

The authors further evaluate the network performance quantitatively with four indicators: the Pearson correlation coefficient (PCC), the structural similarity index measure (SSIM), the Jaccard index (JI), and the peak signal-to-noise ratio (PSNR). The lightweight SpT UNet proves highly efficient and performs strongly in comparison, with PCC and SSIM exceeding 0.989 and 0.950, respectively. Because it is a parallel-processing model, the lightweight SpT UNet can be further implemented as an all-optical neural network for optical artificial intelligence, offering superior feature extraction together with light-speed, passive processing.
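The article names the four indicators without defining them. Below is a minimal pure-Python sketch of their textbook definitions on flattened images with intensities in [0, 1]. Several choices are assumptions rather than the authors' evaluation code: the 0.5 binarization threshold for the Jaccard index, `max_val=1.0` for PSNR and SSIM, and a single-window "global" SSIM (standard SSIM averages over local Gaussian-weighted windows).

```python
import math


def _mean(xs):
    return sum(xs) / len(xs)


def pcc(x, y):
    """Pearson correlation coefficient between two flattened images."""
    mx, my = _mean(x), _mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("PCC is undefined for a constant image")
    return cov / (sx * sy)


def psnr(x, y, max_val=1.0):
    """Peak signal-to-noise ratio in dB for intensities in [0, max_val]."""
    mse = _mean([(a - b) ** 2 for a, b in zip(x, y)])
    return math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


def jaccard(x, y, threshold=0.5):
    """Jaccard index of the two images after binarizing at an assumed threshold."""
    a = {i for i, v in enumerate(x) if v > threshold}
    b = {i for i, v in enumerate(y) if v > threshold}
    union = a | b
    return 1.0 if not union else len(a & b) / len(union)


def global_ssim(x, y, max_val=1.0):
    """SSIM from whole-image statistics (one window, no Gaussian weighting)."""
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    mx, my = _mean(x), _mean(y)
    vx = _mean([(a - mx) ** 2 for a in x])
    vy = _mean([(b - my) ** 2 for b in y])
    cov = _mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


truth = [0.0, 0.2, 0.4, 0.8]
recon = [0.1, 0.3, 0.5, 0.9]  # illustrative: the ground truth shifted up by 0.1
print(pcc(truth, recon), psnr(truth, recon), jaccard(truth, recon), global_ssim(truth, recon))
```

For this made-up pair, PCC is 1 because a constant offset leaves the correlation unchanged, PSNR is about 20 dB, and the global SSIM drops to roughly 0.97 because SSIM also compares mean intensities. Reporting several indicators side by side guards against such blind spots in any single one.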

More information: Yangyundou Wang et al., High performance "non-local" generic face reconstruction model using the lightweight Speckle-Transformer (SpT) UNet, Opto-Electronic Advances (2022). DOI: 10.29026/oea.2023.220049