Psychology graduate explores human preferences when considering autonomous robots as companions, teammates

With fierce debate roiling over the promise versus the perceived dangers of artificial intelligence (AI) and autonomous robots, Nicole Moore of the University of Alabama in Huntsville (UAH) has published an especially timely study.

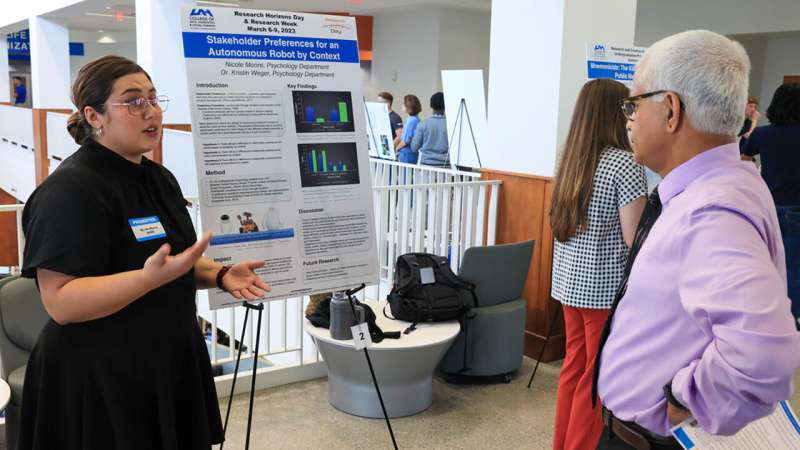

Titled "Stakeholder Preferences for an Autonomous Robot Teammate," Moore's research focuses on user-held preferences: specifically, which factors in autonomous robot design are the most preferable to their human counterparts, and whether these criteria vary according to the ways the technology is applied. The researcher received her bachelor's degree in psychology this May from UAH, a part of the University of Alabama System.

Moore participated in the Interdisciplinary Undergraduate Experience (INCLUDE) program, a university-wide initiative that draws students from various disciplines. In this case, students from philosophy, psychology, computer science, industrial and systems engineering and mechanical engineering collaborated on a grant challenge during their capstone coursework.

"The INCLUDE team was tasked with designing an autonomous robot to participate in a simulation of capture the flag against another autonomous robot," Moore explains. "My role was to examine what the stakeholder preferences were toward the design, interaction and function of the robot."

The researcher notes that previous literature on autonomous robots suggests people tend to attribute human qualities to their mechanical counterparts. "But there are additional factors that affect the trust associated with them," she notes.

The study used a mixed-methods survey design. Participants were randomly assigned to read a vignette about either a robotic sports teammate or a robotic social companion, then answered open-ended and closed-ended questions about their robot design preferences. The survey was followed by interviews with members of industry and the Department of Defense about the design of a robotic combat companion.

Rather than the "one-size-fits-all" humanlike design often depicted in popular culture, Moore's study indicates that preferred robot types and function preferences can vary widely, depending on the specific application.

"For instance, individuals preferred the robotic social companion to be more social and emotional in its interactions with other humans and robots alike," the researcher learned. "In contrast, for the robotic sports teammate, a more dangerous and aggressive interaction with others was valued. Results from the interview responses regarding the design features of a robotic combat companion showed preferences to exist for more machine-like robots.

"Our results also showed that when conceptualizing the design of autonomous robots for human interaction, the communication modality is an essential factor," Moore goes on to say. For example, individuals preferred the robotic social companion to use a female voice. For the robotic sports teammate, participants valued a masculine tone, while an authoritative masculine voice was preferred for a robotic combat companion.

As for the dangers of autonomous robots and runaway AI, Moore points to sources like Hollywood as the culprit behind much of the worry, but stresses the importance of gauging stakeholders' views to address specific concerns.

"I think that there is a lot of fear and uncertainty that goes with change," she says. "The majority of the respondents said a similar thing: there has to be a way to turn off or activate a 'kill' switch."

The recent graduate believes it's vital to focus on the benefits of these technologies as well, rather than simply being fearful of what lies ahead.

"Utilizing robots can be extremely beneficial to many different industries and potentially save lives," the researcher points out. "The military talks about using autonomous robots for surveillance missions instead of risking a human soldier. The caregiving industry is in dire need of caregivers for the elderly, but there aren't enough. I believe if the right design elements are taken into consideration under an ethically regulated umbrella, autonomous robots can help society."

The graduate's findings highlight the benefits of the interdisciplinary work being performed not only in the Department of Psychology but across the university as a whole, demonstrating how work in one field can inform others, such as science, technology, engineering and math applications.