YouTube video recommendations lead to more extremist content for right-leaning users, researchers suggest

YouTube tends to recommend videos that are similar to what people have already watched. New research has found that those recommendations can lead users down a rabbit hole of extremist political content.

A multidisciplinary research team in communication and computer science at the University of California, Davis, performed a systematic audit of YouTube's video recommendations in 2021 and 2022. They tested how a person's ideological leaning might affect which videos YouTube's algorithms recommend to them. For right-leaning users, video recommendations were more likely to come from channels that share political extremism, conspiracy theories and otherwise problematic content. Such recommendations were markedly fewer for left-leaning users, researchers said.

"YouTube's algorithm doesn't shield people from content from across the political spectrum," said Magdalena Wojcieszak, a professor of communication in the College of Letters and Science and a corresponding author of the study. "However, it does recommend videos that mostly match a user's ideology, and for right-leaning users those videos often come from channels that promote extremism, conspiracy theories and other types of problematic content."

The study, "Auditing YouTube's recommendation system for ideologically congenial, extreme, and problematic recommendations," was published this month in the Proceedings of the National Academy of Sciences.

YouTube is the most popular video-sharing platform, used by 81% of the U.S. population and with a steadily growing user base. More than 70% of content watched on YouTube is recommended by its algorithm.

Sock puppets and the recommendation rabbit hole

The research team created 100,000 "sock puppets" on YouTube to test the platform's recommendations. A "sock puppet" is an automated account that mimics an actual person using the platform. Just like actual YouTube users, this study's sock puppets watched videos and gathered YouTube's video recommendations.

Altogether, these sock puppets watched nearly 10 million YouTube videos from 120,073 channels that reflected ideological categories from the far left to the far right. To identify the political slant of a YouTube channel, the team cross-referenced the Twitter accounts of political figures that each channel followed.

Each of the research team's sock puppet accounts watched 100 randomly sampled videos from its assigned ideology for 30 seconds each. After a sock puppet had watched its videos, the team collected its homepage recommendations.

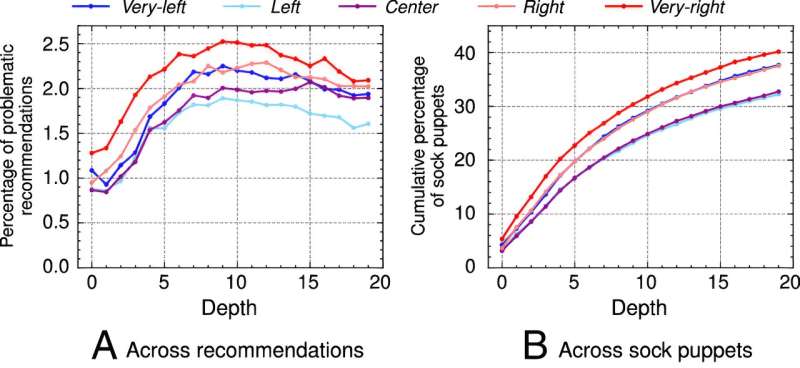

The team also collected the "up-next" recommendations from a randomly selected video. These showed which videos a real user would likely watch by passively following the recommendations.
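The watching protocol described above can be pictured with a small simulation. This is only an illustrative sketch, not the researchers' code: it never contacts YouTube, and the names `SockPuppet`, `build_watch_plan` and `video_pool`, the five ideology labels and the placeholder video IDs are all assumptions made for the example. Only the two constants, 100 videos and 30 seconds each, come from the study description.

```python
import random
from dataclasses import dataclass, field

VIDEOS_PER_PUPPET = 100  # from the article: 100 randomly sampled videos
WATCH_SECONDS = 30       # from the article: 30 seconds per video


@dataclass
class SockPuppet:
    """Hypothetical stand-in for one automated account."""
    ideology: str
    watch_plan: list = field(default_factory=list)


def build_watch_plan(ideology, video_pool, rng):
    """Sample videos without replacement from the puppet's assigned ideology."""
    sampled = rng.sample(video_pool[ideology], VIDEOS_PER_PUPPET)
    return [(video_id, WATCH_SECONDS) for video_id in sampled]


# Hypothetical pool of placeholder video IDs per ideology category.
# The category labels are assumed; the article only says the categories
# ran from the far left to the far right.
video_pool = {
    ideology: [f"{ideology}-video-{i}" for i in range(500)]
    for ideology in ["far-left", "left", "center", "right", "far-right"]
}

rng = random.Random(0)  # fixed seed so the sketch is reproducible
puppet = SockPuppet("right", build_watch_plan("right", video_pool, rng))
total_watch = sum(seconds for _, seconds in puppet.watch_plan)

print(len(puppet.watch_plan), total_watch)  # 100 3000
```

Under these assumptions each account spends 3,000 seconds, or 50 minutes, building a watch history before any recommendations are collected.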

Extremist content becomes self-reinforcing

In this study, the research team defined problematic channels as those that shared videos promoting extremist ideas, such as white nationalism, the alt-right, QAnon and other conspiracy theories. More than 36% of all sock puppet users in the experiment received video recommendations from problematic channels. That share was 32% for centrist and left-leaning users and 40% for the most right-leaning accounts.
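A percentage like those above could be computed by grouping accounts by ideology and counting how many received a recommendation from a flagged channel. The sketch below is a toy illustration under stated assumptions: the function `share_exposed`, the channel IDs and the six sample accounts are invented, and counting an account as exposed after at least one such recommendation is an assumed reading of the article, not a detail it confirms.

```python
from collections import defaultdict


def share_exposed(puppets, problematic_channels):
    """Return, per ideology, the fraction of accounts that received at least
    one recommendation from a channel in problematic_channels (assumed rule)."""
    totals = defaultdict(int)
    exposed = defaultdict(int)
    for ideology, recommended_channels in puppets:
        totals[ideology] += 1
        if any(ch in problematic_channels for ch in recommended_channels):
            exposed[ideology] += 1
    return {ideology: exposed[ideology] / totals[ideology] for ideology in totals}


# Invented toy data: (ideology, channels recommended to that account).
problematic = {"chan-x", "chan-y"}
puppets = [
    ("left", ["chan-a", "chan-b"]),
    ("left", ["chan-x"]),
    ("left", ["chan-a"]),
    ("right", ["chan-x", "chan-c"]),
    ("right", ["chan-y"]),
    ("right", ["chan-d"]),
]

shares = share_exposed(puppets, problematic)
for ideology, share in shares.items():
    print(f"{ideology}: {share:.0%}")
```

In this toy data one of three left-leaning accounts and two of three right-leaning accounts count as exposed; the numbers are unrelated to the study's results.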

Following the recommended videos can have a strong impact on what people see on YouTube, researchers said.

"Without requiring any additional input from people using the platform, YouTube's recommendations can, on their own, activate a cycle of exposure to more and more problematic videos," said Muhammad Haroon, a doctoral student in computer science in the College of Engineering and a corresponding author on the study.

"It's important that we identify the specific factors that increase the likelihood that users encounter extreme or problematic content on platforms like YouTube," Wojcieszak said. "Considering the current political climate in the United States, we need greater transparency in these social recommendation systems that may lead to filter bubbles and rabbit holes of radicalization."

More information: Muhammad Haroon et al, Auditing YouTube's recommendation system for ideologically congenial, extreme, and problematic recommendations, Proceedings of the National Academy of Sciences (2023). DOI: 10.1073/pnas.2213020120