This article has been reviewed according to Science X's editorial process and policies. Editors have marked it as fact-checked, from a trusted source, and proofread.

Scientists uncover quantum-inspired vulnerabilities in neural networks

In a recent study merging the fields of quantum physics and computer science, Dr. Jun-Jie Zhang and Prof. Deyu Meng have explored the vulnerabilities of neural networks through the lens of the uncertainty principle in physics.

Their work, published in National Science Review, draws a parallel between the susceptibility of neural networks to targeted attacks and the limits imposed by the uncertainty principle, a cornerstone of quantum physics which states that certain pairs of properties, such as a particle's position and momentum, cannot both be known with arbitrary precision at the same time.
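For reference, the textbook form of the principle the article alludes to is Heisenberg's relation for position $x$ and momentum $p$, a conjugate pair: the product of their standard deviations is bounded below by half the reduced Planck constant $\hbar$. The second line sketches the neural-network pairing the article describes, in which an input feature $x_i$ plays the role of position and the gradient of the loss $L$ with respect to that feature plays the role of momentum. This is only the analogy as stated in the article; the paper's own inequality is not reproduced here.

$$
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
$$

$$
x_i \;\longleftrightarrow\; x, \qquad \frac{\partial L}{\partial x_i} \;\longleftrightarrow\; p
$$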

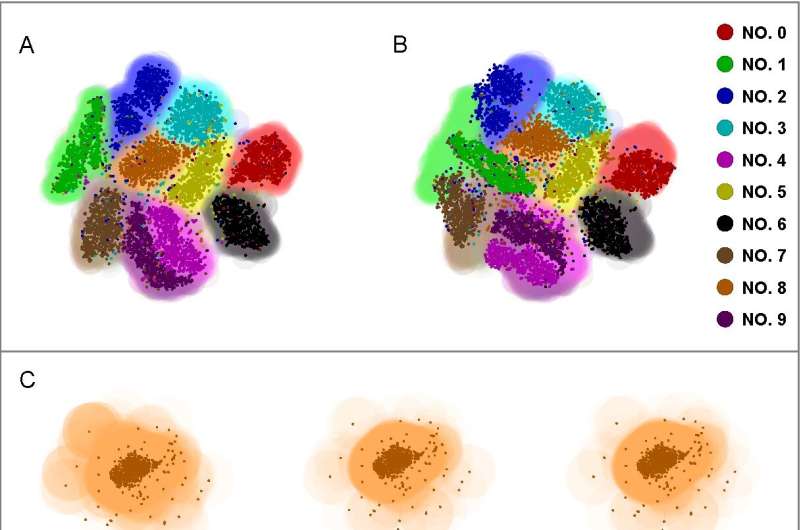

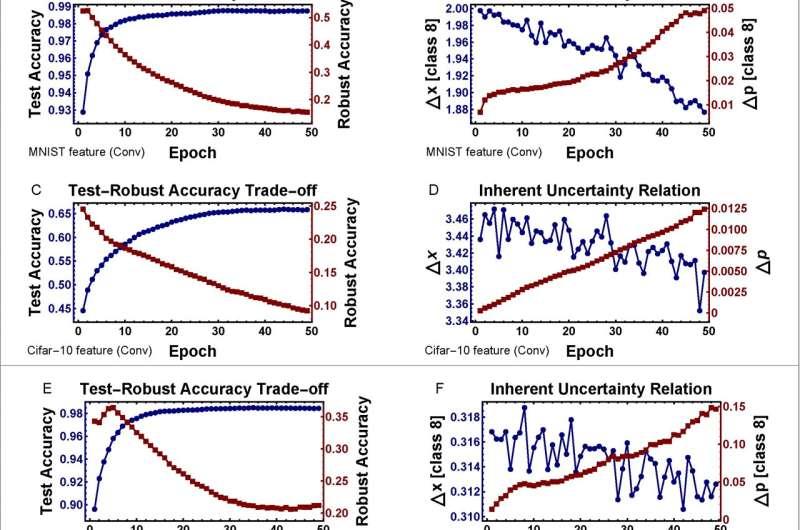

The researchers' quantum-inspired analysis suggests that adversarial attacks exploit a trade-off between the precision of a network's input features and that of the gradients computed with respect to those features.

"When considering the architecture of deep neural networks, which involve a loss function for learning, we can always define a conjugate variable for the inputs by determining the gradient of the loss function with respect to those inputs," says Dr. Zhang, whose expertise lies in mathematical physics.
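The quote defines the input's conjugate variable as the gradient of the loss with respect to the input. A minimal sketch of that definition, assuming a hypothetical one-layer logistic-regression model with binary cross-entropy loss (the weights `w`, bias `b`, and all function names are illustrative, not taken from the paper), computes this gradient analytically and checks it against finite differences. A deep network would normally obtain the same quantity by backpropagation in an autodiff framework.

```python
import math

# Hypothetical toy model: logistic regression with fixed weights w and bias b.
# It only illustrates the definition of the input "conjugate variable" dL/dx;
# the networks studied in the paper are deep models.


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss(x, y, w, b):
    """Binary cross-entropy loss for one input vector x with label y in {0, 1}."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def input_gradient(x, y, w, b):
    """Analytic gradient of the loss with respect to the INPUT x (not the weights).

    With z = w.x + b and p = sigmoid(z), dL/dz = p - y and dz/dx_i = w_i,
    so dL/dx_i = (p - y) * w_i.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def numerical_gradient(x, y, w, b, h=1e-6):
    """Central finite differences, used only to sanity-check the analytic gradient."""
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_plus[i] += h
        x_minus = list(x)
        x_minus[i] -= h
        grad.append((loss(x_plus, y, w, b) - loss(x_minus, y, w, b)) / (2 * h))
    return grad


if __name__ == "__main__":
    w, b = [0.8, -1.2, 0.5], 0.1
    x, y = [1.0, 0.5, -0.3], 1
    print("analytic :", input_gradient(x, y, w, b))
    print("numerical:", numerical_gradient(x, y, w, b))
```

For this model the gradient has the closed form (p - y) * w, so each feature's conjugate variable is proportional to its weight; the finite-difference check is there only to confirm that formula.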

The researchers hope this work will prompt a reevaluation of the assumed robustness of neural networks and a deeper understanding of their limitations. By subjecting a neural network model to adversarial attacks, Dr. Zhang and Prof. Meng observed a trade-off between the model's accuracy and its resilience.
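The article does not say which attacks were used. As an assumed, standard example of how an attack exploits the input gradient, the sketch below applies the fast gradient sign method (FGSM, introduced by Goodfellow et al.) to a hypothetical logistic-regression classifier: each input feature is nudged by `eps` in the direction that increases the loss. The weights `W`, `B` and the function names are illustrative only.

```python
import math

# Hypothetical toy classifier: logistic regression with fixed weights.
W = [0.8, -1.2, 0.5]
B = 0.1


def predict_proba(x):
    """Model's probability that the label is 1."""
    z = sum(wi * xi for wi, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def loss(x, y):
    """Binary cross-entropy loss for input x and label y in {0, 1}."""
    p = predict_proba(x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def input_gradient(x, y):
    """dL/dx_i = (p - y) * w_i for logistic regression with cross-entropy loss."""
    p = predict_proba(x)
    return [(p - y) * wi for wi in W]


def _sign(v):
    """Return -1, 0, or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, eps):
    """Fast gradient sign method: x_adv = x + eps * sign(dL/dx).

    Every feature moves by exactly eps, in whichever direction raises the loss.
    """
    g = input_gradient(x, y)
    return [xi + eps * _sign(gi) for xi, gi in zip(x, g)]


if __name__ == "__main__":
    x, y = [1.0, 0.5, -0.3], 1
    for eps in (0.0, 0.1, 0.3, 0.6):
        x_adv = fgsm(x, y, eps)
        print(f"eps={eps:.1f}  p(y=1)={predict_proba(x_adv):.3f}  loss={loss(x_adv, y):.3f}")
```

Here the clean input gives p(y=1) of about 0.54. Because the toy model is linear, each unit of `eps` lowers the logit by the sum of the absolute weights (2.5 for these weights), so `eps = 0.1` already drops p(y=1) below 0.5 and flips the prediction.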

Their findings indicate that neural networks, which are mathematically analogous to quantum systems in this respect, struggle to resolve both conjugate variables (the input feature and the gradient of the loss function with respect to it) precisely at the same time, hinting at an intrinsic vulnerability. This insight is crucial for developing new protective measures against sophisticated threats.

"The importance of this research is far-reaching," notes Prof. Meng, an expert in machine learning and the corresponding author of the paper.

"As neural networks play an increasingly critical role in essential systems, it becomes imperative to understand and fortify their security. This interdisciplinary research offers a fresh perspective for demystifying these complex 'black box' systems, potentially informing the design of more secure and interpretable AI models."

More information: Jun-Jie Zhang et al, Quantum-inspired analysis of neural network vulnerabilities: The role of conjugate variables in system attacks, National Science Review (2024). DOI: 10.1093/nsr/nwae141